Thanks. I tried by adding these lines:

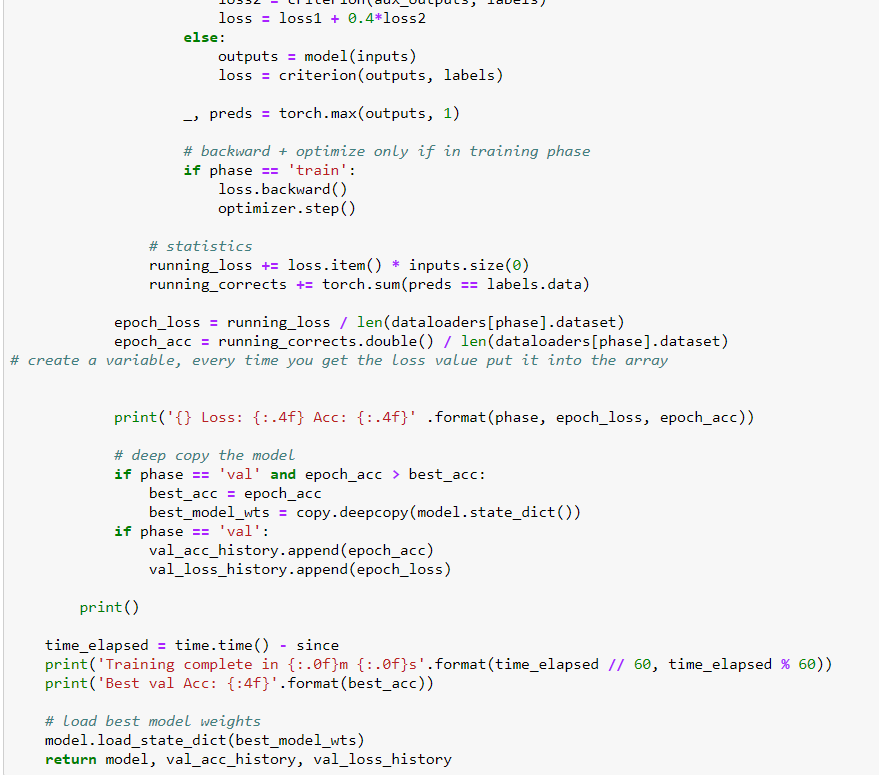

if phase == 'val' and epoch_acc > best_acc:

best_acc = epoch_acc

best_model_wts = copy.deepcopy(model.state_dict())

if phase == 'val':

val_acc_history.append(epoch_acc)

val_acc_history.append(epoch_loss)

elif phase == 'train':

train_acc_history.append(epoch_acc)

train_acc_history.append(epoch_loss)

....

....

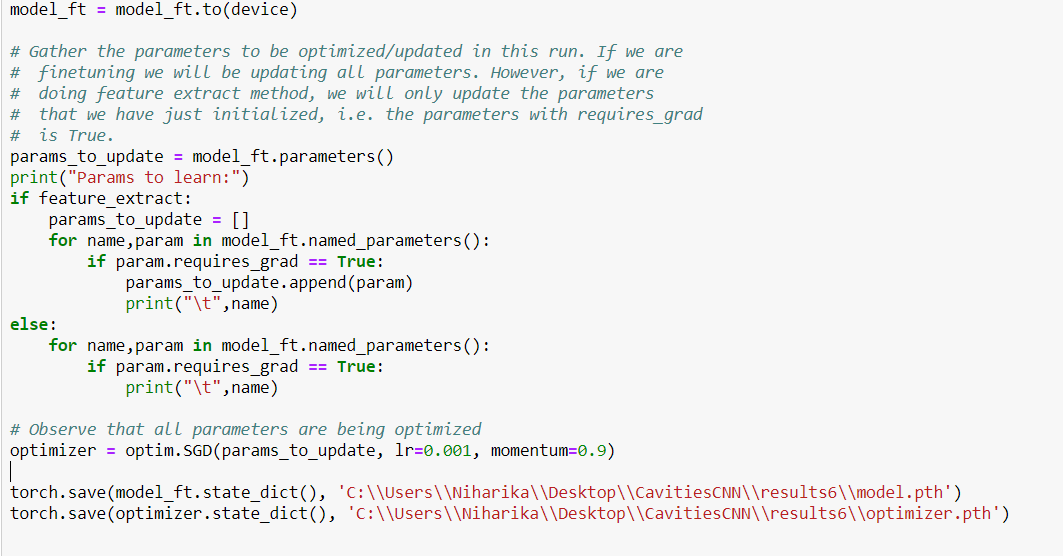

model_ft, hist = train_model(model_ft, dataloaders_dict, criterion, optimizer_ft, num_epochs=num_epochs, is_inception=(model_name=="inception"))

torch.save(model_ft.state_dict(), 'googlenet/standard_googlenet.pth')

ohist = []

#shist = []

ohist = [h.cpu.numpy() for h in hist]

#shist = [h.cpu().numpy() for h in train_model.epoch_loss]

plt.title("Loss&Acc Vs. Number of Training Epochs")

plt.xlabel(" Epochs")

plt.ylabel("Accuracy & Loss")

plt.plot(range(1,num_epochs+1),ohist,label="Training Accuracy")

plt.plot(range(1,num_epochs+1),ohist,label="Training Loss")

plt.plot(range(1,num_epochs+1),ohist,label="Validation Accuracy")

plt.plot(range(1,num_epochs+1),ohist,label="Validation Loss")

plt.ylim((0,1.))

plt.xticks(np.arange(1, num_epochs+1, 1.0))

plt.legend()

plt.savefig('plots.png')

plt.show()

but it throws an error:

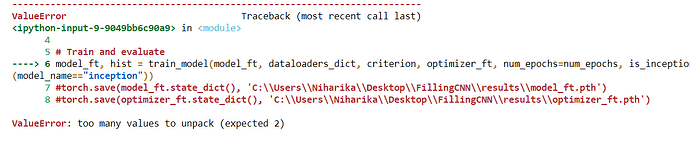

Traceback (most recent call last):

File "ftune.py", line 284, in <module>

model_ft, hist = train_model(model_ft, dataloaders_dict, criterion, optimizer_ft, num_epochs=num_epochs, is_inception=(model_name=="inception"))

ValueError: too many values to unpack (expected 2)

I tried also TensorboardX, like these snippets:

from tensorboardX import SummaryWriter

writer = SummaryWriter('runs')

...

...

def train_model(train_loader, model, criterion, optimizer, epoch):

if i % args.print_freq == 0:

print('Epoch: [{0}][{1}/{2}]\t'

'Time {batch_time.val:.3f} ({batch_time.avg:.3f})\t'

'Data {data_time.val:.3f} ({data_time.avg:.3f})\t'

'Loss {loss.val:.4f} ({loss.avg:.4f})\t'

'Prec@1 {top1.val:.3f} ({top1.avg:.3f})\t'

'Prec@5 {top5.val:.3f} ({top5.avg:.3f})'.format(

epoch, i, len(train_loader), batch_time=batch_time,

data_time=data_time, loss=losses, top1=top1, top5=top5))

niter = (epoch * len(train_loader))+i

writer.add_scalar('Train/Loss', losses.val, niter)

writer.add_scalar('Train/Prec@1', top1.val, niter)

writer.add_scalar('Train/Prec@5', top5.val, niter)

def validate(val_loader, model, criterion):

if i % args.print_freq == 0:

print('Test: [{0}/{1}]\t'

'Time {batch_time.val:.3f} ({batch_time.avg:.3f})\t'

'Loss {loss.val:.4f} ({loss.avg:.4f})\t'

'Prec@1 {top1.val:.3f} ({top1.avg:.3f})\t'

'Prec@5 {top5.val:.3f} ({top5.avg:.3f})'.format(

i, len(val_loader), batch_time=batch_time, loss=losses,

top1=top1, top5=top5))

niter = epoch*len(train_loader)+i

writer.add_scalar('Test/Loss', losses.val, niter)

writer.add_scalar('Test/Prec@1', top1.val, niter)

writer.add_scalar('Test/Prec@5', top5.val, niter)

but in validate function it throws an error like epoch is not defined etc.

could you share some snippets for TensorboardX?