bluesky314

November 25, 2018, 6:51pm

1

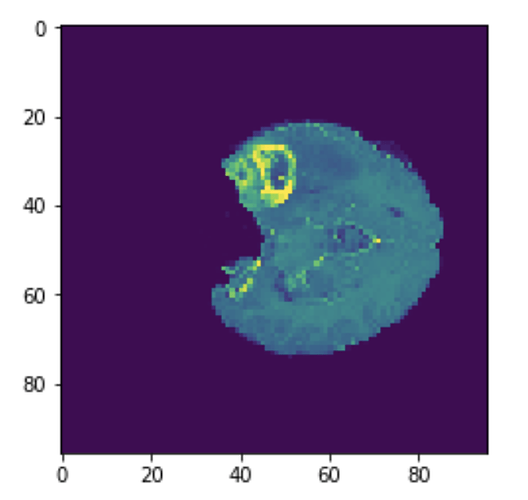

I have a stack of MRI images that look like the one above, with shape, say, [train_size, h, w, d].

There is a lot of black space, which makes my convolutions very heavy, so I want to trim the images on all four sides up to the first non-zero pixel. That is, I want to find the maximum amount I can trim from each of the four sides without losing any non-zero pixel from any image in the whole training set. This applies only to the height and width. How could I go about doing this?

ptrblck

November 26, 2018, 12:23am

2

This code should work for a [batch, channel, height, width]-shaped tensor:

    import torch

    x = torch.zeros(1, 3, 24, 24)
    x[0, :, 5:10, 6:11] = torch.randn(1, 3, 5, 5)

    non_zero = (x != 0).nonzero()  # Contains non-zero indices for all 4 dims
    h_min = non_zero[:, 2].min()
    h_max = non_zero[:, 2].max()
    w_min = non_zero[:, 3].min()
    w_max = non_zero[:, 3].max()
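To actually trim the tensor, the four indices can be used directly as slice bounds. Here is a minimal sketch, assuming the [batch, channel, height, width] layout from the snippet above; the function name `crop_to_global_bounds` is made up for illustration. For the [train_size, h, w, d] layout in the question, height and width would be dims 1 and 2 instead.

```python
import torch

def crop_to_global_bounds(x):
    """Crop a [batch, channel, height, width] tensor to the smallest box
    that contains every non-zero pixel of every image in the batch."""
    nz = (x != 0).nonzero()              # [N, 4] indices of non-zero entries
    if nz.numel() == 0:                  # all-zero input: nothing to crop to
        return x
    h_min, h_max = nz[:, 2].min().item(), nz[:, 2].max().item()
    w_min, w_max = nz[:, 3].min().item(), nz[:, 3].max().item()
    # The max indices are inclusive, so add 1 for the slice end.
    return x[:, :, h_min:h_max + 1, w_min:w_max + 1]

x = torch.zeros(2, 3, 24, 24)
x[0, :, 5:10, 6:11] = 1.0
x[1, :, 8:12, 2:9] = 1.0
cropped = crop_to_global_bounds(x)
print(cropped.shape)
```

Because the bounds are taken over the whole batch, every image keeps the same shape and no non-zero pixel is lost.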

jphoward

October 17, 2019, 1:06pm

3

@ptrblck this solution gives the min and max nonzero cell across all images in the batch, which is unlikely to be the desired behavior.

I’m currently trying to solve the same problem, and so far haven’t found anything that can be done without a loop.

That’s right and I think it would be the only way to keep the batch intact, wouldn’t it?

Let me know, if I’m missing something.
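For comparison, per-image bounds can be computed with a plain loop over the batch. This is only a sketch of the looped approach being discussed, not code from either poster; `per_image_bounds` is a hypothetical name, and falling back to the full image extent for all-zero images is an assumption.

```python
import torch

def per_image_bounds(x):
    """Return a [batch, 4] tensor of inclusive (h_min, h_max, w_min, w_max)
    for a [batch, channel, height, width] tensor."""
    _, _, H, W = x.shape
    out = []
    for img in x:                                    # img: [channel, H, W]
        nz = (img != 0).nonzero()                    # columns: (c, h, w)
        if nz.numel() == 0:
            # Assumption: an all-zero image keeps its full extent.
            out.append(torch.tensor([0, H - 1, 0, W - 1], device=x.device))
        else:
            out.append(torch.stack([nz[:, 1].min(), nz[:, 1].max(),
                                    nz[:, 2].min(), nz[:, 2].max()]))
    return torch.stack(out)

x = torch.zeros(2, 3, 24, 24)
x[0, :, 5:10, 6:11] = 1.0
x[1, :, 8:12, 2:9] = 1.0
bounds = per_image_bounds(x)
print(bounds)
```

Each image gets its own box, so the crops generally differ in size and cannot be stacked back into one tensor without resizing or padding.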

jphoward

October 26, 2019, 2:14pm

5

Yup @ptrblck you are missing something

I’ve figured out how to do this now so I can show you! Here’s how I did the batchwise nonzero thing:

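    def _px_bounds(x, dim):
        # (batch_idx, position) pairs for every row/column with a non-zero sum
        c = x.sum(dim).nonzero().cpu()
        # Group the positions by batch index
        idxs,vals = torch.unique(c[:,0],return_counts=True)
        vs = torch.split_with_sizes(c[:,1],tuple(vals))
        d = {k.item():v for k,v in zip(idxs,vs)}
        # Images with no non-zero pixels fall back to the full extent
        # (`tensor` is the fastai helper)
        default_u = tensor([0,x.shape[-1]-1])
        b = [d.get(o,default_u) for o in range(x.shape[0])]
        b = [tensor([o.min(),o.max()]) for o in b]
        return torch.stack(b)

    #Cell
    def mask2bbox(mask):
        no_batch = mask.dim()==2
        if no_batch: mask = mask[None]
        bb1 = _px_bounds(mask,-1).t()
        bb2 = _px_bounds(mask,-2).t()
        # res[0] holds the per-image (row, col) minima, res[1] the maxima
        res = torch.stack([bb1,bb2],dim=1).to(mask.device)
        return res[...,0] if no_batch else res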


And here’s how I then use that to create cropped zoomed sections from a batch:

    import warnings
    import torch.nn.functional as F
    # `tensor` and `unsqueeze` are fastai helpers

    def _bbs2sizes(crops, init_sz, use_square=True):
        bb = crops.flip(1)
        szs = (bb[1]-bb[0])
        if use_square: szs = szs.max(0)[0][None].repeat((2,1))
        overs = (szs+bb[0])>init_sz
        bb[0][overs] = init_sz-szs[overs]
        lows = (bb[0]/float(init_sz))
        return lows,szs/float(init_sz)

    #Cell
    def crop_resize(x, crops, new_sz):
        # NB assumes square inputs. Not tested for non-square anythings!
        bs = x.shape[0]
        lows,szs = _bbs2sizes(crops, x.shape[-1])
        if not isinstance(new_sz,(list,tuple)): new_sz = (new_sz,new_sz)
        id_mat = tensor([[1.,0,0],[0,1,0]])[None].repeat((bs,1,1)).to(x.device)
        with warnings.catch_warnings():
            warnings.filterwarnings('ignore', category=UserWarning)
            sp = F.affine_grid(id_mat, (bs,1,*new_sz))+1.
            grid = sp*unsqueeze(szs.t(),1,n=2)+unsqueeze(lows.t()*2.,1,n=2)
            return F.grid_sample(x.unsqueeze(1), grid-1)

As you can see, the trick is to use grid_sample to resize them all to the same size after the crop. This is a nice way to do things like RandomResizedCrop on the GPU.
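The crop-then-resize idea can also be sketched with plain PyTorch calls only. This is not the code above: it is a simplified illustration that builds one affine matrix per image and hands it to `F.affine_grid`, assuming inclusive (h_min, h_max, w_min, w_max) boxes and `align_corners=False`. The name `crop_and_resize` and the box layout are assumptions.

```python
import torch
import torch.nn.functional as F

def crop_and_resize(x, boxes, out_size):
    """x: [B, C, H, W] float tensor.
    boxes: [B, 4] inclusive (h_min, h_max, w_min, w_max) pixel indices.
    out_size: (out_h, out_w). Returns a [B, C, out_h, out_w] tensor."""
    B, C, H, W = x.shape
    boxes = boxes.to(device=x.device, dtype=x.dtype)
    h0, h1 = boxes[:, 0], boxes[:, 1] + 1     # convert to exclusive ends
    w0, w1 = boxes[:, 2], boxes[:, 3] + 1
    # In normalized [-1, 1] coordinates the box spans [2*w0/W - 1, 2*w1/W - 1];
    # the affine map sends the output range [-1, 1] onto that interval.
    theta = torch.zeros(B, 2, 3, device=x.device, dtype=x.dtype)
    theta[:, 0, 0] = (w1 - w0) / W            # x scale
    theta[:, 0, 2] = (w0 + w1) / W - 1        # x shift
    theta[:, 1, 1] = (h1 - h0) / H            # y scale
    theta[:, 1, 2] = (h0 + h1) / H - 1        # y shift
    grid = F.affine_grid(theta, (B, C, *out_size), align_corners=False)
    return F.grid_sample(x, grid, mode='bilinear', align_corners=False)

x = torch.arange(2 * 1 * 8 * 8, dtype=torch.float32).reshape(2, 1, 8, 8)
boxes = torch.tensor([[2, 5, 1, 4], [0, 3, 3, 6]])
same_size = crop_and_resize(x, boxes, (4, 4))     # output size equals box size
upsampled = crop_and_resize(x, boxes, (16, 16))   # crop and zoom in one step
print(same_size.shape, upsampled.shape)
```

When the output size equals the box size, the sample points land on pixel centres, so the result should match ordinary slicing; any other output size gives a bilinear resize of the crop.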


Thanks for sharing!

jphoward

October 26, 2019, 6:38pm

7

Yeah my preprocessing overall is 150x faster on CUDA, and I think that’s nearly all in these two methods.


tshadley

October 18, 2020, 6:57pm

8

Updated GitHub link to this solution:

        p = x.windowed(*window)
        if remove_max: p[p==1] = 0
        return gauss_blur2d(p, s=sigma*x.shape[-1])>thresh

    # Cell
    @patch
    def mask_from_blur(x:DcmDataset, window, sigma=0.3, thresh=0.05, remove_max=True):
        return to_device(x.scaled_px).mask_from_blur(window, sigma, thresh, remove_max=remove_max)

    # Cell
    def _px_bounds(x, dim):
        c = x.sum(dim).nonzero().cpu()
        idxs,vals = torch.unique(c[:,0],return_counts=True)
        vs = torch.split_with_sizes(c[:,1],tuple(vals))
        d = {k.item():v for k,v in zip(idxs,vs)}
        default_u = tensor([0,x.shape[-1]-1])
        b = [d.get(o,default_u) for o in range(x.shape[0])]
        b = [tensor([o.min(),o.max()]) for o in b]
        return torch.stack(b)

    # Cell
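For readers without the fastai helpers, here is a rough torch-only alternative that computes per-image bounds without a Python loop over the batch. It is a different technique from `_px_bounds` (masked min/max over an index range rather than `nonzero` plus grouping), and the name `per_image_bounds_vectorized` is hypothetical. Like `_px_bounds`, it falls back to the full extent for all-zero images.

```python
import torch

def per_image_bounds_vectorized(x):
    """x: [B, H, W] tensor. Returns a [B, 4] tensor of inclusive
    (h_min, h_max, w_min, w_max) per image."""
    mask = x != 0

    def first_last(hits):
        # hits: [B, n] booleans marking rows (or columns) with a non-zero pixel
        n = hits.shape[1]
        idx = torch.arange(n, device=hits.device)
        # Replace non-hits with sentinels so min/max only see real hits.
        first = torch.where(hits, idx, torch.full_like(idx, n)).min(dim=1).values
        last = torch.where(hits, idx, torch.full_like(idx, -1)).max(dim=1).values
        empty = first > last                  # true when there were no hits
        first = torch.where(empty, torch.zeros_like(first), first)
        last = torch.where(empty, torch.full_like(last, n - 1), last)
        return first, last

    h_min, h_max = first_last(mask.any(dim=2))   # which rows contain data
    w_min, w_max = first_last(mask.any(dim=1))   # which columns contain data
    return torch.stack([h_min, h_max, w_min, w_max], dim=1)

x = torch.zeros(3, 24, 24)
x[0, 5:10, 6:11] = 1.0
x[1, 8:12, 2:9] = 1.0        # image 2 is left all-zero on purpose
bounds = per_image_bounds_vectorized(x)
print(bounds)
```

Everything stays on the input's device, so this avoids the `.cpu()` round trip, at the cost of materialising a [B, n] index tensor per axis.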
