Also, during conversion I am getting the warnings below:

TracerWarning: Converting a tensor to a Python index might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

for j, i in product(range(conv_h), range(conv_w)):

TracerWarning: torch.tensor results are registered as constants in the trace. You can safely ignore this warning if you use this function to create tensors out of constant variables that would be the same every time you call this function. In any other case, this might cause the trace to be incorrect.

self.anchors = torch.tensor(self.anchors, device=outs[0].device).reshape(-1, 4)

TracerWarning: Converting a tensor to a Python float might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

self.anchors = torch.tensor(self.anchors, device=outs[0].device).reshape(-1, 4)

The warnings refer to this line in the code:

`self.anchors = torch.tensor(self.anchors, device=outs[0].device).reshape(-1, 4)`

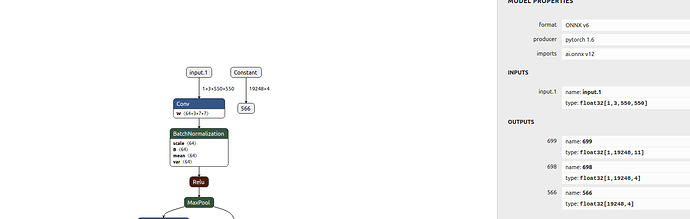
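For reference, here is a minimal sketch of the behaviour these warnings describe. It is unrelated to the model above, and `scale_by_first` is a made-up function used only for illustration. Converting a tensor to a Python number inside a traced function freezes that number into the trace:

```python
import torch


def scale_by_first(x):
    # float(...) takes the value out of the graph, so the tracer
    # records it as a constant and emits a TracerWarning.
    k = float(x[0])
    return x * k


example = torch.tensor([2.0, 3.0])
traced = torch.jit.trace(scale_by_first, example)

other = torch.tensor([5.0, 1.0])
eager_out = scale_by_first(other)   # uses k = 5.0 -> [25., 5.]
traced_out = traced(other)          # still uses k = 2.0 -> [10., 2.]
print(eager_out.tolist(), traced_out.tolist())
```

If the converted value really is the same for every input (for example, a fixed feature-map size), the warning is harmless; it only matters when the value would change between calls.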

The full forward function is below:

    def forward(self, img, box_classes=None, masks_gt=None):
        outs = self.backbone(img)
        outs = self.fpn(outs[1:4])

        if isinstance(self.anchors, list):
            for i, shape in enumerate([list(aa.shape) for aa in outs]):
                self.anchors += make_anchors(self.cfg, shape[2], shape[3], self.cfg.scales[i])

            self.anchors = torch.tensor(self.anchors, device=outs[0].device).reshape(-1, 4)

        # outs[0]: [2, 256, 69, 69], the feature map from P3
        # proto_out = self.proto_net(outs[0])  # proto_out: (n, 32, 138, 138)
        # proto_out = F.relu(proto_out, inplace=True)
        # proto_out = proto_out.permute(0, 2, 3, 1).contiguous()

        class_pred, box_pred = [], []

        for aa in outs:
            class_p, box_p = self.prediction_layers[0](aa)
            class_pred.append(class_p)
            box_pred.append(box_p)
            # coef_pred.append(coef_p)

        class_pred = torch.cat(class_pred, dim=1)
        box_pred = torch.cat(box_pred, dim=1)
        # coef_pred = torch.cat(coef_pred, dim=1)

        if self.training:
            # seg_pred = self.semantic_seg_conv(outs[0])
            return self.compute_loss(class_pred, box_pred, box_classes)
        else:
            class_pred = F.softmax(class_pred, -1)
            return class_pred, box_pred, self.anchors  # coef_pred, proto_out,
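One possible way to sidestep the warnings, sketched under assumptions: the anchors appear to depend only on the feature-map sizes, so they could be built from plain Python ints before tracing instead of inside `forward`. The helper below is a hypothetical stand-in for `make_anchors` (its body is not shown in this post), and the extra feature-map sizes, scales, image size, and aspect ratios are made-up values; only the 69x69 size comes from the comment in the code above.

```python
from itertools import product
from math import sqrt


def make_anchor_list(conv_h, conv_w, scale, img_size, aspect_ratios):
    """Hypothetical stand-in for make_anchors: takes plain ints and
    returns a flat list of [x, y, w, h] values for one feature map."""
    anchors = []
    for j, i in product(range(conv_h), range(conv_w)):
        # Anchor centres, normalised to [0, 1]
        x = (i + 0.5) / conv_w
        y = (j + 0.5) / conv_h
        for ar in aspect_ratios:
            r = sqrt(ar)
            anchors += [x, y, scale * r / img_size, scale / r / img_size]
    return anchors


# Assumed configuration (illustrative values, not taken from the real cfg)
feature_shapes = [(69, 69), (35, 35), (18, 18)]
scales = [24, 48, 96]

flat = []
for (h, w), s in zip(feature_shapes, scales):
    flat += make_anchor_list(h, w, s, img_size=544, aspect_ratios=[1, 0.5, 2])

num_anchors = len(flat) // 4
print(num_anchors)
```

The flat list could then be converted once with `torch.tensor(flat).reshape(-1, 4)` before export, for example in `__init__` or via a warm-up forward pass in eager mode, so that `self.anchors` is already a tensor and the `isinstance(self.anchors, list)` branch is not executed under the tracer. I have not verified this against this model, and either way the anchors end up fixed in the exported graph for the traced input size.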