Hello all,

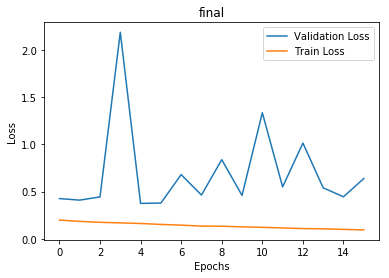

I am having problems with my validation accuracy and loss.

Although my training accuracy keeps increasing from epoch to epoch, my validation accuracy is unstable. I am using a pretrained DenseNet-121 model for binary classification, with a training set of 50,000 images and a validation set of 10,000 images.

The only change I made to the model is the number of outputs of its classifier layer.

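```python
import torch.nn as nn
import torch.optim as optim

# `model` is the pretrained DenseNet-121 with only its classifier's output count changed
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.003)

batch_size = 16
num_epochs = 16
```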
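A sketch of how per-epoch validation loss and accuracy can be computed, assuming a standard `DataLoader` that yields `(images, labels)` with integer labels 0/1 (as `nn.CrossEntropyLoss` expects). `evaluate` is a hypothetical helper name, and the usage below runs it on a tiny stand-in model with random data rather than the real DenseNet setup. The `model.eval()` call matters for DenseNet because it switches BatchNorm to its running statistics, so validation results don't depend on how the batch happens to be composed.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, criterion, device="cpu"):
    """Return (mean loss, accuracy) of `model` over every batch in `loader`."""
    model.eval()  # BatchNorm/Dropout switch to inference behaviour
    total_loss, correct, seen = 0.0, 0, 0
    with torch.no_grad():  # no gradients needed for validation
        for images, labels in loader:
            images, labels = images.to(device), labels.to(device)
            logits = model(images)  # shape: (batch, 2)
            loss = criterion(logits, labels)
            # criterion returns the batch mean, so weight it by batch size
            total_loss += loss.item() * labels.size(0)
            correct += (logits.argmax(dim=1) == labels).sum().item()
            seen += labels.size(0)
    model.train()  # back to training mode for the next epoch
    return total_loss / seen, correct / seen


# Usage with a tiny stand-in model and random data (hypothetical, for illustration).
torch.manual_seed(0)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(8, 2))
toy_data = TensorDataset(torch.randn(40, 8), torch.randint(0, 2, (40,)))
val_loader = DataLoader(toy_data, batch_size=16)  # last batch has only 8 samples
val_loss, val_acc = evaluate(toy_model, val_loader, nn.CrossEntropyLoss())
print(f"val_loss={val_loss:.4f} val_acc={val_acc:.3f}")
```

Weighting each batch's loss by its size keeps the epoch average correct even when the last batch is smaller.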

I couldn't figure out the problem. Am I overfitting here, or is something wrong with my number of epochs or batch size? Or should I look into tuning more of the optimizer's parameters?

Thanks!