Hello all.

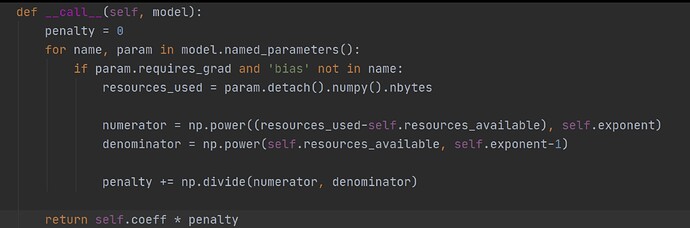

The screenshot above shows a custom regularizer I have implemented.

The goal of this regularizer is to minimize the total amount of space taken up by the weights, rather than to affect the value of any one weight. However, by the nature of a regularizer, I need a formulation that depends on the weights, since otherwise backprop won't take it into account: a term with no dependence on the weights contributes zero gradient.

Essentially, if there are too many weights, it should push all weights toward zero equally, and if there are too few weights, it should push the magnitudes of the weights up.

My current formulation works but is not weight dependent. I have a function that pushes toward the intended points given the number of bytes used and the number of bytes available, but as I said, it is weight independent.
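To make the question concrete, here is a rough sketch of the kind of weight-dependent version I have in mind. It is not the code from the screenshot. The function name `soft_resource_penalty`, the parameters `bytes_per_weight` and `eps`, and the smooth surrogate `w^2 / (w^2 + eps)` used as a soft "is this weight non-zero?" count are all assumptions made for illustration.

```python
import torch

def soft_resource_penalty(params, bytes_available, bytes_per_weight=4.0, eps=1e-3):
    """Hypothetical differentiable stand-in for a byte-budget regularizer."""
    # Soft indicator in [0, 1): close to 0 for w near 0, close to 1 for |w| >> sqrt(eps).
    # Summing it gives a smooth, weight-dependent approximation of the non-zero count.
    soft_count = sum((p.pow(2) / (p.pow(2) + eps)).sum() for p in params)

    # Still a tensor attached to the graph, not a Python scalar.
    resources_used = bytes_per_weight * soft_count

    # Squared relative deviation from the budget:
    #   over budget  -> gradient descent shrinks |w|
    #   under budget -> gradient descent grows |w|
    return ((resources_used - bytes_available) / bytes_available) ** 2
```

In a training loop this would presumably be added as `loss = task_loss + lam * soft_resource_penalty(model.parameters(), budget)`, where `lam` and `budget` are again hypothetical names. One caveat is that the push is not equal across weights: the surrogate's gradient is small for very large weights and exactly zero at `w = 0`, so this only approximates the behaviour I described.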

I appreciate any input.

PS: While I use numpy operations here, I am aware that I need to use torch operations on tensors in order to stay in the computation graph. The problem is that resources_used remains a plain scalar, so the computation stays weight independent.