I am trying to build a next-word prediction model with a bidirectional LSTM, using AdaptiveLogSoftmaxWithLoss and the Adam optimizer. However, after processing 40 batches of data, I'm getting free(): invalid pointer, Aborted (core dumped). The code runs fine after removing the loss.backward() call, so I concluded that the error has something to do with that line. I ran the code in gdb and here's the trace.

#0 __GI_raise (sig=sig@entry=6) at ../sysdeps/unix/sysv/linux/raise.c:50

#1 0x00007ffff7de5859 in __GI_abort () at abort.c:79

#2 0x00007ffff7e503ee in __libc_message (action=action@entry=do_abort,

fmt=fmt@entry=0x7ffff7f7a285 "%s\n") at ../sysdeps/posix/libc_fatal.c:155

#3 0x00007ffff7e5847c in malloc_printerr (

str=str@entry=0x7ffff7f7c9f8 "malloc(): memory corruption (fast)")

at malloc.c:5347

#4 0x00007ffff7e5b5bc in _int_malloc (av=av@entry=0x7ffe7c000020,

bytes=bytes@entry=32) at malloc.c:3594

#5 0x00007ffff7e5ed15 in __libc_calloc (n=<optimized out>,

elem_size=<optimized out>) at malloc.c:3428

#6 0x00007fff338064f6 in cudnnHostCalloc(unsigned long, unsigned long) ()

from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cpp.so

#7 0x00007fff33b77d0e in RNNBackwardData<float, float, float>::init(cudnnContext*, cudnnRNNStruct*, int, PerfOptions) ()

from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cpp.so

#8 0x00007fff32901097 in cudnnStatus_t RNN_DGRAD_LaunchTemplate<float, float, float, true>(cudnnContext*, cudnnRNNStruct*, int, cudnnTensorStruct* const*, void const*, void const*, void const*, void const*, void const*, void const*, cudnnTensorStruct* const*, void*, void*, void*, void const*, void*, void*, PerfOptions, bool) ()

from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cpp.so

#9 0x00007fff33b57b75 in cudnnRNNBackwardData () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cpp.so

#10 0x00007fff32d175a8 in at::native::_cudnn_rnn_backward_input(at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, at::Tensor const&, at::Tensor const&, std::array<bool, 3ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cpp.so

#11 0x00007fff32d1acf0 in at::native::_cudnn_rnn_backward(at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, at::Tensor const&, at::Tensor const&, std::array<bool, 4ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cpp.so

#12 0x00007fff9506d854 in c10::impl::wrap_kernel_functor_unboxed_<c10::impl::detail::WrapFunctionIntoFunctor_<c10::CompileTimeFunctionPointer<std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>), &c10::impl::detail::with_explicit_optional_tensors_<std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>), std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, at::Tensor const&, at::Tensor const&, std::array<bool, 4ul>), c10::CompileTimeFunctionPointer<std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, at::Tensor const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, 
at::Tensor const&, at::Tensor const&, std::array<bool, 4ul>), &at::(anonymous namespace)::(anonymous namespace)::wrapper__cudnn_rnn_backward> >::wrapper>, std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > >, c10::guts::typelist::typelist<at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul> > >, std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>)>::call(c10::OperatorKernel*, at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so

#13 0x00007fff834b6687 in at::_cudnn_rnn_backward(at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#14 0x00007fff84cc3c59 in torch::autograd::VariableType::(anonymous namespace)::_cudnn_rnn_backward(at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#15 0x00007fff84cc4582 in c10::impl::wrap_kernel_functor_unboxed_<c10::impl::detail::WrapFunctionIntoFunctor_<c10::CompileTimeFunctionPointer<std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>), &torch::autograd::VariableType::(anonymous namespace)::_cudnn_rnn_backward>, std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > >, c10::guts::typelist::typelist<at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul> > >, std::tuple<at::Tensor, at::Tensor, at::Tensor, std::vector<at::Tensor, std::allocator<at::Tensor> > > (at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>)>::call(c10::OperatorKernel*, at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, 
c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#16 0x00007fff834b6687 in at::_cudnn_rnn_backward(at::Tensor const&, c10::ArrayRef<at::Tensor>, long, at::Tensor const&, at::Tensor const&, c10::optional<at::Tensor> const&, at::Tensor const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, c10::optional<at::Tensor> const&, long, long, long, long, bool, double, bool, bool, c10::ArrayRef<long>, c10::optional<at::Tensor> const&, at::Tensor const&, std::array<bool, 4ul>) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#17 0x00007fff84c08026 in torch::autograd::generated::CudnnRnnBackward::apply(std::vector<at::Tensor, std::allocator<at::Tensor> >&&) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#18 0x00007fff85275771 in torch::autograd::Node::operator()(std::vector<at::Tensor, std::allocator<at::Tensor> >&&) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#19 0x00007fff8527157b in torch::autograd::Engine::evaluate_function(std::shared_ptr<torch::autograd::GraphTask>&, torch::autograd::Node*, torch::autograd::InputBuffer&, std::shared_ptr<torch::autograd::ReadyQueue> const&) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#20 0x00007fff8527219f in torch::autograd::Engine::thread_main(std::shared_ptr<torch::autograd::GraphTask> const&) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#21 0x00007fff85269979 in torch::autograd::Engine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so

#22 0x00007ffff59ed293 in torch::autograd::python::PythonEngine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) () from /home/akib/.local/lib/python3.8/site-packages/torch/lib/libtorch_python.so

#23 0x00007ffff6a04d84 in ?? () from /lib/x86_64-linux-gnu/libstdc++.so.6

#24 0x00007ffff7da6609 in start_thread (arg=<optimized out>) at pthread_create.c:477

#25 0x00007ffff7ee2293 in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95
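
The trace above only shows native frames from an autograd worker thread. To also see which Python line was executing when the process aborted, Python's built-in faulthandler module can dump the Python stack of every thread on a fatal signal such as SIGABRT. A minimal sketch, where the helper name enable_crash_traces is hypothetical and not part of my script:

```python
import faulthandler
import sys


def enable_crash_traces(stream=sys.stderr):
    """Dump Python tracebacks for all threads if the process gets a fatal signal."""
    # faulthandler installs handlers for SIGSEGV, SIGFPE, SIGABRT, SIGBUS and SIGILL.
    # all_threads=True matters here because backward() runs in a worker thread.
    faulthandler.enable(file=stream, all_threads=True)
    return faulthandler.is_enabled()
```

Calling enable_crash_traces() once at the top of the training script should be enough. Starting the interpreter with the -X faulthandler option has the same effect without any code change.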

I searched for solutions and have tried reinstalling PyTorch and adding gc.collect() before calling backward(). Here is a snippet of my code for reference:

    import torch

    # forward() relies on the globals device, vocab_size and emb_size.
    class BLSTM(torch.nn.Module):
        def __init__(self, emb_size, hidden_size, num_layers, vocab_size, cutoffs):
            super(BLSTM, self).__init__()
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.blstm = torch.nn.LSTM(emb_size, hidden_size, num_layers, batch_first=True, bidirectional=True)
            self.adasoft = torch.nn.AdaptiveLogSoftmaxWithLoss(hidden_size*2, vocab_size, cutoffs)

        def forward(self, x, targets):
            # Set initial states
            h0 = torch.zeros(self.num_layers*2, x.size(0), self.hidden_size).to(device)
            c0 = torch.zeros(self.num_layers*2, x.size(0), self.hidden_size).to(device)
            # Forward propagate LSTM
            # Note: this creates a new, randomly initialized embedding on every call
            embed = torch.nn.Embedding(vocab_size, emb_size).to(device)
            out, _ = self.blstm(embed(x), (h0, c0))  # out: tensor of shape (batch_size, seq_length, hidden_size*2)
            # Decode the hidden state of the last time step
            out = self.adasoft(out[:, -1, :], targets)
            return out

    # Training step, run once per batch
    optimizer.zero_grad()
    outputs = model(input_seq, target_seq)
    outputs.loss.backward()
    optimizer.step()
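
One detail in the snippet above: the embedding layer is built inside forward(), so every call gets a freshly initialized layer whose weights the optimizer never sees. Below is a minimal sketch of the same model with the embedding registered in __init__. It is not a confirmed fix for the crash; the class name BLSTMFixed, the helper train_step, the sizes, and the cutoffs are all illustrative assumptions.

```python
import torch


class BLSTMFixed(torch.nn.Module):
    def __init__(self, emb_size, hidden_size, num_layers, vocab_size, cutoffs):
        super().__init__()
        # Registered once, so its weights are part of model.parameters().
        self.embed = torch.nn.Embedding(vocab_size, emb_size)
        self.blstm = torch.nn.LSTM(emb_size, hidden_size, num_layers,
                                   batch_first=True, bidirectional=True)
        self.adasoft = torch.nn.AdaptiveLogSoftmaxWithLoss(hidden_size * 2, vocab_size, cutoffs)

    def forward(self, x, targets):
        # Omitting (h0, c0) makes nn.LSTM start from zero states on x's device.
        out, _ = self.blstm(self.embed(x))
        # Use the output of the last time step, as in the original snippet.
        return self.adasoft(out[:, -1, :], targets)


def train_step(model, optimizer, input_seq, target_seq):
    """Run one optimization step and return the scalar loss."""
    optimizer.zero_grad()
    outputs = model(input_seq, target_seq)
    outputs.loss.backward()
    optimizer.step()
    return outputs.loss.item()


torch.manual_seed(0)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
# Toy sizes chosen only for illustration.
model = BLSTMFixed(emb_size=16, hidden_size=32, num_layers=2,
                   vocab_size=100, cutoffs=[10, 50]).to(device)
optimizer = torch.optim.Adam(model.parameters())
input_seq = torch.randint(0, 100, (4, 7), device=device)   # (batch_size, seq_length)
target_seq = torch.randint(0, 100, (4,), device=device)    # one target id per sequence
loss = train_step(model, optimizer, input_seq, target_seq)
print("loss:", loss)
```

Keeping every layer in __init__ also means model.to(device) moves the whole model at once, so forward() no longer depends on global variables.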

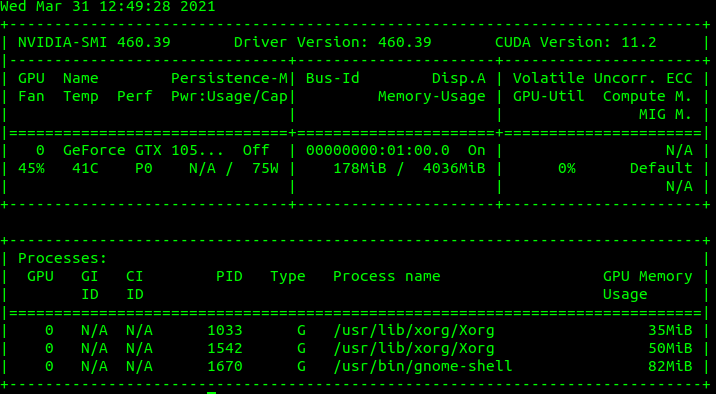

I am using CUDA 11.2 on an Ubuntu 20.04 machine.
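
Since the crash is inside cuDNN, the versions PyTorch itself reports may be as relevant as the system toolkit version, because the two can differ. A minimal sketch for collecting them, where the function name environment_report is a hypothetical helper:

```python
import platform

import torch


def environment_report():
    """Collect the versions PyTorch reports for itself and its GPU libraries."""
    return {
        "python": platform.python_version(),
        "torch": torch.__version__,
        # CUDA version the installed PyTorch build was compiled against (None for CPU builds).
        "torch_cuda": torch.version.cuda,
        # cuDNN version PyTorch loads (None if cuDNN is unavailable).
        "cudnn": torch.backends.cudnn.version(),
        "cuda_available": torch.cuda.is_available(),
        "cudnn_enabled": torch.backends.cudnn.enabled,
    }


for key, value in environment_report().items():
    print(f"{key}: {value}")
```

As a further diagnostic, setting torch.backends.cudnn.enabled = False before training makes the LSTM use PyTorch's native kernels, which could show whether the crash is specific to the cuDNN path seen in the trace.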