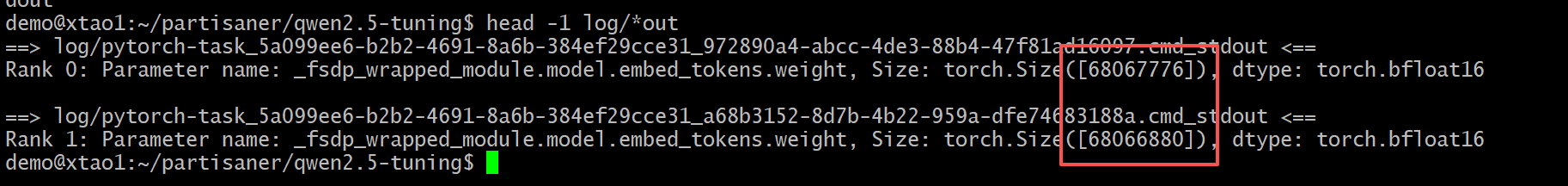

Hello, I'm using FSDP with SFTTrainer on 2 GPUs to fine-tune Qwen2.5-0.5B, and I got this …

My code is:

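```python
import functools
import os

import torch
import torch.distributed as dist
from torch.distributed.fsdp import FullyShardedDataParallel as FSDP, ShardingStrategy
from torch.distributed.fsdp.wrap import transformer_auto_wrap_policy
from transformers import AutoModelForCausalLM
from transformers.models.qwen2.modeling_qwen2 import Qwen2DecoderLayer

# Assumes launch via torchrun (which sets LOCAL_RANK); skipped if a launcher
# such as accelerate has already initialized the process group.
if not dist.is_initialized():
    dist.init_process_group("nccl")
torch.cuda.set_device(int(os.environ["LOCAL_RANK"]))

model_id = "Qwen/Qwen2.5-0.5B"  # assumed Hub id for the model mentioned above

model = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
)

# Put each Qwen2DecoderLayer in its own FSDP unit; remaining params go to the root unit.
auto_wrap_policy = functools.partial(
    transformer_auto_wrap_policy,
    transformer_layer_cls=(Qwen2DecoderLayer,),
)

model = FSDP(
    model,
    auto_wrap_policy=auto_wrap_policy,
    device_id=torch.cuda.current_device(),
    sharding_strategy=ShardingStrategy.FULL_SHARD,  # ZeRO-3
    use_orig_params=True,
)
```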

Why does FSDP give each shard a different size?
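One possible explanation, assuming the screenshot shows per-rank sizes of the *original* parameters (e.g. from `model.named_parameters()` with `use_orig_params=True`): under `FULL_SHARD`, FSDP concatenates all parameters of one wrapped unit into a single flat buffer, pads it to a multiple of the world size, and gives each rank one equal contiguous chunk. The chunk boundaries ignore parameter boundaries, so a parameter can live entirely on one rank, be split between ranks, or be absent on a rank. The pure-Python sketch below models only that splitting step. `shard_flat_param` and the parameter names/sizes are hypothetical toy values (not Qwen2.5's real shapes), and the model ignores any alignment padding FSDP may insert between parameters.

```python
import math
from typing import Dict, List


def shard_flat_param(param_numels: Dict[str, int], world_size: int) -> List[Dict[str, int]]:
    """Simplified model of FULL_SHARD flat-parameter sharding (hypothetical helper).

    The params of one FSDP unit are laid out back to back in a flat buffer,
    which is split into `world_size` equal chunks of ceil(total / world_size)
    elements (the last rank's chunk is padded). Returns, for each rank, how many
    elements of each original parameter fall inside that rank's chunk.
    """
    total = sum(param_numels.values())
    shard_numel = math.ceil(total / world_size)  # same slot count on every rank
    per_rank = []
    for rank in range(world_size):
        lo, hi = rank * shard_numel, (rank + 1) * shard_numel
        counts = {}
        offset = 0
        for name, numel in param_numels.items():
            start, end = offset, offset + numel
            # Overlap between this param's range and this rank's chunk (0 if disjoint).
            counts[name] = max(0, min(end, hi) - max(start, lo))
            offset = end
        per_rank.append(counts)
    return per_rank


if __name__ == "__main__":
    # Toy unit with 13 elements sharded over 2 ranks -> 7 slots per rank.
    params = {"q_proj.weight": 6, "k_proj.weight": 4, "norm.weight": 3}
    world_size = 2
    shard_numel = math.ceil(sum(params.values()) / world_size)
    for rank, counts in enumerate(shard_flat_param(params, world_size)):
        real = sum(counts.values())
        print(f"rank {rank}: {counts} (real={real}, padding={shard_numel - real})")
```

In this toy case both ranks hold 7 slots, but `k_proj.weight` is split 1/3 across the ranks, `norm.weight` exists only on rank 1, and rank 1 carries 1 padding element. If the real model behaves similarly, per-parameter sizes would differ between ranks even though each FSDP unit is split evenly; parameters outside any `Qwen2DecoderLayer` (such as the embedding) would also sit in the larger root unit.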