I'm seeing some strange GPU memory behavior when training with FSDP.

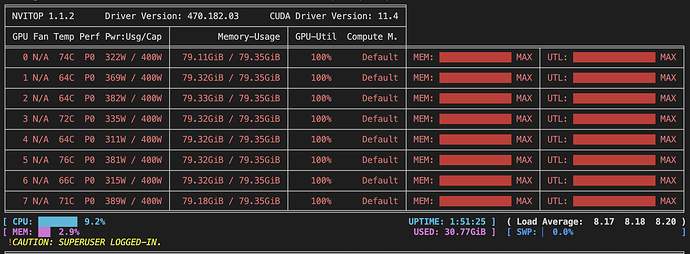

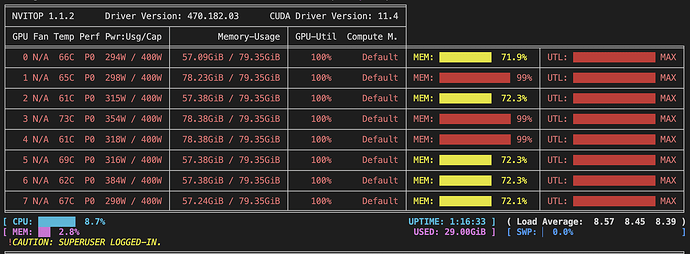

With a per-device batch size of 14, GPU memory usage stays constant. With a batch size of 16, however, it fluctuates.

With a batch size of 16, training usually runs fine, but it occasionally fails with an OOM error. (No dynamic shapes are used.)
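To make the two runs easier to compare, here is a minimal sketch of how the allocator counters could be logged once per training step. It is not what produced the plots above. The helper names `snapshot_cuda_memory` and `spread` are hypothetical, and the idea is only to separate memory held by live tensors (`allocated`) from memory held by PyTorch's caching allocator (`reserved`), plus the `num_alloc_retries` counter, which increases when an allocation fails and the allocator frees its cache before retrying.

```python
def snapshot_cuda_memory(device=None):
    """Return CUDA allocator counters in MiB, or None if CUDA is unavailable.

    Hypothetical helper: call it once per training step on each rank.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None

    mib = 1024 ** 2
    stats = torch.cuda.memory_stats(device)
    return {
        # Memory occupied by live tensors.
        "allocated_mib": torch.cuda.memory_allocated(device) / mib,
        # Memory held by the caching allocator (live tensors plus cached blocks).
        "reserved_mib": torch.cuda.memory_reserved(device) / mib,
        # High-water mark of allocated memory since the last reset.
        "peak_allocated_mib": torch.cuda.max_memory_allocated(device) / mib,
        # Number of failed allocations that triggered a cache flush and retry.
        "alloc_retries": stats.get("num_alloc_retries", 0),
    }


def spread(samples, key):
    """Difference between the largest and smallest value of `key` across samples."""
    values = [sample[key] for sample in samples]
    return max(values) - min(values)
```

If `alloc_retries` stays at zero with batch size 14 but grows with batch size 16, that would suggest the larger batch runs close enough to the device limit that the caching allocator repeatedly frees and re-reserves blocks, which could show up as fluctuation in device-level memory usage. This is an assumption to check against the logged numbers, not a confirmed diagnosis.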

Could someone give some insight into the reasons behind the fluctuation in memory usage?