I’m working on a neural network that can expand in size from one iteration to the next according to changes in the inputs. While developing the training loop, I encountered a problem during back-propagation. I coded a minimal example that reproduces the problem:

import torch
from torch import nn

l1 = nn.Linear(2, 1)
x = torch.tensor([1.0, 3.0])
# with torch.no_grad():
y_pred = l1(x)

x = torch.tensor([1.0, 3.0, -2.0])
y = torch.tensor([2.0])

l1.weight.data = torch.cat(
    [l1.weight.data, torch.randn((1, 1))],
    dim=1
)
l1.in_features += 1

y_pred = l1(x)
loss = (y - y_pred)**2
loss.backward()

This gives the following error:

Traceback (most recent call last):
  File "/Users/pedrocoelho/gnn-poc/bug_test.py", line 25, in <module>
    loss.backward()
  File "/usr/local/lib/python3.9/site-packages/torch/_tensor.py", line 255, in backward
    torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)
  File "/usr/local/lib/python3.9/site-packages/torch/autograd/__init__.py", line 147, in backward
    Variable._execution_engine.run_backward(
RuntimeError: Function TBackward returned an invalid gradient at index 0 - got [1, 3] but expected shape compatible with [1, 2]

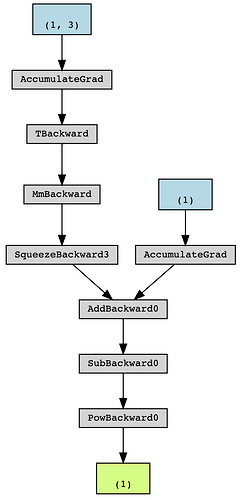

What could be causing this? I know it comes from a shape mismatch in the gradients, but I find that odd, since no gradients are computed until loss.backward() is called. It might also be related to intermediate results being stored in the context of the first forward pass. How do I access or clear those intermediate results after each forward+backward iteration? Also, what exactly is the TBackward function? I couldn’t find much information about it.
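To make the question about TBackward more concrete, here is a minimal sketch of how the nodes recorded by a forward pass can be listed by following grad_fn and next_functions. The helper name collect_nodes is hypothetical, and the node names that show up (TBackward or otherwise) depend on the PyTorch version, so the exact listing is not guaranteed.

```python
import torch
from torch import nn


def collect_nodes(fn, depth=0, out=None):
    """Recursively collect (depth, node name) pairs of the autograd graph.

    `collect_nodes` is a hypothetical helper for illustration only.
    """
    if out is None:
        out = []
    if fn is None:
        # Inputs that do not require grad have no node in the graph.
        return out
    out.append((depth, type(fn).__name__))
    for next_fn, _ in fn.next_functions:
        collect_nodes(next_fn, depth + 1, out)
    return out


l1 = nn.Linear(2, 1)
x = torch.tensor([1.0, 3.0])
y = torch.tensor([2.0])

loss = (y - l1(x)) ** 2

nodes = collect_nodes(loss.grad_fn)
for depth, name in nodes:
    # Indentation mirrors the depth of the node below the loss.
    print("  " * depth + name)
```

The leaf parameters (weight and bias) appear as AccumulateGrad nodes at the bottom of the listing; the nodes above them are the backward functions of the operations used in the forward pass.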

Calling the first y_pred = l1(x) inside the with torch.no_grad() block fixes the problem, but it isn’t an option because I need to do a backward pass at every iteration, with different network sizes.
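For reference, this is a sketch of that no_grad variant of the example above. It only sidesteps the error: the first forward pass records no graph, so there is nothing to back-propagate through for the 2-input version of the layer.

```python
import torch
from torch import nn

l1 = nn.Linear(2, 1)

# First forward pass with the original input size; no graph is recorded here.
x = torch.tensor([1.0, 3.0])
with torch.no_grad():
    y_pred = l1(x)

# The input grows by one feature, so the weight gets one extra column.
x = torch.tensor([1.0, 3.0, -2.0])
y = torch.tensor([2.0])

l1.weight.data = torch.cat(
    [l1.weight.data, torch.randn((1, 1))],
    dim=1
)
l1.in_features += 1

# Second forward pass and backward pass with the expanded layer.
y_pred = l1(x)
loss = (y - y_pred) ** 2
loss.backward()

print(l1.weight.grad.shape)
```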

The computation graph for the loss looks like this: