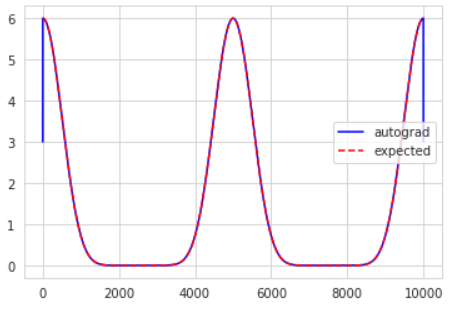

Hi, I tried using PyTorch’s autograd to calculate functional derivatives. For the periodic function $a = \cos^2(x)$, I considered the integral $A = \int a^6\,dx$, whose functional derivative should be $\delta A/\delta a = 6a^5$. The code I used is as follows:

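```python
import numpy as np
import torch
import matplotlib.pyplot as plt

# Sample a = cos^2(x) on a periodic grid over [0, 2*pi)
x = np.linspace(0, 2*np.pi, 10000, endpoint=False)
a = torch.tensor(np.cos(x)**2, dtype=torch.float32, requires_grad=True)

A = torch.trapz(a**6)             # trapezoidal integral of a^6 (dx defaults to 1)
b = torch.autograd.grad(A, a)[0]  # dA/da_i at each grid point

plt.plot(b.numpy(), 'b', label='autograd')
plt.plot(6*a.detach().numpy()**5, '--r', label='expected')
plt.legend()
plt.show()
```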

The autograd result agrees with the expected curve everywhere except at the boundaries: after testing a few examples, I noticed that the autograd value drops to about half of the expected value at the first and last grid points. Increasing the number of grid points doesn’t seem to make this go away (though I haven’t tested this extensively). Does anybody have an idea of how this problem arises and how it can be fixed? Thank you!
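One way to probe the boundary behavior is to compare autograd's output against the quadrature weights directly. The sketch below assumes `torch.trapz` applies the standard trapezoidal rule: weight 1/2 on the first and last samples, 1 elsewhere, all multiplied by the spacing `dx` (which defaults to 1). Under that rule, $\partial A/\partial a_i = 6 a_i^5 w_i \Delta x$, so the functional derivative is recovered by dividing by $w_i \Delta x$. The explicit `dx`, and the helper names `grad_of`, `w`, and `functional_derivative`, are illustrative additions, not part of the original code.

```python
import numpy as np
import torch

# Periodic grid as in the post, but with an explicit spacing dx so the
# quadrature approximates the integral over [0, 2*pi) (an assumption; the
# original call used trapz's default dx=1).
N = 10000
dx = 2 * np.pi / N
x = np.linspace(0, 2 * np.pi, N, endpoint=False)
a = torch.tensor(np.cos(x) ** 2, dtype=torch.float64, requires_grad=True)

def grad_of(integral):
    """Return dA/da_i for a scalar integral A built from the samples a."""
    return torch.autograd.grad(integral, a)[0]

# Continuum functional derivative of A = int a^6 dx
expected = 6 * a.detach() ** 5

# Trapezoidal rule: weight 1/2 on the first and last samples, 1 elsewhere,
# so autograd should return 6 a_i^5 * w_i * dx.
g_trapz = grad_of(torch.trapz(a ** 6, dx=dx))
w = torch.ones(N, dtype=torch.float64)
w[0] = w[-1] = 0.5
print(torch.allclose(g_trapz, expected * w * dx))

# On a periodic grid (endpoint=False), a plain sum times dx is the periodic
# trapezoidal rule: every sample gets the same weight dx.
g_sum = grad_of(torch.sum(a ** 6) * dx)
functional_derivative = g_sum / dx
print(torch.allclose(functional_derivative, expected))
```

The periodic sum is used in the last step because the grid excludes $2\pi$, so the first sample already stands in for the right endpoint. For a non-periodic grid, dividing `g_trapz` by `w * dx` would be the analogous correction.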