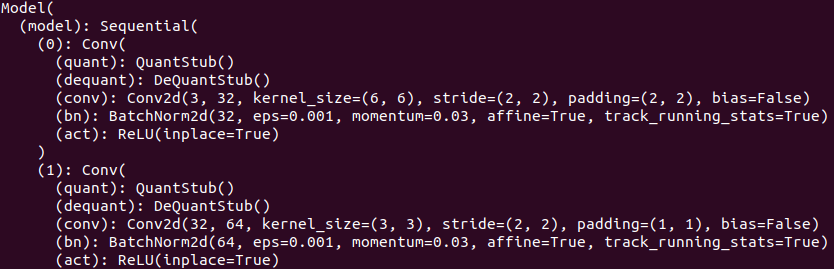

Hi, I am trying quantization-aware training (QAT) for YOLOv5, and this is the first part of the model.

Using:

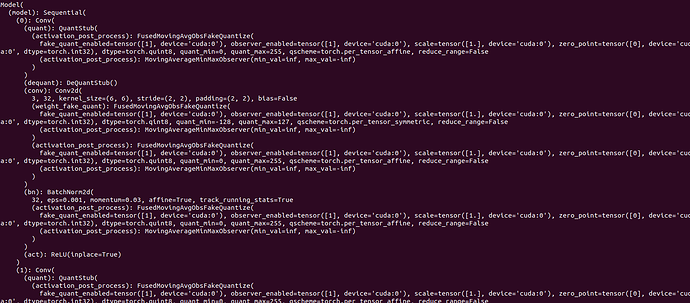

torch.quantization.fuse_modules(module, [["conv","bn","relu"]],inplace=True)

the model does not seem to be fused.

Whereas if I use:

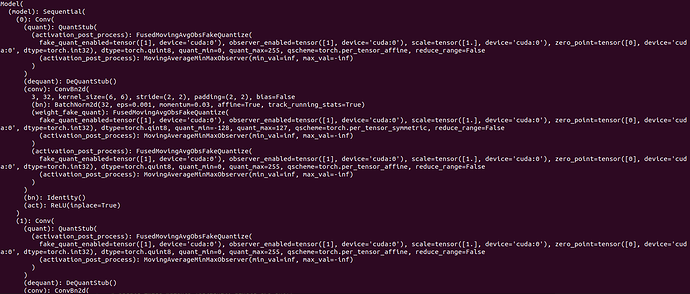

torch.quantization.fuse_modules(module, [["conv","bn"]],inplace=True)

the model is fused
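To make the comparison easier to reproduce, here is a minimal sketch that reports the type of each submodule after either call. It is not the YOLOv5 code: `ConvBlock` and `fused_types` are hypothetical names, the block is assumed to have submodules called `conv`, `bn` and `relu`, and the module is put in eval mode before fusing, which may differ from the setup in the screenshots.

```python
import torch
import torch.nn as nn


class ConvBlock(nn.Module):
    """Hypothetical stand-in for one conv block; not the actual YOLOv5 module."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.bn(self.conv(x)))


def fused_types(names):
    """Fuse the listed submodules and return the class name of each child."""
    # Assumption: eval mode, so conv + bn can be folded into a single conv.
    module = ConvBlock().eval()
    torch.quantization.fuse_modules(module, [names], inplace=True)
    return {name: type(child).__name__ for name, child in module.named_children()}


if __name__ == "__main__":
    print(fused_types(["conv", "bn", "relu"]))
    print(fused_types(["conv", "bn"]))
```

Comparing the two printed dictionaries shows which children were replaced by each call, which may be easier to read than the full model printout.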

Is this a bug?