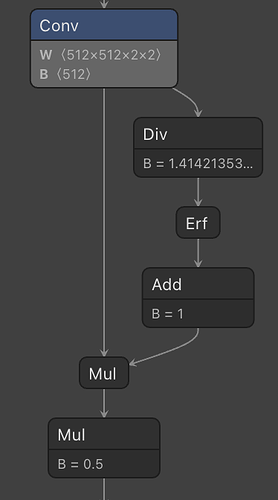

I wish to fuse these operators when exporting to ONNX. Is it possible to fuse them so that my model's inference speed improves?
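The screenshot is not reproduced here, so this part is an assumption. If the operators to fuse are a convolution followed by batch normalization (the traceback below points at a `bn0_1` layer), the BatchNorm can be folded into the convolution's weights and bias before calling `torch.onnx.export`. This removes the separate BatchNormalization node from the exported graph. Below is a minimal sketch for an eval-mode `nn.Conv2d` followed by an affine `nn.BatchNorm2d`. `fold_conv_bn` is a hypothetical helper name. PyTorch also ships `torch.nn.utils.fusion.fuse_conv_bn_eval` for the same purpose. Exporting in eval mode may already perform this folding, so it is worth inspecting the exported graph first.

```python
import torch
import torch.nn as nn


def fold_conv_bn(conv: nn.Conv2d, bn: nn.BatchNorm2d) -> nn.Conv2d:
    """Return one Conv2d that computes bn(conv(x)) using BN's running statistics.

    Assumes inference (eval) mode and an affine BatchNorm2d (weight and bias present).
    """
    fused = nn.Conv2d(
        conv.in_channels, conv.out_channels, conv.kernel_size,
        stride=conv.stride, padding=conv.padding, dilation=conv.dilation,
        groups=conv.groups, bias=True, padding_mode=conv.padding_mode,
    )
    with torch.no_grad():
        # Per-output-channel factor: gamma / sqrt(running_var + eps)
        scale = bn.weight / torch.sqrt(bn.running_var + bn.eps)
        # Scale every output filter by its channel's factor
        fused.weight.copy_(conv.weight * scale.reshape(-1, 1, 1, 1))
        # A conv without bias behaves like one with a zero bias
        conv_bias = conv.bias if conv.bias is not None else torch.zeros_like(bn.running_mean)
        # Folded bias: (b - running_mean) * scale + beta
        fused.bias.copy_((conv_bias - bn.running_mean) * scale + bn.bias)
    return fused.eval()


# Quick check on random data: the folded conv should match conv -> bn
torch.manual_seed(0)
conv = nn.Conv2d(3, 8, kernel_size=3, padding=1).eval()
bn = nn.BatchNorm2d(8).eval()
with torch.no_grad():
    # Non-trivial running statistics so the comparison is meaningful
    bn.running_mean.uniform_(-1.0, 1.0)
    bn.running_var.uniform_(0.5, 2.0)
    x = torch.randn(2, 3, 16, 16)
    diff = (bn(conv(x)) - fold_conv_bn(conv, bn)(x)).abs().max()
print(f"max abs difference: {diff.item():.2e}")
```

In a real model, the folded layer would replace the original conv, and the BatchNorm would be swapped for `nn.Identity()` before export.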

Have you looked into torch.compile? See the "torch.compile Tutorial" in the PyTorch Tutorials documentation (1.13.1+cu117).

I have tried torch.compile, but it raises the error below:

```
File /misc/home/usr16/cheesiang_leow/venv_31_11.5/lib/python3.8/site-packages/torch/_dynamo/utils.py:1068, in get_fake_value(node, tx)
   1064 elif isinstance(
   1065     cause, torch._subclasses.fake_tensor.DynamicOutputShapeException
   1066 ):
   1067     unimplemented(f"dynamic shape operator: {cause.func}")
-> 1068 raise TorchRuntimeError() from e

TorchRuntimeError:

from user code:
   File "/misc/DataStorage2/cheesiang_leow/artibrains/single_line_v2/modules/feature_extraction.py", line 253, in forward
    return self.ConvNet(input).permute(0, 3, 1, 2).mean(dim=(-1))
   File "/misc/DataStorage2/cheesiang_leow/artibrains/single_line_v2/modules/feature_extraction.py", line 210, in forward
    x = self.bn0_1(x)

Set torch._dynamo.config.verbose=True for more information

You can suppress this exception and fall back to eager by setting:
    torch._dynamo.config.suppress_errors = True
```

Do you have a minimal reproducible example?
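Such an example can often be very small. The sketch below is a hypothetical starting point. It mirrors only the `forward` shown in the traceback (`self.ConvNet(input).permute(0, 3, 1, 2).mean(dim=(-1))`). The layer contents, channel count and input shape are assumptions, because the real `ConvNet` and `bn0_1` definitions are not in the thread. It compiles with `backend="eager"`, which runs Dynamo's graph capture without generating optimized kernels. The error above appears to originate in that capture step (`torch/_dynamo/utils.py`).

```python
import torch
import torch.nn as nn


class TinyFeatureExtractor(nn.Module):
    """Hypothetical stand-in for the module in feature_extraction.py."""

    def __init__(self) -> None:
        super().__init__()
        # Placeholder layers: the real ConvNet / bn0_1 are not shown in the thread
        self.ConvNet = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(),
        )

    def forward(self, input: torch.Tensor) -> torch.Tensor:
        # Same expression as line 253 of feature_extraction.py in the traceback
        return self.ConvNet(input).permute(0, 3, 1, 2).mean(dim=(-1))


model = TinyFeatureExtractor().eval()
x = torch.randn(2, 1, 32, 100)  # hypothetical (batch, channels, height, width)

# backend="eager" exercises only Dynamo's tracing, then runs the captured graph eagerly
compiled = torch.compile(model, backend="eager")
with torch.no_grad():
    out = compiled(x)
print(out.shape)
```

If this skeleton compiles cleanly, you could add the real layers back one at a time, starting with `bn0_1`, to narrow down which one triggers the error.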