Description:

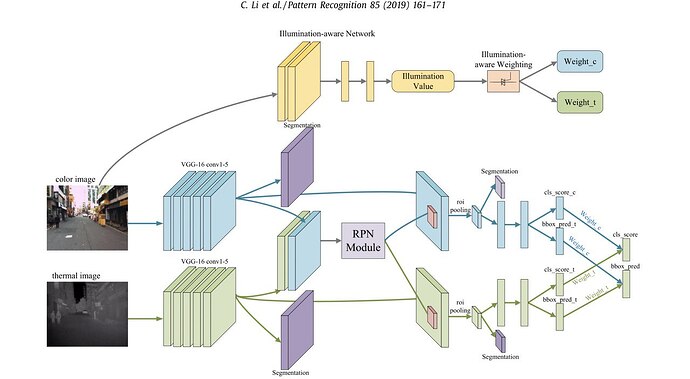

All the light blue layers belong to an RGB detector that I have already implemented in PyTorch, using the Mask R-CNN framework with a ResNet-50 CNN backbone.

All the light green layers belong to a thermal detector that, again, I have already implemented in PyTorch with the same Mask R-CNN framework.

The RGB and thermal images are aligned pixel-to-pixel, so each RGB-thermal pair can be treated as a single 4-channel image.
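Since each RGB-thermal pair is effectively a 4-channel image, a minimal sketch of splitting it into the two detector inputs (and stacking it back) could look like the following. The function names are hypothetical, and `thermal_to_3ch` is only an assumption for the case where the thermal backbone is an ImageNet-pretrained ResNet-50 that expects 3-channel input.

```python
from typing import Tuple

import torch


def split_rgbt(x: torch.Tensor) -> Tuple[torch.Tensor, torch.Tensor]:
    """Split aligned RGB-T images (N, 4, H, W) into RGB (N, 3, H, W) and thermal (N, 1, H, W)."""
    if x.dim() != 4 or x.size(1) != 4:
        raise ValueError(f"expected shape (N, 4, H, W), got {tuple(x.shape)}")
    return x[:, :3], x[:, 3:]


def merge_rgbt(rgb: torch.Tensor, thermal: torch.Tensor) -> torch.Tensor:
    """Inverse of split_rgbt: stack the two modalities along the channel dimension."""
    return torch.cat([rgb, thermal], dim=1)


def thermal_to_3ch(thermal: torch.Tensor) -> torch.Tensor:
    """Assumption: replicate the single thermal channel for a backbone that expects 3 channels."""
    return thermal.repeat(1, 3, 1, 1)
```

Because the split is a pure slice along the channel dimension, no resampling is needed; this relies entirely on the pixel-to-pixel alignment.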

The figure represents a slightly different scenario, since the authors used a Faster R-CNN framework with a VGG16 CNN backbone, but the concept is the same because I am only interested in the bounding-box (bbox) regression task, not in instance segmentation.

Is it possible to implement this kind of fusion scheme in PyTorch? The crucial point is to use a single RPN module that exploits the feature maps of both detectors, as shown in the figure, and to treat these three sub-networks as a single network.
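A minimal sketch of the shared-RPN idea, assuming both streams produce feature maps of the same spatial size (plausible here because the images are pixel-aligned and the backbones use the same strides). `TinyBackbone`, `SharedRPNHead` and `TwoStreamWithSharedRPN` are hypothetical names: the tiny backbones only stand in for the two ResNet-50 trunks so the example runs without pretrained weights, and the 1x1 convolution that reduces the concatenated channels is a design assumption, not necessarily what the authors of the figure did. Anchor generation, proposal decoding and NMS are omitted.

```python
import torch
from torch import nn
import torch.nn.functional as F


class TinyBackbone(nn.Module):
    """Hypothetical stand-in for a ResNet-50 trunk: two stride-2 convs (output stride 4)."""

    def __init__(self, in_ch: int, out_ch: int = 64):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(in_ch, out_ch, 3, stride=2, padding=1), nn.ReLU(inplace=True),
            nn.Conv2d(out_ch, out_ch, 3, stride=2, padding=1), nn.ReLU(inplace=True),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.body(x)


class SharedRPNHead(nn.Module):
    """One Faster R-CNN-style RPN head fed with both streams' feature maps."""

    def __init__(self, feat_ch: int, num_anchors: int):
        super().__init__()
        # Assumption: a 1x1 conv fuses the concatenated RGB + thermal channels.
        self.reduce = nn.Conv2d(2 * feat_ch, feat_ch, kernel_size=1)
        self.conv = nn.Conv2d(feat_ch, feat_ch, 3, padding=1)
        self.objectness = nn.Conv2d(feat_ch, num_anchors, 1)       # one logit per anchor
        self.bbox_deltas = nn.Conv2d(feat_ch, num_anchors * 4, 1)  # 4 box deltas per anchor

    def forward(self, feat_rgb: torch.Tensor, feat_t: torch.Tensor):
        fused = torch.cat([feat_rgb, feat_t], dim=1)  # (N, 2*C, H, W)
        h = F.relu(self.conv(F.relu(self.reduce(fused))))
        return self.objectness(h), self.bbox_deltas(h)


class TwoStreamWithSharedRPN(nn.Module):
    """RGB stream, thermal stream and shared RPN wrapped as a single module."""

    def __init__(self, feat_ch: int = 64, num_anchors: int = 9):
        super().__init__()
        self.rgb_backbone = TinyBackbone(3, feat_ch)
        self.thermal_backbone = TinyBackbone(1, feat_ch)
        self.rpn_head = SharedRPNHead(feat_ch, num_anchors)

    def forward(self, rgbt: torch.Tensor):
        rgb, thermal = rgbt[:, :3], rgbt[:, 3:]  # split the 4-channel input
        feat_rgb = self.rgb_backbone(rgb)
        feat_t = self.thermal_backbone(thermal)
        objectness, deltas = self.rpn_head(feat_rgb, feat_t)
        # Per-stream features are returned so that each stream's RoI head can
        # pool the same (shared) proposals from its own feature map.
        return feat_rgb, feat_t, objectness, deltas
```

Because all three sub-networks live in one `nn.Module`, a single optimizer over `model.parameters()` trains them jointly, and the RPN loss backpropagates through `torch.cat` into both backbones. In a torchvision-based model, torchvision's `RPNHead` could take the place of the hand-written head, applied to the fused feature map.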

Then, at the end, the two pseudo-independent detectors (which share the RPN module through the concatenated feature maps) each output a class score and a bbox prediction for every candidate region, one set from the color detector and one from the thermal detector. I then want a second, gated fusion of these two sets of outputs, weighted by the illumination weights that come from another CNN, as shown in the figure.
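The second, illumination-gated fusion could be sketched as below, assuming both per-stream heads score the same set of shared proposals (so region r refers to the same box in both streams) and that class scores are fused after the softmax. `IlluminationNet` and `gated_fusion` are hypothetical names; feeding the illumination CNN the RGB image and normalizing `weight_c` and `weight_t` with a softmax so they sum to one are assumptions, not details taken from the figure.

```python
import torch
from torch import nn


class IlluminationNet(nn.Module):
    """Hypothetical small CNN predicting per-image (weight_c, weight_t)."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, 3, stride=2, padding=1), nn.ReLU(inplace=True),
            nn.Conv2d(16, 32, 3, stride=2, padding=1), nn.ReLU(inplace=True),
            nn.AdaptiveAvgPool2d(1),
        )
        self.fc = nn.Linear(32, 2)

    def forward(self, rgb: torch.Tensor):
        # Assumption: illumination is estimated from the RGB image only.
        logits = self.fc(self.features(rgb).flatten(1))  # (N, 2)
        w = torch.softmax(logits, dim=1)  # assumption: weight_c + weight_t = 1
        return w[:, 0], w[:, 1]


def gated_fusion(scores_c, boxes_c, scores_t, boxes_t, weight_c, weight_t, image_idx):
    """Fuse per-region outputs of the color and thermal heads.

    scores_*: (R, num_classes) class probabilities; boxes_*: (R, num_classes * 4);
    weight_*: (N,) per-image weights; image_idx: (R,) image index of each region.
    """
    wc = weight_c[image_idx].unsqueeze(1)  # (R, 1), broadcast over classes / coords
    wt = weight_t[image_idx].unsqueeze(1)
    scores = wc * scores_c + wt * scores_t
    boxes = wc * boxes_c + wt * boxes_t
    return scores, boxes
```

With normalized weights the fused class probabilities remain a valid distribution, and when `weight_c` is 1 the output reduces exactly to the color detector's prediction.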

Inputs:

Pixel-to-pixel aligned RGB-thermal image pairs, fed into two different detectors: the RGB one and the thermal one.

Outputs:

A class score and bbox prediction pair for each candidate region. Each final pair is obtained by fusing the class scores and bbox predictions of the two detectors according to the weights weight_c and weight_t produced by a third CNN.
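Written per candidate region, the output described above can be expressed as a weighted combination. Here r indexes a candidate region of image i, s and b are class scores and box predictions (superscript c for the color detector, t for the thermal one), w_c and w_t correspond to weight_c and weight_t, and the sum-to-one constraint is an assumption (e.g. enforced with a softmax) rather than something stated above:

```latex
\begin{aligned}
s_r &= w_c^{(i)}\, s_r^{c} + w_t^{(i)}\, s_r^{t}, \\
b_r &= w_c^{(i)}\, b_r^{c} + w_t^{(i)}\, b_r^{t}, \qquad w_c^{(i)} + w_t^{(i)} = 1 .
\end{aligned}
```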