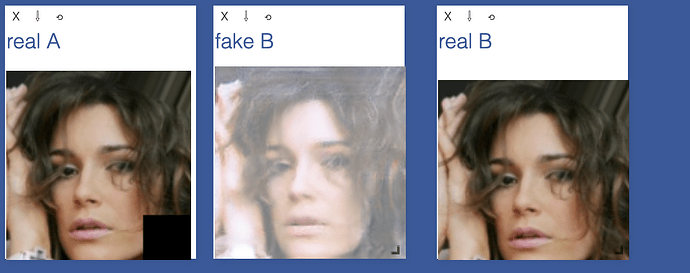

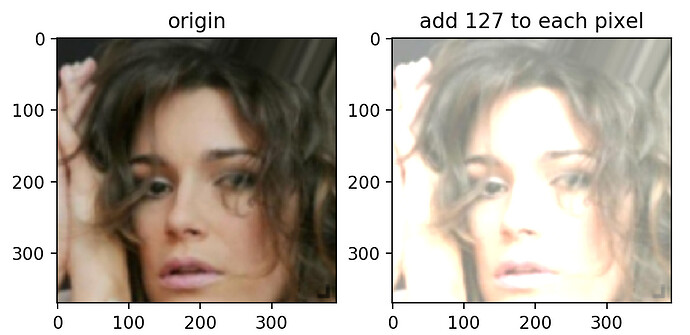

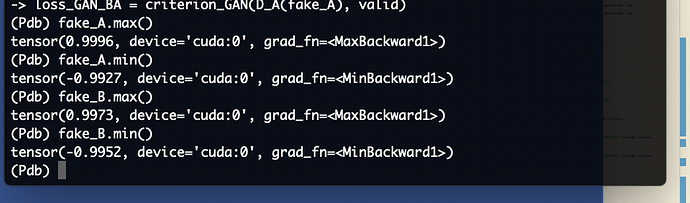

I’ve been trying to use CycleGAN for image inpainting. I pass in real A (which, in the second example, has a small black mask in the bottom-right corner), get fake B as the result, and compare it against the ground truth, real B. I also generate fake A by passing fake B through the reverse generator. It is also too light, but I didn’t show it here to avoid complicating this post. The point is that both images synthesized by the generators seem to be a shade too light. I’ve experimented with other datasets and other tasks with CycleGAN, and this pattern is consistent. Does anyone have any idea what could be happening?

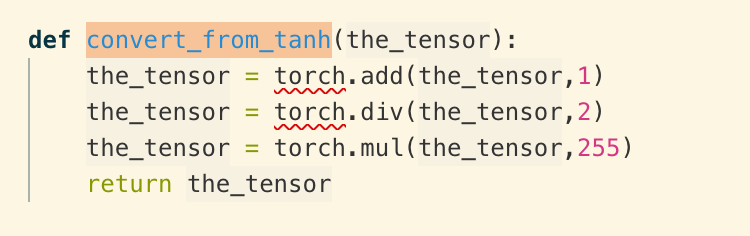

The last layer is tanh, so the output range is -1 to 1. To display it, I add 1, divide by 2, and multiply by 255 to get it into the 0 to 255 range. I also normalize the input images to the range -1 to 1 before computing my losses, so that the generator output and the images it is compared against are on the same scale.
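For reference, here is a minimal sketch of the scaling arithmetic described above, written on single pixel values in plain Python so it can be checked in isolation. The function names `to_model_range` and `to_pixel_range` are hypothetical, and the rounding and clamping step is my assumption rather than something taken from the actual pipeline. The same arithmetic would apply elementwise to image tensors.

```python
def to_model_range(pixel):
    """Map an 8-bit pixel value in [0, 255] to the tanh range [-1, 1]."""
    return pixel / 127.5 - 1.0


def to_pixel_range(value):
    """Map a tanh output in [-1, 1] back to an integer in [0, 255].

    Same steps as in the post: add 1, divide by 2, multiply by 255.
    Rounding and clamping are assumptions added for this sketch.
    """
    scaled = (value + 1.0) / 2.0 * 255.0
    return int(min(255, max(0, round(scaled))))


# Round-trip every possible 8-bit value through both mappings.
round_trip = [to_pixel_range(to_model_range(p)) for p in range(256)]
mismatches = [p for p in range(256) if round_trip[p] != p]
print("values changed by the round trip:", mismatches)
```

If the two mappings are true inverses, the round trip leaves every value unchanged, which would suggest the lightening comes from somewhere other than this scaling step. Running the equivalent check on a real image through the actual preprocessing and display code is one way to rule that step in or out.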