i start to learn GAN, and try to write a code. but after 10000 step, it doesn’t work well.

i wanna know what goes wrong, and i think the the transform of uint to float type may be have a influence of accurate.

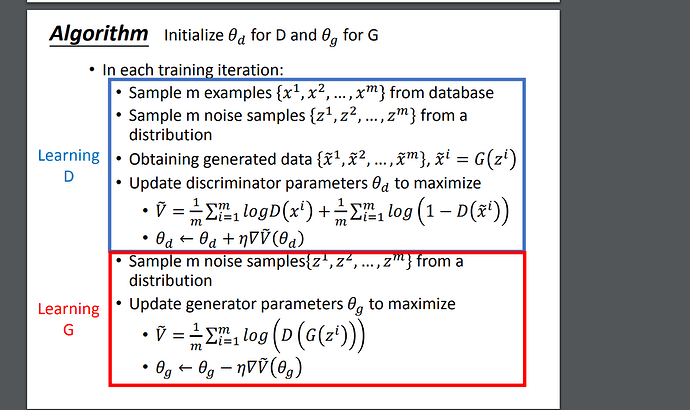

and loss function from this:

import torch

import numpy as np

import torch.nn as nn

import torchvision

import torchvision.datasets as dsets

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

batch_size = 1

LR_G = 0.00001

LR_D = 0.00001

train_dataset = dsets.MNIST(root='../../data_sets/mnist', # 选择数据的根目录

train=True, # 选择训练集

transform=transforms.ToTensor(), # 转换成tensor变量

download=False) # 不从网络上download图片

# plot one example

""" python

print("e")

print(train_dataset.train_data[0].numpy())

plt.imshow(train_dataset.train_data[0].numpy(), cmap='gray')

plt.show()

"""

def paint(output):

output = output.detach().numpy()

output = output + 1

output = output / 2

output = output * 255

output.shape = -1, 28

plt.imshow(output, cmap='gray')

plt.show()

class View(nn.Module):

def __init__(self, size):

super(View, self).__init__()

self.size = size

def forward(self, x):

x = x.view(self.size)

return x

G = nn.Sequential(

nn.Linear(100, 7 * 7 * batch_size), # 线性层

nn.ReLU(),

View((batch_size, 1, 7, 7)), # 类似于reshape

nn.Upsample(size=14), # 上采样层 使size 改变

nn.Conv2d(in_channels=1, out_channels=12, kernel_size=5, padding=2),

nn.ReLU(),

nn.Upsample(28),

nn.Conv2d(in_channels=12, out_channels=32, kernel_size=5, padding=2),

nn.ReLU(),

nn.Conv2d(in_channels=32, out_channels=1, kernel_size=5, padding=2),

nn.Tanh()

)

# input shape [1,1,28,28]

# outpout shape[1,1]

D = nn.Sequential(

nn.Conv2d(in_channels=1, out_channels=12, kernel_size=5, padding=2),

nn.ReLU(),

nn.Conv2d(in_channels=12, out_channels=32, kernel_size=5, padding=2),

nn.ReLU(),

nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),

nn.ReLU(),

nn.Conv2d(in_channels=64, out_channels=128, kernel_size=5, padding=2),

nn.ReLU(),

View((batch_size, 128 * 28 * 28)),

nn.Linear(128 * 28 * 28, 1),

nn.Sigmoid()

)

if torch.cuda.is_available():

D = D.cuda()

G = G.cuda()

opt_D = torch.optim.Adam(D.parameters(), lr=LR_D)

opt_G = torch.optim.Adam(G.parameters(), lr=LR_G)

def get(x):

x = x.unsqueeze(0)

x = x.unsqueeze(0)

x = x.float()

x = x / 255

x = x - 0.5

x = x * 2

x = x.float()

return x

for i in range(10000):

real = train_dataset.train_data[i%100]

real = get(real)

x = torch.randn(1, 100)

if torch.cuda.is_available():

real = real.cuda()

x = x.cuda()

output = G(x)

p0 = D(output)

p1 = D(real)

D_loss = - torch.mean(torch.log(p1) + torch.log(1 - p0))

opt_D.zero_grad()

D_loss.backward(retain_graph=True) # reusing computational graph

opt_D.step()

G_loss = torch.mean(torch.log(p0))

opt_G.zero_grad()

G_loss.backward()

opt_G.step()

print(i, ':', D_loss, " ", G_loss)

x = torch.randn(1, 100)

x = x.cuda()

output = G(x)

print(output)

#paint(output)