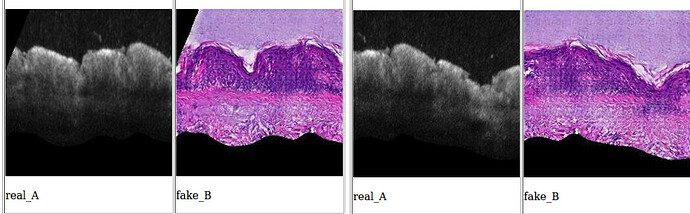

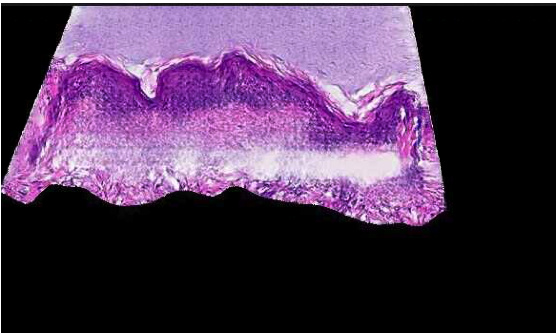

I’m running a pix2pix model trained on 256x256 images, which are crops of a larger 1024x512 image (no rescaling involved). I then test the model on the full 1024x512 image, which I expected to give identical results since the entire model is fully convolutional. However, the resulting images are quite different.
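One thing worth checking about the "identical results" expectation: a fully convolutional network with zero padding only matches between a crop and the full image for outputs whose receptive field stays inside the crop. Here is a minimal 1D sketch of that effect in plain Python. The signal, kernel, depth, and crop position are all made-up toy values, not anything taken from pix2pix.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding, so output length == input length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def stack(signal, kernel, depth):
    """Apply the same zero-padded convolution `depth` times in a row."""
    out = list(signal)
    for _ in range(depth):
        out = conv1d_same(out, kernel)
    return out


# Toy "full image" and a crop taken from its interior (hypothetical values).
full = [float((i * 7) % 11) for i in range(64)]
kernel = [0.25, 0.5, 0.25]
depth = 4            # four 3-tap layers: each output sees 4 inputs on either side
start, size = 16, 32

full_out = stack(full, kernel, depth)
crop_out = stack(full[start:start + size], kernel, depth)

# Crop positions whose output disagrees with the same position in the full run.
mismatch = [
    i for i in range(size)
    if abs(crop_out[i] - full_out[start + i]) > 1e-9
]
print(mismatch)
```

Only the positions within `depth` samples of the crop border should disagree, because those are the ones where the crop run saw zero padding and the full run saw real neighbouring values. If the receptive field is larger than the crop itself, no position is free of this effect.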

Pix2pix testing on 256x256 patches:

Pix2pix testing on full 1024x512 image:

The most noticeable difference is the large white patch in the 1024x512 image. Any ideas what is causing such an artifact?

My initial thought is that it could be an unusual edge effect due to the black areas in the larger image. The receptive field of the generator in the pix2pix model is enormous - 744x744 pixels, which is mostly wasted in the case of the 256x256 model.
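For reference, here is a rough sketch of how a receptive field can be computed for a plain stack of convolutions, using the standard recurrence (the field grows by `(kernel - 1) * jump` per layer, and `jump` is multiplied by the stride). The layer list is an assumption: eight 4x4 stride-2 convolutions, as in a U-Net-256-style encoder, which may not be the generator actually used here. The total also depends on which layers are counted, so it need not reproduce the 744 figure above.

```python
def receptive_field(layers):
    """Receptive field (along one axis) of a chain of convolutions.

    `layers` is a sequence of (kernel_size, stride) pairs, input to output.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each layer widens the field by (k - 1) input steps
        jump *= stride             # spacing between adjacent outputs, in input pixels
    return rf


# Assumed encoder: eight 4x4 convolutions with stride 2 (hypothetical layout).
encoder = [(4, 2)] * 8
print(receptive_field(encoder))
```

Either way, the point stands that the receptive field is far larger than a 256x256 crop, so at training time every output pixel sees padding, while at 1024x512 many pixels see real context (including the black areas) instead.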

The model is running batchnorm in eval mode at test time, using the running mean and std, so I don’t think this is causing the problem.

Does anyone have ideas about what causes this or how to address it? Thanks!