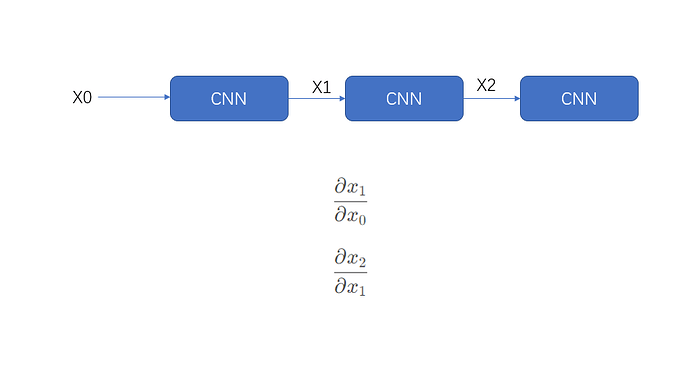

I unrolled a neural network as shown in the picture. I then want the gradient of each CNN output with respect to its input, for example ∇x D(x) for the denoiser D.

Below is part of my code:

# Compute gradient

x.requires_grad_(True)

D_x = self.denoise_operator(x)

g = x - D_x - z + u

gradient = ATWAx_b + self.rho_param * g

# Backward pass to compute ∇x D(x)

D_x.backward(torch.ones_like(D_x), retain_graph=True)

D_x_grad = x.grad.clone()

x.grad.zero_() # Clear the gradient to avoid accumulation

This works in the first iteration of the unrolled network, but in the second iteration x.grad is None, which raises a NoneType error. So, as mentioned above, the second partial derivative cannot be computed.
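My guess at the cause: after the first iteration, x is the output of earlier operations, so it is no longer a leaf tensor, and backward() only fills .grad on leaf tensors by default. Below is a minimal sketch of one possible workaround using torch.autograd.grad, which returns the gradient directly instead of reading x.grad. The small Conv2d, the step size rho, and the simplified update rule are hypothetical stand-ins for self.denoise_operator and the real update, not my actual model.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for self.denoise_operator: a tiny CNN.
denoise_operator = nn.Conv2d(1, 1, kernel_size=3, padding=1)

x = torch.randn(1, 1, 8, 8)  # initial iterate (a leaf tensor)
rho = 0.1                    # hypothetical step size
input_grads = []             # one gradient per unrolled iteration

for k in range(3):           # three unrolled iterations
    if not x.requires_grad:
        x.requires_grad_(True)

    D_x = denoise_operator(x)

    # Vector-Jacobian product ones^T (dD/dx). For a leaf x this is the same
    # quantity that D_x.backward(torch.ones_like(D_x)) would store in x.grad,
    # but it is returned directly, so it also works when x is not a leaf.
    (D_x_grad,) = torch.autograd.grad(
        D_x, x, grad_outputs=torch.ones_like(D_x), retain_graph=True
    )
    input_grads.append(D_x_grad)

    # Simplified update; from here on x is a non-leaf tensor.
    x = x - rho * (x - D_x)
```

Two notes on this sketch. If D_x_grad itself has to be differentiated later (for example when it enters the training loss), create_graph=True would need to be passed as well. Calling x.retain_grad() before backward() should also make x.grad available on a non-leaf x, but backward() additionally accumulates gradients into the CNN parameters, which torch.autograd.grad does not.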

Can anyone help? Thanks.