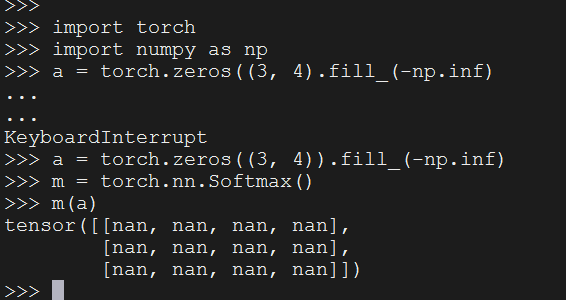

As the title suggests, I created a tensor with a = torch.zeros((3, 4)).fill_(-np.inf). Theoretically, every element of a is an extremely negative value, and nn.Softmax(a) should produce near-zero output. However, the output is NaN. Is there something I missed or misunderstood? Any help is appreciated!

Currently, I’m training a Transformer, and the loss becomes NaN once the constant used in the mask is set to -np.inf, but training works well when the constant is -1e20.

I think using torch.nn.LogSoftmax might work for you.

LogSoftmax is numerically more stable, but it still returns nan on your test code.

Hello,

From my understanding of Softmax, it’s inconsistent to have all your logits equal to -inf; at least one logit should have a value greater than -inf. For example:

    >>> a = torch.zeros(4)
    >>> a[0:3] = float('-inf')
    >>> a
    tensor([-inf, -inf, -inf, 0.])
    >>> torch.nn.functional.softmax(a)
    __main__:1: UserWarning: Implicit dimension choice for softmax has been deprecated. Change the call to include dim=X as an argument.
    tensor([0., 0., 0., 1.])
    >>> a[0:4] = float('-inf')
    >>> torch.nn.functional.softmax(a)
    tensor([nan, nan, nan, nan])

Also, about your statement:

> Theoretically, every element of a is a super small negative value, and nn.Softmax(a) should produce near zero output.

It is true that the softmax output probability for a logit that tends to -inf should tend to 0. But your output probability distribution cannot be close to 0 everywhere, because the probabilities must sum to 1. When every logit is -inf, every exponential is 0, so the normalization becomes 0/0, which is NaN.
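To make the arithmetic concrete, here is a minimal pure-Python sketch of softmax. It assumes the common max-subtraction formulation used for numerical stability; `stable_softmax` is a hypothetical helper written for illustration, not PyTorch's actual kernel.

```python
import math

def stable_softmax(xs):
    # Subtract the maximum before exponentiating (the usual stability trick).
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

neg_inf = float("-inf")

# One finite logit: -inf - 0.0 is -inf and exp(-inf) is 0.0,
# so the finite logit receives all of the probability mass.
one_finite = stable_softmax([neg_inf, neg_inf, neg_inf, 0.0])
print(one_finite)  # [0.0, 0.0, 0.0, 1.0]

# All logits -inf: the maximum is -inf, and -inf - (-inf) is NaN
# under IEEE 754, so NaN propagates through exp, sum, and division.
all_masked = stable_softmax([neg_inf] * 4)
print(all_masked)  # [nan, nan, nan, nan]
```

Without the max subtraction the result is the same in spirit: every exponential is 0, and the normalization is 0/0.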

Thanks for your reply. Sadly it doesn’t work either. This issue doesn’t stop me from writing a Transformer, because I set the constant in the mask to -1e20 and it works pretty well. I just want to know why -np.inf fails. According to some reference code, it seems that -np.inf once worked. I guess some version change led to this pitfall. Weird.
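One possible explanation for the difference between the two constants, sketched under the assumption that at least one row of the attention mask is fully masked (for example, a row made up entirely of padding). The scores, the mask, and its True-means-masked convention below are all hypothetical; whether this is what happens in the Transformer above is not confirmed by the thread.

```python
import torch

# Hypothetical attention scores: 2 queries, 4 keys.
scores = torch.randn(2, 4)

# Hypothetical mask: True marks a masked position.
# The second row is fully masked.
mask = torch.tensor([[False, True, True, False],
                     [True, True, True, True]])

# Masking with -inf: the fully masked row becomes all -inf, so its softmax is NaN.
with_inf = torch.softmax(scores.masked_fill(mask, float("-inf")), dim=-1)

# Masking with -1e20: the fully masked row has four equal finite values,
# so its softmax is a finite uniform distribution instead of NaN.
with_big = torch.softmax(scores.masked_fill(mask, -1e20), dim=-1)

print(with_inf)
print(with_big)
```

In this sketch the partially masked row is identical in both cases. Only the fully masked row differs: NaN with -inf, and a uniform distribution (0.25 each) with -1e20, which is finite but carries no information.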