I want to see which functions are called when I use `Tensor.to(device)`, and how a tensor is moved from CPU to GPU or from GPU to CPU. I used the following code to get an idea of the function calls:

```python
import torch

# Enable the profiler: every operator executed inside the block is recorded
with torch.autograd.profiler.profile() as prof:
    # Move a tensor from CPU to GPU, then back from GPU to CPU
    tensor = torch.randn(3, 3).to(device="cuda")
    tensor = tensor.to(device="cpu")

# Print the profiler results
print(prof)
```
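For comparison, the same experiment can be sketched with the newer `torch.profiler` API, assuming PyTorch 1.8.1 or later. It falls back to a CPU-only dtype conversion when no GPU is present, because that also forces `Tensor.to()` to make a copy. `key_averages()` groups the recorded events by operator name, which makes the `aten::*` calls easier to read than the raw event list:

```python
import torch
from torch.profiler import profile, ProfilerActivity

# Record CUDA activity only when a GPU is actually available.
use_cuda = torch.cuda.is_available()
activities = [ProfilerActivity.CPU]
if use_cuda:
    activities.append(ProfilerActivity.CUDA)

with profile(activities=activities, record_shapes=True) as prof:
    x = torch.randn(3, 3)
    if use_cuda:
        y = x.to(device="cuda")  # host-to-device copy
        z = y.to(device="cpu")   # device-to-host copy
    else:
        # No GPU: a dtype change also makes Tensor.to() copy.
        z = x.to(dtype=torch.float64)

# One row per operator name, sorted by total CPU time.
print(prof.key_averages().table(sort_by="cpu_time_total", row_limit=15))

# Set of operator names that were recorded (e.g. "aten::to").
op_names = {evt.key for evt in prof.key_averages()}
print(sorted(op_names))
```

Passing `with_stack=True` to `profile(...)` additionally records the Python call sites of each operator.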

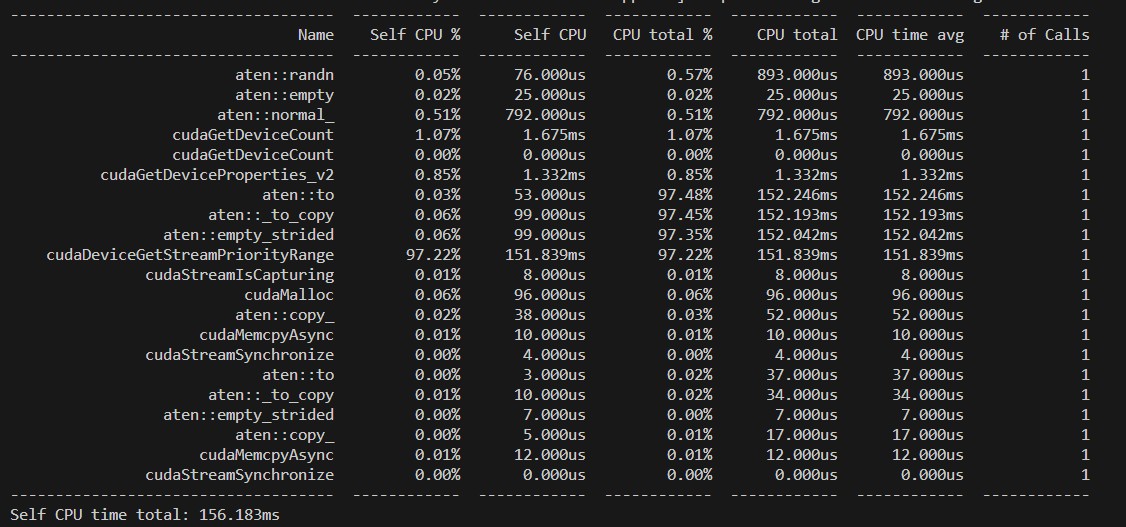

The output is

So, for `Tensor.to(device)`, the functions `aten::to`, `aten::_to_copy`, `cudaDeviceGetStreamPriorityRange`, `cudaMalloc`, and `aten::copy_` are called.
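A small sketch of the behavior this call chain suggests, assuming a recent PyTorch where `aten::_to_copy` exists: `Tensor.to()` returns the tensor itself when the device and dtype already match (this is documented for `Tensor.to`), and only makes a copy when something changes or `copy=True` is passed. The aten operators seen in the profiler can also be called directly through `torch.ops`; `_schema` used below is a private attribute, so treat it as a debugging aid rather than a stable API:

```python
import torch

x = torch.randn(3, 3)

# Same device and dtype: Tensor.to() returns x itself, no copy is made.
same = x.to(device="cpu")
print(same is x)  # True

# copy=True forces a new tensor even when nothing changes.
forced = x.to(device="cpu", copy=True)
print(forced is x)  # False

# The aten op that appears in the profiler output, called directly.
converted = torch.ops.aten._to_copy(x, dtype=torch.float64)
print(converted.dtype)  # torch.float64

# Operator schema; the op itself is declared in
# aten/src/ATen/native/native_functions.yaml in the PyTorch repository.
print(torch.ops.aten._to_copy.default._schema)
```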

I want to trace these functions in the PyTorch code base, but I am new to PyTorch and have trouble locating and navigating the code. Are there other tools that give more detailed information about where these functions are defined, such as the classes or source files that contain them? Any help would be appreciated. Thanks in advance.