Split the images and labels into training and validation sets

train_images = final_gray_images[:92677]

train_labels = labels[1:92678]

val_images = final_gray_images[92678:115845]

val_labels = labels[92679:115846]
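The four slices above use different start and end offsets for images and labels, which makes it easy to pair an image with the wrong label. A minimal sketch of a split driven by a single index, assuming `labels[i]` belongs to `images[i]` (if the label array carries a header row or other offset, it would need to be stripped first). The helper name `split_aligned` and the toy data are hypothetical:

```python
def split_aligned(images, labels, n_train):
    """Split two index-aligned sequences at the same position."""
    if len(images) != len(labels):
        raise ValueError("images and labels must have the same length")
    train = (images[:n_train], labels[:n_train])
    val = (images[n_train:], labels[n_train:])
    return train, val


# Toy stand-ins for the real arrays; NumPy arrays slice the same way.
toy_images = ["img0", "img1", "img2", "img3", "img4"]
toy_labels = ["cat", "dog", "cat", "bird", "dog"]

(train_x, train_y), (val_x, val_y) = split_aligned(toy_images, toy_labels, 3)
print(len(train_x), len(train_y), len(val_x), len(val_y))
```

Because both sequences are cut at the same index, no sample is skipped between the two sets and every image keeps its own label.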

Print the shapes of the training and validation sets

print("shape of training images:", train_images.shape)

print("shape of training labels:", train_labels.shape)

print("shape of validation images:", val_images.shape)

print("shape of validation labels:", val_labels.shape)

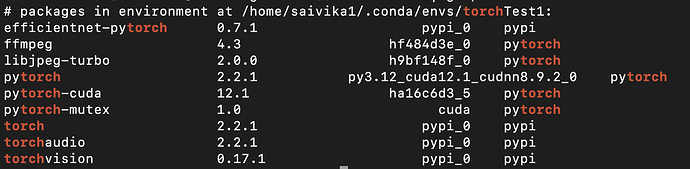

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import DataLoader, TensorDataset

from sklearn.preprocessing import LabelEncoder

from tqdm import tqdm

import time

Checking if CUDA (GPU) is available

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using device: {device}")

Create a label encoder

label_encoder = LabelEncoder()

Fit the label encoder on the entire labels array

label_encoder.fit(labels)

Transform the labels to numerical labels

train_labels_encoded = label_encoder.transform(train_labels)

val_labels_encoded = label_encoder.transform(val_labels)

Convert NumPy arrays to PyTorch tensors and move to the GPU

train_tensor = torch.tensor(train_images).float().to(device)

train_labels_tensor = torch.from_numpy(train_labels_encoded).long().to(device)

val_tensor = torch.tensor(val_images).float().to(device)

val_labels_tensor = torch.from_numpy(val_labels_encoded).long().to(device)

torch.cuda.empty_cache()

Create TensorDatasets

train_dataset = TensorDataset(train_tensor, train_labels_tensor)

val_dataset = TensorDataset(val_tensor, val_labels_tensor)

Create DataLoaders

train_loader = DataLoader(train_dataset, batch_size=1000, shuffle=False)

val_loader = DataLoader(val_dataset, batch_size=1000, shuffle=False)
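Moving the full image arrays to the GPU before building the datasets requires enough device memory for the whole dataset at once. One possible alternative, sketched here under assumptions, is to keep the tensors on the CPU and move only the current batch to the device. The tiny random tensors and their shapes are made up for illustration, and `shuffle=True` is a common choice for training data rather than something taken from the code above:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

device = "cuda" if torch.cuda.is_available() else "cpu"

# Small random stand-ins for the real arrays (hypothetical shapes).
images = torch.rand(10, 8, 8)           # (N, H, W) grayscale images
targets = torch.randint(0, 3, (10,))    # integer class ids

# The dataset stays on the CPU; only individual batches go to the device.
dataset = TensorDataset(images, targets)
loader = DataLoader(dataset, batch_size=4, shuffle=True)

batch_shapes = []
for inputs, labels in loader:
    inputs = inputs.unsqueeze(1).to(device)   # (B, H, W) -> (B, 1, H, W)
    labels = labels.to(device)
    batch_shapes.append(tuple(inputs.shape))

print(batch_shapes)
```

With this layout, peak GPU memory depends on the batch size and the model rather than on the dataset size.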

class CNNModel(nn.Module):
    def __init__(self):
        super(CNNModel, self).__init__()
        self.conv1 = nn.Conv2d(1, 64, kernel_size=3, padding=1)
        self.relu1 = nn.ReLU(inplace=True)
        self.maxpool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
        self.relu2 = nn.ReLU(inplace=True)
        self.maxpool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv3 = nn.Conv2d(128, 256, kernel_size=3, padding=1)
        self.relu3 = nn.ReLU(inplace=True)
        self.conv4 = nn.Conv2d(256, 256, kernel_size=3, padding=1)
        self.relu4 = nn.ReLU(inplace=True)
        self.maxpool3 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv5 = nn.Conv2d(256, 512, kernel_size=3, padding=1)
        self.relu5 = nn.ReLU(inplace=True)
        self.conv6 = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.relu6 = nn.ReLU(inplace=True)
        self.maxpool4 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.avgpool = nn.AdaptiveAvgPool2d((7, 7))
        self.fc = nn.Linear(512 * 7 * 7, 1000)

    def forward(self, x):
        x = self.conv1(x)
        x = self.relu1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.relu2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.relu3(x)
        x = self.conv4(x)
        x = self.relu4(x)
        x = self.maxpool3(x)
        x = self.conv5(x)
        x = self.relu5(x)
        x = self.conv6(x)
        x = self.relu6(x)
        x = self.maxpool4(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)
        return x
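To see where `512 * 7 * 7` in the final linear layer comes from, the spatial size can be traced through the network by hand. The sketch below assumes a 224x224 input, which the post does not state; the helper `pooled_size` is hypothetical. Each 3x3 convolution with `padding=1` keeps the height and width, so only the four max-pool layers change them:

```python
def pooled_size(size, num_pools, kernel=2, stride=2):
    """Apply the MaxPool2d output-size formula (no padding) num_pools times."""
    for _ in range(num_pools):
        size = (size - kernel) // stride + 1
    return size


input_hw = 224                                # assumed input height/width
after_pools = pooled_size(input_hw, 4)        # 224 -> 112 -> 56 -> 28 -> 14

# AdaptiveAvgPool2d((7, 7)) always outputs 7x7, whatever the input size,
# so the flattened feature vector has 512 channels * 7 * 7 values.
fc_in_features = 512 * 7 * 7

print(after_pools, fc_in_features)
```

Because of the adaptive pooling layer, the linear layer's input size stays at 25088 even if the images are not 224x224.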

Move the model to the GPU if available

model = CNNModel().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

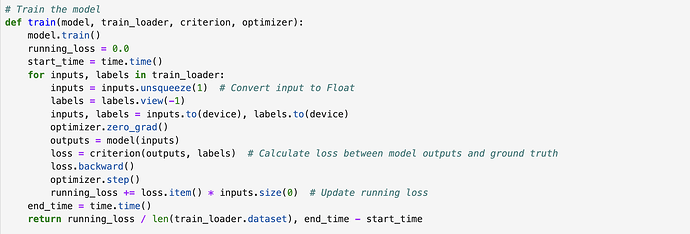

Train the model

def train(model, train_loader, criterion, optimizer):
    model.train()
    running_loss = 0.0
    start_time = time.time()
    for inputs, labels in train_loader:
        inputs = inputs.unsqueeze(1)  # Add a channel dimension: (B, H, W) -> (B, 1, H, W)
        labels = labels.view(-1)
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)  # Calculate loss between model outputs and ground truth
        loss.backward()
        optimizer.step()
        running_loss += loss.item() * inputs.size(0)  # Accumulate the summed loss for this batch
    end_time = time.time()
    return running_loss / len(train_loader.dataset), end_time - start_time
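`nn.CrossEntropyLoss` returns the mean loss over a batch by default, which is why the function above multiplies `loss.item()` by the batch size before dividing by the dataset length. A small worked example with made-up numbers shows the difference this makes when the last batch is smaller than the others:

```python
# Made-up per-batch mean losses and batch sizes (last batch is smaller).
batch_losses = [0.9, 0.7, 0.5]
batch_sizes = [1000, 1000, 500]

# Size-weighted average, as in the train() function above.
running_loss = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
epoch_loss = running_loss / sum(batch_sizes)

# Plain average of the batch means, which over-weights the small batch.
naive_loss = sum(batch_losses) / len(batch_losses)

print(round(epoch_loss, 4), round(naive_loss, 4))
```

The weighted version is the true per-sample average over the epoch, so it stays comparable across different batch sizes.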

Validation function

def validate(model, val_loader, criterion):
    model.eval()
    start_time = time.time()
    with torch.no_grad():
        running_loss = 0.0
        correct_preds = 0
        total_preds = 0
        for inputs, labels in tqdm(val_loader):
            inputs = inputs.unsqueeze(1)  # Add the channel dimension, as in train()
            inputs = inputs.to(device)
            labels = labels.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            running_loss += loss.item() * inputs.size(0)
            # Calculate accuracy
            _, preds = torch.max(outputs, 1)
            correct_preds += torch.sum(preds == labels).item()
            total_preds += inputs.size(0)
    end_time = time.time()
    val_loss = running_loss / len(val_loader.dataset)
    val_accuracy = correct_preds / total_preds
    return val_loss, val_accuracy, end_time - start_time

Training loop

num_epochs = 10

for epoch in range(num_epochs):
    train_loss, train_time = train(model, train_loader, criterion, optimizer)
    val_loss, val_accuracy, val_time = validate(model, val_loader, criterion)
    print(f'Epoch {epoch+1}/{num_epochs}, Train Loss: {train_loss:.4f}, Train Time: {train_time:.2f}s, '
          f'Val Loss: {val_loss:.4f}, Val Accuracy: {val_accuracy:.4f}, Val Time: {val_time:.2f}s')

This is the code I'm running. I'm using the ImageNet-LT dataset, which has 1000 classes. I'm running into an issue while training the model. Can you please help me with this?