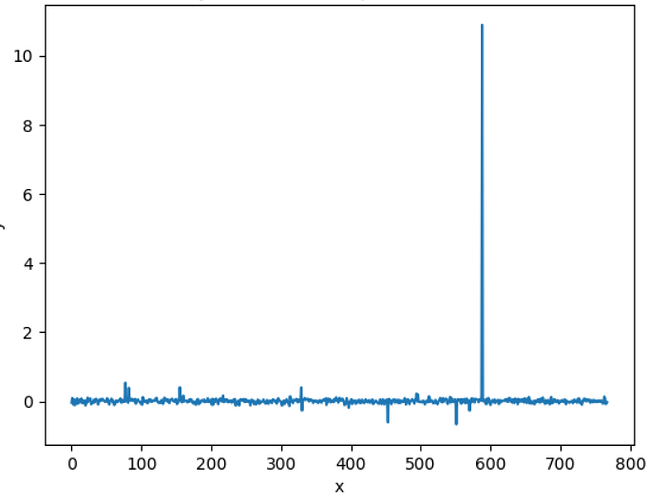

I was just playing around with some HuggingFace NLP models (RoBERTa/DeBERTa) and took the embeddings from their final output. When I plotted them on a graph, I found something abnormal. Almost all of the values were very small, as expected, but in the case of RoBERTa the value at index 588 came out at around 10. I tried different sentences, words, and paragraphs, but every time only the value at index 588 was abnormal. The graph looked like this:

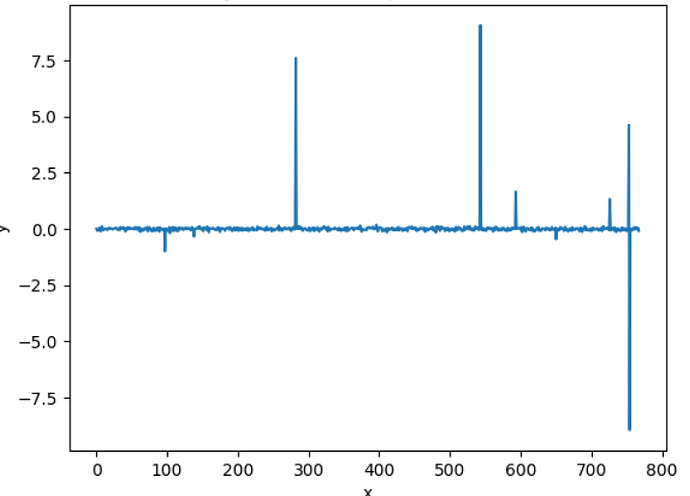

I encountered the same thing with DeBERTa, but with multiple values (I could not post the graph due to restrictions).
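In case it helps anyone reproduce this, here is a minimal sketch of how such outlier dimensions could be located without eyeballing a plot. It is only an illustration: the helper name `find_outlier_dims`, the `ratio` threshold, and the synthetic `fake_embedding` are my own assumptions, not anything from the library. The fake vector just mimics what I saw (small values everywhere, one large value at index 588).

```python
import random
from statistics import median


def find_outlier_dims(vector, ratio=10.0):
    """Return indices whose magnitude exceeds `ratio` times the median magnitude.

    `ratio` is an arbitrary, hypothetical threshold chosen for illustration.
    """
    magnitudes = [abs(v) for v in vector]
    typical = median(magnitudes)
    if typical == 0:
        # Degenerate case: most values are exactly zero, so flag any non-zero one.
        return [i for i, m in enumerate(magnitudes) if m > 0]
    return [i for i, m in enumerate(magnitudes) if m > ratio * typical]


# Synthetic stand-in for one 768-dimensional embedding vector (not real model output).
random.seed(0)
fake_embedding = [random.uniform(-0.1, 0.1) for _ in range(768)]
fake_embedding[588] = 10.0  # mimic the spike seen in the plot

print(find_outlier_dims(fake_embedding))
```

With a real model, the same function could be applied to a single token's vector taken from the model output (for example one row of `last_hidden_state`, converted to a plain list), and repeated over several inputs to check whether the same indices keep showing up.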

Why do these abnormalities appear? Is this simply how transformers and these architectures work?