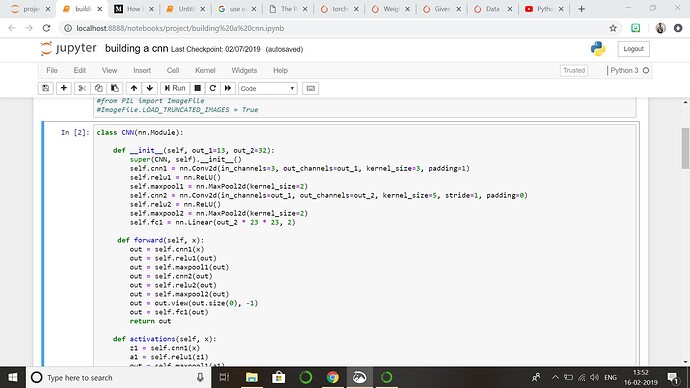

I am new to PyTorch and I am trying to build a CNN model using the following code. My issue is that I don't know how to calculate the input and output parameters of each layer. My images are 100x100 grayscale. My dataset has 1690 images, and I want to split them into batches of 13 images. There are two output classes: pos or neg. Can someone please explain how to choose the values for the layers between the two `#` lines?
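The output size of each convolution or pooling layer follows the usual formula, where $k$ is the kernel size, $p$ the padding and $s$ the stride. The worked values below assume a square input, stride 1 and no padding for the 3x3 convolutions, and 2x2 max pooling with stride 2, which are the settings used in the code below:

$$
\begin{aligned}
H_{\text{out}} &= \left\lfloor \frac{H_{\text{in}} + 2p - k}{s} \right\rfloor + 1 \\
\text{conv1 } (k=3,\ s=1): \ 100 &\to 98, \qquad \text{pool } (k=2,\ s=2): \ 98 \to 49 \\
\text{conv2 } (k=3,\ s=1): \ 49 &\to 47, \qquad \text{pool } (k=2,\ s=2): \ 47 \to 23 \\
\text{fc1 inputs} &= 16 \cdot 23 \cdot 23 = 8464
\end{aligned}
$$

The channel counts (6 and 16) are free design choices; the constraints are that conv1 takes 1 input channel for grayscale, each layer's input channels equal the previous layer's output channels, and fc3 has 2 outputs for the two classes.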

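```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        ############################
        # 1 input channel (grayscale), 6 output channels, 3x3 kernel:
        # 100x100 -> 98x98, then 2x2 max pooling -> 49x49
        self.conv1 = nn.Conv2d(1, 6, 3)
        # 6 input channels, 16 output channels, 3x3 kernel:
        # 49x49 -> 47x47, then 2x2 max pooling -> 23x23
        self.conv2 = nn.Conv2d(6, 16, 3)
        # an affine operation: y = Wx + b
        # input size = 16 channels * 23 * 23 after the second pooling
        # (16 * 5 * 5 only fits a 32x32 input with 5x5 kernels)
        self.fc1 = nn.Linear(16 * 23 * 23, 120)
        self.fc2 = nn.Linear(120, 84)
        # 2 outputs: one score per class (pos / neg)
        self.fc3 = nn.Linear(84, 2)
        ########################################

    def forward(self, x):
        # Max pooling over a (2, 2) window
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # If the size is a square you can only specify a single number
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features


net = Net()
print(net)
```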
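Instead of working out the flattened size by hand, it can be measured by pushing one dummy image through the convolution and pooling layers. This is a minimal sketch that rebuilds the same two conv layers as `Net`; the helper name `flat_size` is hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Same convolutional layers as in Net (1 grayscale input channel).
conv1 = nn.Conv2d(1, 6, 3)
conv2 = nn.Conv2d(6, 16, 3)


def flat_size(height, width):
    """Hypothetical helper: run one dummy image through the conv/pool
    stack and return the number of features fc1 must accept."""
    with torch.no_grad():
        x = torch.zeros(1, 1, height, width)  # (batch, channels, H, W)
        x = F.max_pool2d(F.relu(conv1(x)), 2)
        x = F.max_pool2d(F.relu(conv2(x)), 2)
    print(tuple(x.shape))  # (1, 16, 23, 23) for a 100x100 input
    return x[0].numel()    # features per image, excluding the batch dim


n = flat_size(100, 100)
print(n)  # 8464 = 16 * 23 * 23
```

Recent PyTorch versions also provide `nn.LazyLinear(out_features)`, which infers `in_features` on the first forward pass, as an alternative to computing this number yourself.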

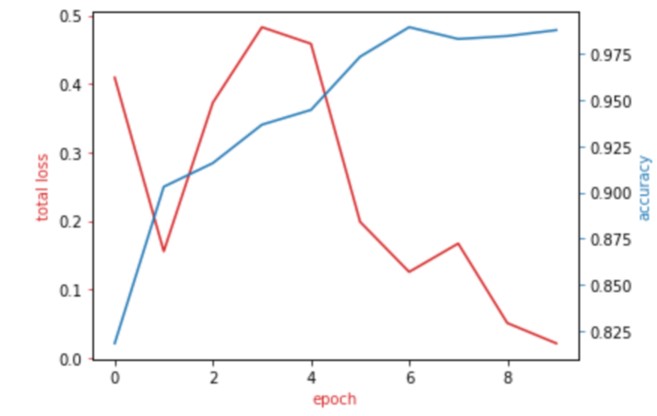

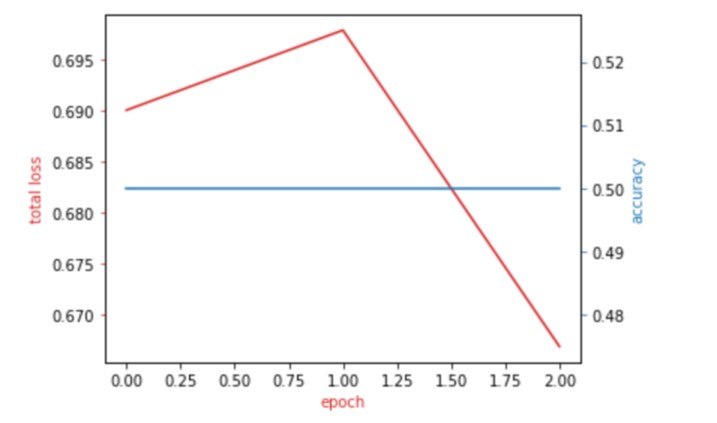

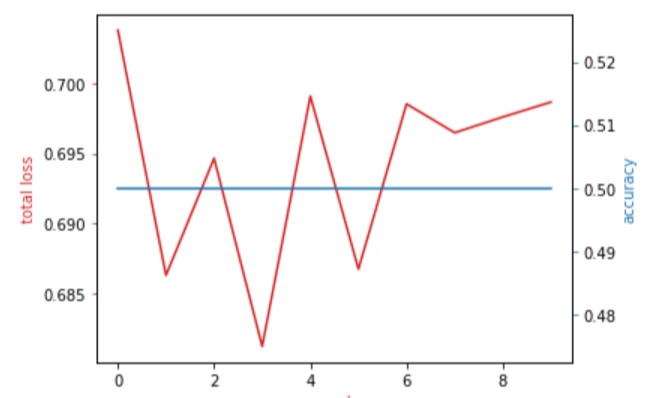

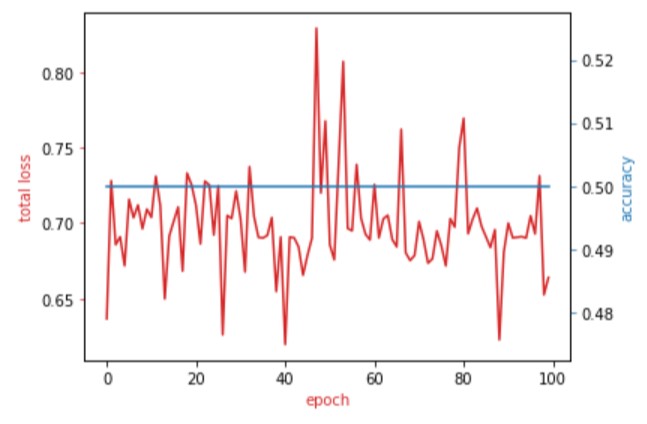

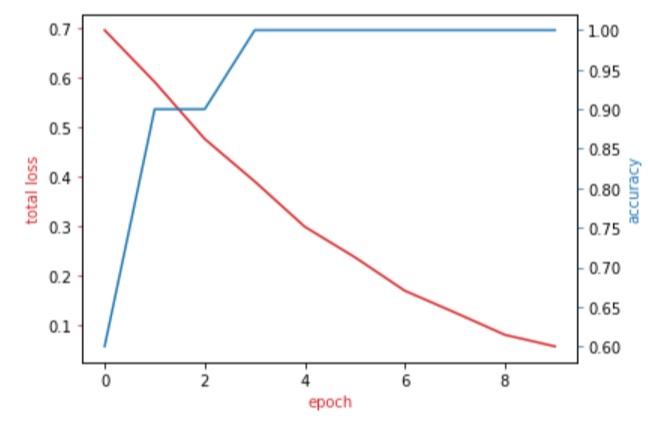

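```python
import torch

# net, train_loader, validation_loader, validation_dataset, criterion and
# optimizer are assumed to be defined earlier in the script.
n_epochs = 3
loss_list = []
accuracy_list = []
N_test = len(validation_dataset)


def train_model(n_epochs):
    for epoch in range(n_epochs):
        for x, y in train_loader:
            optimizer.zero_grad()
            z = net(x)
            loss = criterion(z, y)
            loss.backward()
            optimizer.step()

        correct = 0
        # perform a prediction on the validation data (no gradients needed)
        with torch.no_grad():
            for x_test, y_test in validation_loader:
                z = net(x_test)
                _, yhat = torch.max(z, 1)  # index of the larger score = predicted class
                correct += (yhat == y_test).sum().item()
        accuracy = correct / N_test
        accuracy_list.append(accuracy)
        # store the last batch's loss as a plain Python float
        loss_list.append(loss.item())


train_model(n_epochs)
```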
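To check that batching and the two-class output fit together, here is a minimal sketch on random stand-in data. The tensors, `lr=0.01`, and the choice of `nn.CrossEntropyLoss` with `torch.optim.SGD` are assumptions, since the real dataset, loss and optimizer are not shown as text. With `batch_size=13`, 1690 images give 130 batches per epoch, and `fc3` with 2 outputs pairs with `nn.CrossEntropyLoss` and integer labels 0 (neg) / 1 (pos).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in data: 1690 random 1x100x100 "images",
# labels 0 (neg) or 1 (pos). Replace with the real dataset.
images = torch.randn(1690, 1, 100, 100)
labels = torch.randint(0, 2, (1690,))
train_loader = DataLoader(TensorDataset(images, labels), batch_size=13, shuffle=True)


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 6, 3)
        self.conv2 = nn.Conv2d(6, 16, 3)
        self.fc1 = nn.Linear(16 * 23 * 23, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 2)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = torch.flatten(x, 1)  # keep the batch dimension, flatten the rest
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)  # raw scores (logits), one per class


net = Net()
criterion = nn.CrossEntropyLoss()  # takes logits and integer class labels
optimizer = torch.optim.SGD(net.parameters(), lr=0.01)  # lr is an assumption

print(len(train_loader))  # 130 batches per epoch (1690 / 13)

# One training step on a single batch, to confirm the shapes line up.
x, y = next(iter(train_loader))
print(x.shape)  # torch.Size([13, 1, 100, 100])
optimizer.zero_grad()
z = net(x)
print(z.shape)  # torch.Size([13, 2])
loss = criterion(z, y)
loss.backward()
optimizer.step()
```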
