Hi There,

I can’t figure out what went wrong here. I have a simple convolutional network with 128 output features, and I use the PyTorch Lightning framework for the training procedure.

The notebook I’m about to share works fine on my computer, no problem,

but Google Colab suddenly crashes and restarts the runtime when running the cell with trainer.fit(..)

The Colab kernel output log is not much help. I tracked it down in the code, and the crash happens when backward() is called during PyTorch training!

This means the dataloader, model, and loss calculation work without any problem, but when PyTorch runs the backward pass on the loss value, the runtime hits this problem.

notebook:

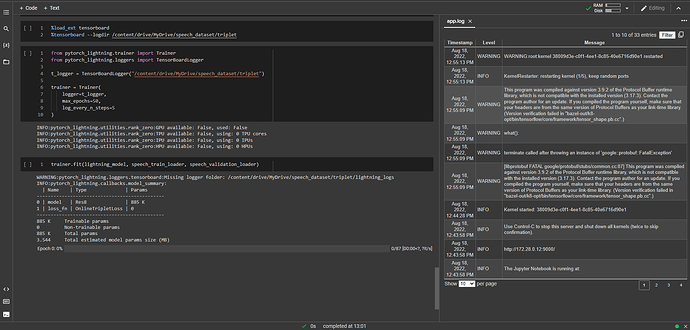

Screenshot: the restart hits in the last cell.

Does it run with dummy inputs? Does it run without pytorch lightning?

There are many things going on here

Does it crash without throwing an exception?
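A minimal sketch of what such a check could look like: a few training steps in plain PyTorch, without Lightning, on random tensors. `TinyConvNet`, the input shape, the loss, and the optimizer are all hypothetical stand-ins, since the actual model is only visible in the notebook; the idea is just to see whether `backward()` survives outside the trainer.

```python
import torch
from torch import nn


# Hypothetical stand-in for the poster's model: a small conv net with 128 output features.
class TinyConvNet(nn.Module):
    def __init__(self, out_features: int = 128):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # collapse spatial dimensions to 1x1
        )
        self.head = nn.Linear(8, out_features)

    def forward(self, x):
        return self.head(self.features(x).flatten(1))


def run_dummy_steps(steps: int = 3, batch_size: int = 4) -> list:
    """Run a few optimisation steps on random data and return the loss values."""
    torch.manual_seed(0)
    model = TinyConvNet()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    loss_fn = nn.MSELoss()
    losses = []
    for step in range(steps):
        x = torch.randn(batch_size, 3, 32, 32)  # dummy images (assumed shape)
        y = torch.randn(batch_size, 128)        # dummy targets
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()  # the call reported to crash under Lightning
        optimizer.step()
        losses.append(loss.item())
        print(f"step {step}: loss={loss.item():.4f}")
    return losses


losses = run_dummy_steps()
```

If a loop like this runs cleanly on Colab with the real model swapped in, the model code itself is less likely to be the culprit, and the trainer, its loggers, or the installed packages become the next suspects.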

Hi @JuanFMontesinos,

This notebook runs without any problem on my machine, and the model and training method work with dummy inputs. I even tried getting a batch from the dataloader, feeding it into the model, and running the loss function, and it all worked just fine!

So what happens is that the trainer runs the training step 2 or 3 times (get batch -> feed model -> calculate loss -> PyTorch Lightning calls backward()) and then the Colab runtime suddenly restarts.

No handy report, no hint; all I have is the kernel logs in the right corner of the screenshot.

I successfully trained this model for 2 epochs on my machine with 8 GB of RAM and a 4-core CPU, so I am not sure whether I hit the hardware limitations on Colab either…

It’s difficult to know.

I’d bet on OOM or running out of disk (are you logging a lot?).
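One way to test the OOM or disk hypothesis is to print free disk space and available RAM just before calling the trainer. This is only a sketch using the standard library; `resource_snapshot` is a hypothetical helper name, and the RAM figure relies on `/proc/meminfo`, which exists on Linux (Colab runs Linux) but not on every platform.

```python
import os
import shutil


def resource_snapshot(path: str = "/") -> dict:
    """Return free disk space and, where available, available RAM in GiB."""
    gib = 1024 ** 3
    snapshot = {"disk_free_gib": shutil.disk_usage(path).free / gib}
    # /proc/meminfo is Linux-specific; on other systems the RAM entry is skipped.
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            for line in f:
                if line.startswith("MemAvailable:"):
                    kib = int(line.split()[1])  # value is reported in KiB
                    snapshot["ram_available_gib"] = kib * 1024 / gib
                    break
    return snapshot


snapshot = resource_snapshot()
print(snapshot)
```

Calling it again after a couple of manual training steps gives a rough idea of whether memory or disk is shrinking quickly.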

Are you sure you are running the train method?

PyTorch Lightning usually runs some validation iterations first.

It’s strange you don’t get an exception or anything similar.
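When a process dies without a Python exception, the standard-library `faulthandler` module can sometimes recover a traceback. The sketch below enables it at the top of the notebook and writes to a file, since notebook output streams are not always usable for this; `crash_traceback.log` is a hypothetical file name. It only helps for fatal signals such as segmentation faults. If the process is killed outright by the system's out-of-memory handling, nothing will be written.

```python
import faulthandler

# Keep the file object alive for the whole session: faulthandler writes to it
# directly when a fatal signal (SIGSEGV, SIGFPE, SIGABRT, SIGBUS, SIGILL) arrives.
crash_log = open("crash_traceback.log", "w")  # hypothetical log file name
faulthandler.enable(file=crash_log, all_threads=True)

print("faulthandler enabled:", faulthandler.is_enabled())
```

After the runtime restarts, the log file can be inspected (provided the disk survived the restart) to see which Python frame was active at the time of the crash.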

Lastly, you could check the libraries. You may be using incompatible libraries. For example, I realised Colab’s torchaudio doesn’t have CUDA enabled.
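A quick way to compare the library versions on Colab against a local machine is to print them from both environments. The sketch below uses only `importlib.metadata` from the standard library; `installed_versions` is a hypothetical helper, and the package list is an assumption about what is relevant here.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Assumed list of packages worth comparing between the two environments.
report = installed_versions(["torch", "pytorch-lightning", "tensorboard", "torchaudio"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

Running the same cell locally and on Colab makes mismatched versions easy to spot side by side.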

Hi @JuanFMontesinos, I really appreciate the effort you have put into my problem.

I ended up opening this issue: the installed version of pytorch-lightning had a conflict with tensorboard. Installing the suggested version fixes the problem.