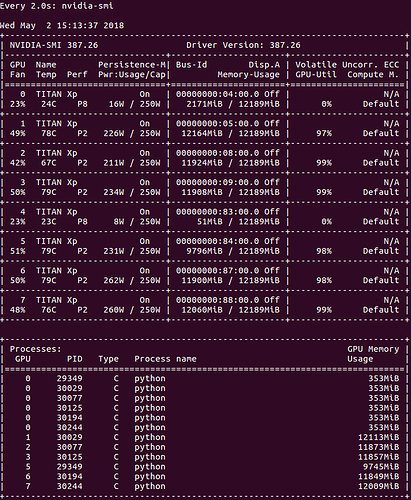

I am training different models on different GPUs simultaneously on one of my remote machines, and I found that every process running on a GPU other than GPU 0 also appears as a process on GPU 0, as shown below:

As the PIDs show, each process running on GPU i (with i nonzero) also shows up on GPU 0. I am running exactly the same code on each GPU, only with different hyperparameters. This is puzzling because on another remote machine with a very similar setup I could not reproduce the behavior, even though I ran the same code on each GPU in exactly the same way. On that machine nothing was duplicated on GPU 0; there was exactly one process per GPU.
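For reference, here is a minimal sketch of one way to start one training process per GPU so that each process can only see its own device. It relies on the `CUDA_VISIBLE_DEVICES` environment variable; the script name `train.py` and the `--lr` flag in the usage note are hypothetical placeholders, not my actual launch command. With this setup the single visible GPU is addressed as device 0 inside each process, so it could serve as a check on whether the extra entries on GPU 0 come from something in the process touching the default device.

```python
import os
import subprocess
import sys


def build_env(gpu_id, base_env=None):
    """Return a copy of the environment that exposes only one physical GPU."""
    env = dict(os.environ if base_env is None else base_env)
    # The CUDA runtime reads this variable when it initializes, so it must be
    # set before the child process makes its first CUDA call.
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return env


def launch(script, gpu_id, extra_args=()):
    """Start `script` as a child process restricted to physical GPU `gpu_id`."""
    cmd = [sys.executable, script] + list(extra_args)
    return subprocess.Popen(cmd, env=build_env(gpu_id))
```

A launcher would then call something like `launch("train.py", 1, ["--lr", "0.01"])` once per GPU and wait on the returned processes.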

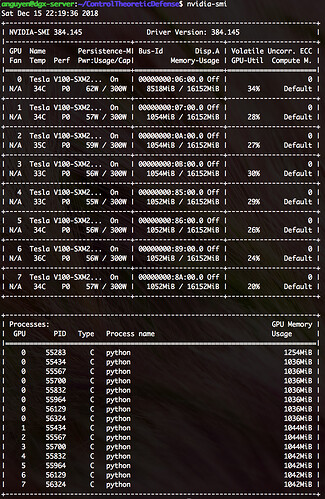

So I concluded that this is probably not caused by a bug in my code but by the environment, such as the PyTorch, CUDA, or driver version. The other machine, where I tried to reproduce the behavior, has exactly the same driver version (387.26) and the same number of the same GPUs (Titan Xp), but somewhat different versions of PyTorch and CUDA. The exact versions of the packages installed on each machine (all installed with conda) are listed below, first for the machine where I had the problem and then for the other machine:

(The problematic machine)

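| Name | Version | Build | Channel |
|---|---|---|---|
| certifi | 2016.2.28 | py36_0 | |
| cffi | 1.10.0 | py36_0 | |
| cudatoolkit | 8.0 | 3 | anaconda |
| cudnn | 6.0.21 | cuda8.0_0 | |
| cycler | 0.10.0 | py36_0 | |
| cycler | 0.10.0 | `<pip>` | |
| dbus | 1.10.20 | 0 | |
| decorator | 4.1.2 | py36_0 | |
| expat | 2.1.0 | 0 | |
| fontconfig | 2.12.1 | 3 | |
| freetype | 2.5.5 | 2 | |
| glib | 2.50.2 | 1 | |
| gst-plugins-base | 1.8.0 | 0 | |
| gstreamer | 1.8.0 | 0 | |
| icu | 54.1 | 0 | |
| imageio | 2.3.0 | py36_0 | conda-forge |
| intel-openmp | 2018.0.0 | hc7b2577_8 | |
| jbig | 2.1 | 0 | |
| jpeg | 9b | 0 | |
| libffi | 3.2.1 | 1 | |
| libgcc | 5.2.0 | 0 | |
| libgcc-ng | 7.2.0 | h7cc24e2_2 | |
| libgfortran | 3.0.0 | 1 | |
| libgfortran-ng | 7.2.0 | h9f7466a_2 | |
| libiconv | 1.14 | 0 | |
| libpng | 1.6.30 | 1 | |
| libstdcxx-ng | 7.2.0 | h7a57d05_2 | |
| libtiff | 4.0.6 | 3 | |
| libxcb | 1.12 | 1 | |
| libxml2 | 2.9.4 | 0 | |
| matplotlib | 2.0.2 | `<pip>` | |
| matplotlib | 2.0.2 | np113py36_0 | |
| mkl | 2018.0.1 | h19d6760_4 | |
| nccl | 1.3.4 | cuda8.0_1 | |
| networkx | 1.11 | py36_0 | |
| numpy | 1.13.3 | py36ha12f23b_0 | |
| olefile | 0.44 | py36_0 | |
| openssl | 1.0.2l | 0 | |
| pcre | 8.39 | 1 | |
| pillow | 4.2.1 | py36_0 | |
| pip | 9.0.1 | py36_1 | |
| pycparser | 2.18 | py36_0 | |
| pyparsing | 2.2.0 | py36_0 | |
| pyparsing | 2.2.0 | `<pip>` | |
| pyqt | 5.6.0 | py36_2 | |
| python | 3.6.2 | 0 | |
| python-dateutil | 2.6.1 | `<pip>` | |
| python-dateutil | 2.6.1 | py36_0 | |
| pytorch | 0.3.0 | py36_cuda8.0.61_cudnn7.0.3h37a80b5_4 | pytorch |
| pytz | 2017.2 | py36_0 | |
| pytz | 2017.2 | `<pip>` | |
| pywavelets | 0.5.2 | np113py36_0 | |
| qt | 5.6.2 | 5 | |
| readline | 6.2 | 2 | |
| scikit-image | 0.13.0 | np113py36_0 | |
| scipy | 1.0.0 | py36hbf646e7_0 | |
| setuptools | 36.4.0 | py36_0 | |
| sip | 4.18 | py36_0 | |
| six | 1.10.0 | py36_0 | |
| sqlite | 3.13.0 | 0 | |
| tk | 8.5.18 | 0 | |
| torchvision | 0.2.0 | py36h17b6947_1 | pytorch |
| wheel | 0.29.0 | py36_0 | |
| xz | 5.2.3 | 0 | |
| zlib | 1.2.11 | 0 | |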

(The other machine)

| Name | Version | Build | Channel |
|---|---|---|---|
| ca-certificates | 2018.03.07 | 0 | |
| certifi | 2018.4.16 | py36_0 | |
| cffi | 1.11.5 | py36h9745a5d_0 | |
| cuda91 | 1.0 | h4c16780_0 | pytorch |
| cycler | 0.10.0 | py36h93f1223_0 | |
| dbus | 1.13.2 | h714fa37_1 | |
| expat | 2.2.5 | he0dffb1_0 | |
| fontconfig | 2.12.6 | h49f89f6_0 | |
| freetype | 2.8 | hab7d2ae_1 | |
| glib | 2.56.1 | h000015b_0 | |
| gst-plugins-base | 1.14.0 | hbbd80ab_1 | |
| gstreamer | 1.14.0 | hb453b48_1 | |
| icu | 58.2 | h9c2bf20_1 | |
| imageio | 2.3.0 | py36_0 | |
| intel-openmp | 2018.0.0 | 8 | |
| jpeg | 9b | h024ee3a_2 | |
| kiwisolver | 1.0.1 | py36h764f252_0 | |
| libedit | 3.1 | heed3624_0 | |
| libffi | 3.2.1 | hd88cf55_4 | |
| libgcc-ng | 7.2.0 | hdf63c60_3 | |
| libgfortran-ng | 7.2.0 | hdf63c60_3 | |
| libpng | 1.6.34 | hb9fc6fc_0 | |
| libstdcxx-ng | 7.2.0 | hdf63c60_3 | |
| libtiff | 4.0.9 | h28f6b97_0 | |
| libxcb | 1.13 | h1bed415_1 | |
| libxml2 | 2.9.8 | hf84eae3_0 | |
| matplotlib | 2.2.2 | py36h0e671d2_1 | |
| mkl | 2018.0.2 | 1 | |
| mkl_fft | 1.0.1 | py36h3010b51_0 | |
| mkl_random | 1.0.1 | py36h629b387_0 | |
| ncurses | 6.0 | h9df7e31_2 | |
| numpy | 1.14.2 | py36hdbf6ddf_1 | |
| olefile | 0.45.1 | py36_0 | |
| openssl | 1.0.2o | h20670df_0 | |
| pcre | 8.42 | h439df22_0 | |
| pillow | 5.1.0 | py36h3deb7b8_0 | |
| pip | 9.0.3 | py36_0 | |
| pycparser | 2.18 | py36hf9f622e_1 | |
| pyparsing | 2.2.0 | py36hee85983_1 | |
| pyqt | 5.9.2 | py36h751905a_0 | |
| python | 3.6.4 | hc3d631a_3 | |
| python-dateutil | 2.7.2 | py36_0 | |
| pytorch | 0.3.1 | py36_cuda9.1.85_cudnn7.0.5_2 [cuda91] | pytorch |
| pytz | 2018.4 | py36_0 | |
| qt | 5.9.5 | h7e424d6_0 | |
| readline | 7.0 | ha6073c6_4 | |
| scipy | 1.0.1 | py36hfc37229_0 | |
| setuptools | 39.0.1 | py36_0 | |
| sip | 4.19.8 | py36hf484d3e_0 | |
| six | 1.11.0 | py36h372c433_1 | |
| sqlite | 3.23.1 | he433501_0 | |
| tk | 8.6.7 | hc745277_3 | |
| torchvision | 0.2.0 | py36h17b6947_1 | pytorch |
| tornado | 5.0.2 | py36_0 | |
| wheel | 0.31.0 | py36_0 | |
| xz | 5.2.3 | h55aa19d_2 | |
| zlib | 1.2.11 | ha838bed_2 | |

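As you can see, their PyTorch and CUDA versions differ: PyTorch 0.3.0 built against CUDA 8.0 on the problematic machine versus PyTorch 0.3.1 built against CUDA 9.1 on the other. Can this be the cause of this weird behavior?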

(By the way, I am not sure whether this is relevant, but `nvcc --version` reports CUDA V7.5.17 on both machines, possibly a remnant of previous installations.)
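On the `nvcc` point: as far as I understand, the conda PyTorch binaries are built against their own CUDA runtime, so the toolkit that `nvcc` finds on the PATH is not necessarily the one PyTorch uses. The build strings in the package lists above appear to encode the CUDA and cuDNN versions of each binary. The sketch below pulls them out with a regular expression; the helper name `cuda_from_build` and the pattern are my own and assume the `cudaX.Y.Z_cudnnA.B.C` naming seen in these two builds.

```python
import re

# Assumed pattern, based only on the two build strings in the lists above.
BUILD_RE = re.compile(r"cuda(\d+(?:\.\d+)*)_cudnn(\d+\.\d+\.\d+)")


def cuda_from_build(build):
    """Extract the CUDA and cuDNN versions from a conda PyTorch build string."""
    match = BUILD_RE.search(build)
    if match is None:
        return None  # build string does not follow the assumed naming
    return {"cuda": match.group(1), "cudnn": match.group(2)}


builds = {
    "problematic machine": "py36_cuda8.0.61_cudnn7.0.3h37a80b5_4",
    "other machine": "py36_cuda9.1.85_cudnn7.0.5_2",
}

for machine, build in builds.items():
    print(machine, cuda_from_build(build))
```

Read this way, the two machines differ in the CUDA runtime PyTorch was built against (8.0.61 versus 9.1.85), regardless of what `nvcc` reports.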