I am training a GAN to remove masks from human faces.

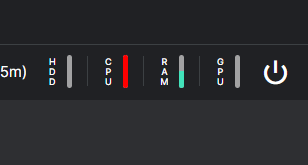

During training, `device` is `'cuda'`, and my models and data are all moved to `'cuda'`.

However, all of the training seems to happen on the CPU, and the GPU stays unutilised.

I also checked the device of my tensors during training, and it is `cuda`.

The code runs correctly, but on the CPU rather than the GPU, even though the device is `'cuda'`.
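One way to tell whether the GPU is idle because of device placement or because it is starved for data is to time each iteration: how long the loop waits for the next batch versus how long the training step itself takes. The sketch below is framework-agnostic, and the names `time_loop`, `step` and `sync` are hypothetical. With PyTorch, `sync` would be `torch.cuda.synchronize`, because CUDA kernels are launched asynchronously and the step would otherwise appear faster than it really is.

```python
import time
from typing import Callable, Iterable, Optional, Tuple


def time_loop(loader: Iterable, step: Callable[[object], None],
              sync: Optional[Callable[[], None]] = None) -> Tuple[float, float]:
    """Return (seconds spent waiting on loader, seconds spent in step) for one pass.

    `sync` (hypothetical hook, e.g. torch.cuda.synchronize) is called after each
    step so that asynchronous device work is counted as step time.
    """
    load_time = 0.0
    step_time = 0.0
    it = iter(loader)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # time spent producing the next batch
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)  # time spent in the training step itself
        if sync is not None:
            sync()
        t2 = time.perf_counter()
        load_time += t1 - t0
        step_time += t2 - t1
    return load_time, step_time


if __name__ == "__main__":
    # Toy stand-ins: a deliberately slow "loader" and a trivial "step".
    def slow_loader(n):
        for i in range(n):
            time.sleep(0.01)
            yield i

    load, compute = time_loop(slow_loader(20), step=lambda batch: None)
    print(f"loading: {load:.3f}s, step: {compute:.3f}s")
```

Applied to the loop in this post, `loader` would be `dataloader` and `step` a function performing one discriminator and generator update. If the loading share dominates (each item here is decoded with `cv2.imread` and resized on the CPU), the DataLoader's `num_workers` and `pin_memory` arguments are the usual places to look, rather than the `.to(device)` calls.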

```python
import os

import cv2
import torch
import torch.nn as nn
from torch.utils.data import Dataset
from torchvision import transforms
from torchvision.transforms import Resize, ToTensor
from tqdm import tqdm


class RemoveMaskDataset(Dataset):
    """Pairs each image in base_dir/with_mask with one in base_dir/without_mask."""

    def __init__(self, base_dir):
        super().__init__()
        self.base_dir = base_dir
        self.with_mask_dir_path = os.path.join(self.base_dir, 'with_mask')
        self.without_mask_dir_path = os.path.join(self.base_dir, 'without_mask')
        # os.listdir() returns names in arbitrary order, so sort both lists.
        # Assumes matching pairs sort into the same order (e.g. identical file names).
        self.masked_images_names = sorted(os.listdir(self.with_mask_dir_path))
        self.without_mask_images_names = sorted(os.listdir(self.without_mask_dir_path))
        self.masked_images_paths = [os.path.join(self.with_mask_dir_path, name)
                                    for name in self.masked_images_names]
        self.without_masked_images_paths = [os.path.join(self.without_mask_dir_path, name)
                                            for name in self.without_mask_images_names]
        # HWC uint8 array -> CHW float tensor in [0, 1], then resize to 64x64.
        self.transform = transforms.Compose([
            ToTensor(),
            Resize((64, 64), antialias=True),
        ])

    def __len__(self):
        return len(self.masked_images_names)

    def __getitem__(self, idx):
        masked_img_path = self.masked_images_paths[idx]
        without_mask_img_path = self.without_masked_images_paths[idx]
        # Image decoding and resizing run on the CPU inside the DataLoader.
        mask_img = cv2.imread(masked_img_path)
        without_mask = cv2.imread(without_mask_img_path)
        mask_img_rgb = cv2.cvtColor(mask_img, cv2.COLOR_BGR2RGB)
        without_mask_rgb = cv2.cvtColor(without_mask, cv2.COLOR_BGR2RGB)
        return self.transform(mask_img_rgb), self.transform(without_mask_rgb)


class Generator(nn.Module):
    """DCGAN-style generator: (N, latent_dim, 1, 1) noise -> (N, 3, 64, 64) image."""

    def __init__(self, latent_dim):
        super().__init__()
        self.latent_dim = latent_dim
        self.convtr1 = nn.ConvTranspose2d(self.latent_dim, 512, 4, 1, 0, bias=False)  # 1 -> 4
        self.batchnorm1 = nn.BatchNorm2d(512)
        self.relu1 = nn.ReLU()
        self.convtr2 = nn.ConvTranspose2d(512, 256, 4, 2, 1, bias=False)  # 4 -> 8
        self.batchnorm2 = nn.BatchNorm2d(256)
        self.relu2 = nn.ReLU()
        self.convtr3 = nn.ConvTranspose2d(256, 128, 4, 2, 1, bias=False)  # 8 -> 16
        self.batchnorm3 = nn.BatchNorm2d(128)
        self.relu3 = nn.ReLU()
        self.convtr4 = nn.ConvTranspose2d(128, 64, 4, 2, 1, bias=False)  # 16 -> 32
        self.batchnorm4 = nn.BatchNorm2d(64)
        self.relu4 = nn.ReLU()
        self.convtr5 = nn.ConvTranspose2d(64, 3, 4, 2, 1, bias=False)  # 32 -> 64

    def forward(self, input):
        x = self.relu1(self.batchnorm1(self.convtr1(input)))
        x = self.relu2(self.batchnorm2(self.convtr2(x)))
        x = self.relu3(self.batchnorm3(self.convtr3(x)))
        x = self.relu4(self.batchnorm4(self.convtr4(x)))
        x = self.convtr5(x)
        return x


class Discriminator(nn.Module):
    """(N, 3, 64, 64) image -> (N, 1, 1, 1) probability that the image is real."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, 4, 2, 1, bias=False)  # 64 -> 32
        self.act1 = nn.LeakyReLU()
        self.conv2 = nn.Conv2d(64, 128, 4, 2, 1, bias=False)  # 32 -> 16
        self.bnrm2 = nn.BatchNorm2d(128)
        # LeakyReLU's first argument is negative_slope, not a channel count,
        # so LeakyReLU(128) would scale negative inputs by 128.
        self.act2 = nn.LeakyReLU()
        self.conv3 = nn.Conv2d(128, 256, 4, 2, 1, bias=False)  # 16 -> 8
        self.bnrm3 = nn.BatchNorm2d(256)
        self.act3 = nn.LeakyReLU()
        self.conv4 = nn.Conv2d(256, 512, 4, 2, 1, bias=False)  # 8 -> 4
        self.bnrm4 = nn.BatchNorm2d(512)
        self.act4 = nn.LeakyReLU()
        self.final_conv = nn.Conv2d(512, 1, 4, 1, 0, bias=False)  # 4 -> 1
        self.sigmoid = nn.Sigmoid()

    def forward(self, input):
        x = self.act1(self.conv1(input))
        x = self.act2(self.bnrm2(self.conv2(x)))
        x = self.act3(self.bnrm3(self.conv3(x)))
        x = self.act4(self.bnrm4(self.conv4(x)))
        x = self.final_conv(x)
        x = self.sigmoid(x)
        return x


# Not shown in the post: device, latent_dim, num_epochs, save_dir, dataloader,
# generator, discriminator, discriminator_optimizer, generator_optimizer,
# discriminator_loss and generator_loss are defined elsewhere.
D_loss_plot, G_loss_plot = [], []

for epoch in tqdm(range(1, num_epochs + 1)):
    D_loss_list, G_loss_list = [], []

    for index, (input_images, output_images) in enumerate(dataloader):
        # Discriminator training
        discriminator_optimizer.zero_grad()
        input_images, output_images = input_images.to(device), output_images.to(device)
        print(input_images.device)
        print(output_images.device)

        # Labels of shape (N, 1); Variable is deprecated, plain tensors work directly.
        real_target = torch.ones(input_images.size(0), 1, device=device)
        output_target = torch.zeros(output_images.size(0), 1, device=device)

        D_real_loss = discriminator_loss(discriminator(input_images).view(-1), real_target.view(-1))
        D_real_loss.backward()

        noise_vector = torch.randn(input_images.size(0), latent_dim, 1, 1, device=device)
        generated_image = generator(noise_vector)
        output = discriminator(generated_image.detach())
        D_fake_loss = discriminator_loss(output.view(-1), output_target.view(-1))
        D_fake_loss.backward()

        D_total_loss = D_real_loss + D_fake_loss
        # .item() stores a Python float instead of a CUDA tensor that keeps its graph alive.
        D_loss_list.append(D_total_loss.item())
        discriminator_optimizer.step()

        # Generator training
        generator_optimizer.zero_grad()
        G_loss = generator_loss(discriminator(generated_image).view(-1), real_target.view(-1))
        G_loss_list.append(G_loss.item())
        G_loss.backward()
        generator_optimizer.step()

    # Print and save results once per epoch
    D_mean = sum(D_loss_list) / len(D_loss_list)
    G_mean = sum(G_loss_list) / len(G_loss_list)
    print('Epoch: [%d/%d]: D_loss: %.3f, G_loss: %.3f' % (epoch, num_epochs, D_mean, G_mean))
    D_loss_plot.append(D_mean)
    G_loss_plot.append(G_mean)

    torch.save(generator.state_dict(), f'./{save_dir}/generator_epoch_{epoch}.pth')
    torch.save(discriminator.state_dict(), f'./{save_dir}/discriminator_epoch_{epoch}.pth')
```
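Separately from the device question, the layer hyperparameters can be checked by hand with PyTorch's documented output-size formulas for `ConvTranspose2d` (assuming dilation 1 and `output_padding=0`) and `Conv2d` (assuming dilation 1). The short sketch below traces the spatial size through both models as defined above; `trace` and the layer tables are illustrative helpers, not part of the original code.

```python
from typing import Callable, List, Tuple

Layer = Tuple[int, int, int]  # (kernel, stride, padding)


def convtranspose2d_out(size: int, kernel: int, stride: int, padding: int) -> int:
    # ConvTranspose2d output size with dilation=1 and output_padding=0.
    return (size - 1) * stride - 2 * padding + kernel


def conv2d_out(size: int, kernel: int, stride: int, padding: int) -> int:
    # Conv2d output size with dilation=1 (floor division, as in PyTorch).
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) per layer, copied from the Generator and Discriminator.
GENERATOR_LAYERS: List[Layer] = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
DISCRIMINATOR_LAYERS: List[Layer] = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]


def trace(size: int, layers: List[Layer], rule: Callable[[int, int, int, int], int]) -> List[int]:
    """Return the spatial size before the first layer and after each layer."""
    sizes = [size]
    for kernel, stride, padding in layers:
        size = rule(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


print(trace(1, GENERATOR_LAYERS, convtranspose2d_out))  # [1, 4, 8, 16, 32, 64]
print(trace(64, DISCRIMINATOR_LAYERS, conv2d_out))      # [64, 32, 16, 8, 4, 1]
```

Both traces line up with the `Resize((64, 64))` in the dataset: the generator goes from a 1×1 latent input to a 64×64 image, and the discriminator ends in a single 1×1 score per image.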

What should I do to fix this?