Hi,

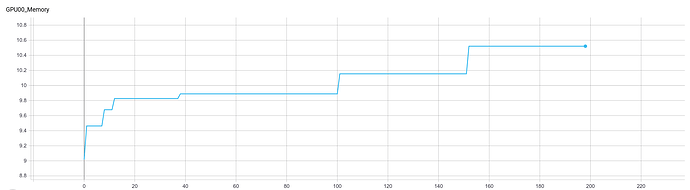

I would like to ask for some beginner-level advice on debugging GPU memory usage. For instance, my network shows this behaviour:

Over time the GPU memory usage increases a little. The values were obtained with torch.cuda.max_memory_allocated at the end of each epoch. What is the recommended procedure for finding memory leaks and similar problems in PyTorch? I was thinking of using the garbage collector, but I am not sure it is the best way to tackle this problem. Is there any tool that helps with this task?
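To make the question concrete, here is a minimal sketch of the kind of check I have in mind; it is not meant as a definitive approach. The helper names `cuda_memory_snapshot` and `live_tensor_counts` are my own (hypothetical, not part of PyTorch). Since `torch.cuda.max_memory_allocated` reports a peak that only goes down after `torch.cuda.reset_peak_memory_stats` is called, the sketch also reads `torch.cuda.memory_allocated` and resets the peak after each reading. The garbage-collector part only sees tensors that still have a live Python object, so I assume it can miss memory held purely on the C++ side.

```python
import gc
from collections import Counter

import torch


def cuda_memory_snapshot(device=None):
    """Return current and peak allocated bytes, then reset the peak counter.

    Falls back to zeros when no CUDA device is available.
    """
    if not torch.cuda.is_available():
        return {"allocated": 0, "peak": 0}
    stats = {
        "allocated": torch.cuda.memory_allocated(device),
        "peak": torch.cuda.max_memory_allocated(device),
    }
    # Without this reset, the peak never decreases between epochs.
    torch.cuda.reset_peak_memory_stats(device)
    return stats


def live_tensor_counts():
    """Count tensors tracked by the garbage collector, keyed by (device, shape)."""
    counts = Counter()
    for obj in gc.get_objects():
        try:
            if torch.is_tensor(obj):
                counts[(str(obj.device), tuple(obj.shape))] += 1
        except Exception:
            # Some tracked objects raise on inspection; skip them.
            continue
    return counts
```

The idea would be to call both helpers at the end of each epoch and compare against the previous epoch, for example with `live_tensor_counts() - previous_counts`, to see which tensor shapes keep accumulating. One pattern I would expect this to catch is storing a loss tensor in a list instead of `loss.item()`.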

Thanks!!