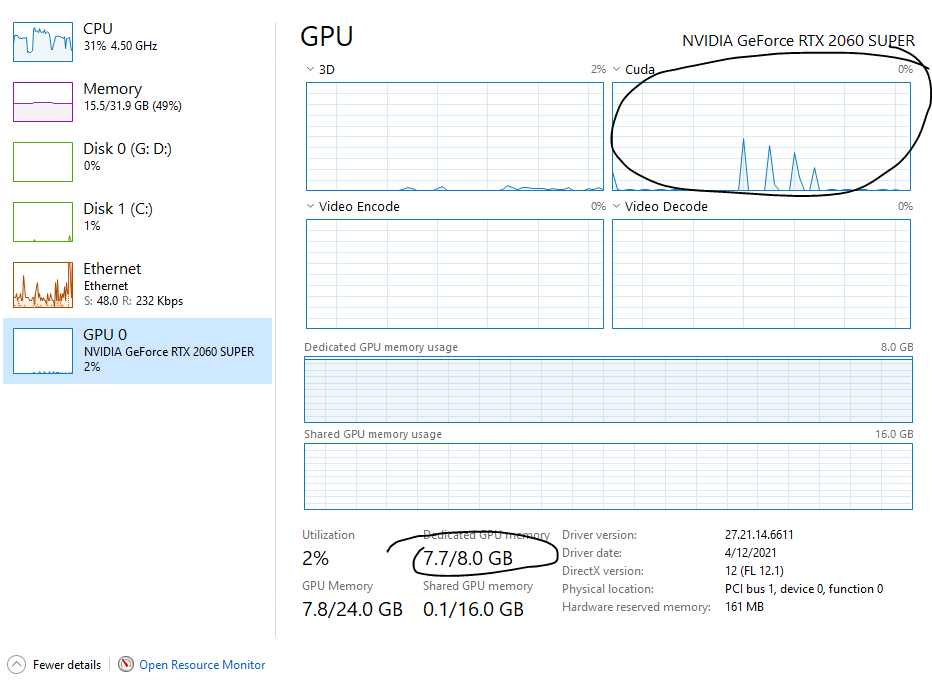

May I know why PyTorch always needs to hold on to the GPU memory? Or is there any way to release it?

PyTorch uses a caching mechanism to be able to reuse already allocated memory.

You can check the reserved and allocated memory, e.g. via `print(torch.cuda.memory_summary())`, to see how much memory is currently allocated and how much is held in the cache.
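If you only need the two numbers rather than the full summary, something like the following rough sketch might help. It uses `torch.cuda.memory_allocated()` and `torch.cuda.memory_reserved()`; the helper name `cuda_memory_snapshot` and the tensor size are just assumptions for illustration, and the helper falls back to zeros on a machine without CUDA.

```python
import torch


def cuda_memory_snapshot():
    """Return (allocated_bytes, reserved_bytes) for the current CUDA device.

    Hypothetical helper: returns (0, 0) when no CUDA device is available,
    so the sketch also runs on CPU-only machines.
    """
    if not torch.cuda.is_available():
        return 0, 0
    return torch.cuda.memory_allocated(), torch.cuda.memory_reserved()


before = cuda_memory_snapshot()
if torch.cuda.is_available():
    x = torch.empty(1024, 1024, device="cuda")  # 4 MiB of float32 values
    during = cuda_memory_snapshot()
    del x  # the tensor is gone, but its block stays in PyTorch's cache
    after = cuda_memory_snapshot()
    print("before:", before)
    print("during:", during)
    print("after: ", after)
```

On a GPU machine you would typically see the allocated value drop again after `del x` while the reserved value stays up, which is the cached memory that tools like `nvidia-smi` still report as in use.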

To release the memory from the cache so that other processes can use it, you could call `torch.cuda.empty_cache()`. Note that PyTorch will then have to reallocate this memory with a synchronizing `cudaMalloc` the next time it is needed.
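As a minimal sketch of what that looks like in practice (the helper name `release_cached_memory` is made up for this example), you could compare the reserved memory before and after the call:

```python
import torch


def release_cached_memory():
    """Free unused cached blocks and return how many reserved bytes were given back.

    Hypothetical helper: returns 0 when no CUDA device is available.
    """
    if not torch.cuda.is_available():
        return 0
    reserved_before = torch.cuda.memory_reserved()
    torch.cuda.empty_cache()  # hands unused cached blocks back to the driver
    return reserved_before - torch.cuda.memory_reserved()


if torch.cuda.is_available():
    x = torch.empty(1024, 1024, device="cuda")
    del x  # without this, the block is still in use and cannot be released
    print("bytes released:", release_cached_memory())
```

Keep in mind that only unused cached blocks are released: memory that is still referenced by live tensors stays allocated, and the CUDA context itself also keeps some memory for as long as the process runs.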