Hi, I’m new to CV. Here is my code:

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
import cv2
import matplotlib.pyplot as plt
from os.path import isfile
import torch.nn.init as init
import torch
import torch.nn as nn
import numpy as np
import pandas as pd
import os
from PIL import Image, ImageFilter
from sklearn.model_selection import train_test_split, StratifiedKFold, KFold
from torch.utils.data import Dataset
from torchvision import transforms
from torch.optim import Adam, SGD, RMSprop, lr_scheduler
import time
from torch.autograd import Variable
import torch.functional as F
from tqdm import tqdm
from sklearn import metrics
import urllib
import pickle
import torch.nn.functional as F
from torchvision import models
import scipy as sp
from functools import partial
import random
import sys
from efficientnet_pytorch import EfficientNet

try:
    from apex.parallel import DistributedDataParallel as DDP
    from apex.fp16_utils import *
    from apex import amp, optimizers
    from apex.multi_tensor_apply import multi_tensor_applier
except ImportError:
    raise ImportError("Please install apex from https://www.github.com/nvidia/apex to run this example.")


def seed_everything(seed):
    random.seed(seed)
    os.environ['PYTHONHASHSEED'] = str(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.backends.cudnn.deterministic = True


SEED = 1234
TTA = 5
num_classes = 1
IMG_SIZE = 256
DEBUG = True
n_epochs = 100
es = 3
AMP = 'O2'
device = torch.device("cuda")
seed_everything(SEED)


class OptimizedRounder(object):
    def __init__(self):
        self.coef_ = 0

    def _kappa_loss(self, coef, X, y):
        X_p = np.copy(X)
        for i, pred in enumerate(X_p):
            if pred < coef[0]:
                X_p[i] = 0
            elif pred >= coef[0] and pred < coef[1]:
                X_p[i] = 1
            elif pred >= coef[1] and pred < coef[2]:
                X_p[i] = 2
            elif pred >= coef[2] and pred < coef[3]:
                X_p[i] = 3
            else:
                X_p[i] = 4
        ll = metrics.cohen_kappa_score(y, X_p, weights='quadratic')
        return -ll

    def fit(self, X, y):
        loss_partial = partial(self._kappa_loss, X=X, y=y)
        initial_coef = [0.5, 1.5, 2.5, 3.5]
        self.coef_ = sp.optimize.minimize(loss_partial, initial_coef, method='nelder-mead')
        print(-loss_partial(self.coef_['x']))

    def predict(self, X, coef):
        X_p = np.copy(X)
        for i, pred in enumerate(X_p):
            if pred < coef[0]:
                X_p[i] = 0
            elif pred >= coef[0] and pred < coef[1]:
                X_p[i] = 1
            elif pred >= coef[1] and pred < coef[2]:
                X_p[i] = 2
            elif pred >= coef[2] and pred < coef[3]:
                X_p[i] = 3
            else:
                X_p[i] = 4
        return X_p

    def coefficients(self):
        return self.coef_['x']


def score(valid_predictions, test_predictions, targets):
    optR = OptimizedRounder()
    optR.fit(valid_predictions, targets)
    coefficients = optR.coefficients()
    valid_predictions = optR.predict(valid_predictions, coefficients)
    test_predictions = optR.predict(test_predictions, coefficients)
    cv_socre = metrics.cohen_kappa_score(targets, valid_predictions, weights='quadratic')
    return valid_predictions, test_predictions, cv_socre


def expand_path(p):
    p = str(p)
    # print(train + p + ".png")
    if isfile(train + p + ".png"):
        return train + (p + ".png")
    # if isfile(train_2015 + p + '.png'):
    #     return train_2015 + (p + ".png")
    if isfile(test + p + ".png"):
        return test + (p + ".png")
    return p


def crop_image1(img, tol=7):
    # img is image data
    # tol is tolerance
    mask = img > tol
    return img[np.ix_(mask.any(1), mask.any(0))]


def crop_image_from_gray(img, tol=7):
    if img.ndim == 2:
        mask = img > tol
        return img[np.ix_(mask.any(1), mask.any(0))]
    elif img.ndim == 3:
        gray_img = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
        mask = gray_img > tol
        check_shape = img[:, :, 0][np.ix_(mask.any(1), mask.any(0))].shape[0]
        if check_shape == 0:  # image is too dark so that we crop out everything,
            return img  # return original image
        else:
            img1 = img[:, :, 0][np.ix_(mask.any(1), mask.any(0))]
            img2 = img[:, :, 1][np.ix_(mask.any(1), mask.any(0))]
            img3 = img[:, :, 2][np.ix_(mask.any(1), mask.any(0))]
            # print(img1.shape,img2.shape,img3.shape)
            img = np.stack([img1, img2, img3], axis=-1)
            # print(img.shape)
        return img


class MyDataset(Dataset):
    def __init__(self, dataframe, transform=None):
        self.df = dataframe
        self.transform = transform

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        label = self.df.diagnosis.values[idx]
        label = np.expand_dims(label, -1)
        p = self.df.id_code.values[idx]
        p_path = expand_path(p)
        image = cv2.imread(p_path)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        image = crop_image_from_gray(image)
        image = cv2.resize(image, (IMG_SIZE, IMG_SIZE))
        image = cv2.addWeighted(image, 4, cv2.GaussianBlur(image, (0, 0), 30), -4, 128)
        image = transforms.ToPILImage()(image)
        if self.transform:
            image = self.transform(image)
        return image, label


def train_model(data_loader):
    model.train()
    avg_loss = 0.
    optimizer.zero_grad()
    for idx, (imgs, labels) in enumerate(data_loader):
        imgs_train, labels_train = imgs.cuda(), labels.float().cuda()
        output_train = model(imgs_train)
        loss = criterion(output_train, labels_train)
        with amp.scale_loss(loss, optimizer) as scaled_loss:
            scaled_loss.backward()
        optimizer.step()
        optimizer.zero_grad()
        avg_loss += loss.item() / len(data_loader)
    return avg_loss


def val_model(data_loader):
    avg_val_loss = 0.
    model.eval()
    with torch.no_grad():
        for idx, (imgs, labels) in enumerate(data_loader):
            imgs_vaild, labels_vaild = imgs.cuda(), labels.float().cuda()
            output_test = model(imgs_vaild)
            avg_val_loss += criterion(output_test, labels_vaild).item() / len(data_loader)
    return avg_val_loss, output_test


def test_model(data_loader):
    test_pred = np.zeros((len(data_loader), 1))
    model.eval()
    for _ in range(TTA):
        with torch.no_grad():
            for i, data in tqdm(enumerate(data_loader)):
                images, _ = data
                images = images.cuda()
                pred = model(images)
                test_pred[i * data_loader.batch_size:(i + 1) * data_loader.batch_size] += pred.detach().cpu().squeeze().numpy().reshape(-1, 1)
    output = test_pred / TTA
    return output


train_transform = transforms.Compose([
    transforms.RandomHorizontalFlip(),
    transforms.RandomRotation((-120, 120)),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

train = '../input/train_images/'
test = '../input/test_images/'
train_csv = pd.read_csv('../input/train.csv')
test_csv = pd.read_csv('../input/test.csv')

test_transform = transforms.Compose([
    transforms.RandomHorizontalFlip(),
    transforms.RandomRotation((-120, 120)),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

testset = MyDataset(test_csv, transform=test_transform)
test_loader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=False)

folds = StratifiedKFold(n_splits=5, random_state=SEED)
y_valid_pred = np.zeros((train_csv.shape[0], 1))
y_test_pred = np.zeros((test_csv.shape[0], 1))

for n_fold, (trn_idx, val_idx) in enumerate(folds.split(train_csv, train_csv.diagnosis)):
    print('fold {}:'.format(n_fold))
    train_df, valid_df = train_csv.iloc[trn_idx], train_csv.iloc[val_idx]
    if DEBUG:
        train_df = train_df[:40]
        valid_df = valid_df[:40]

    # train_df, val_df = train_test_split(train_csv, test_size=0.1, random_state=2018, stratify=train_csv.diagnosis)
    # train_df.reset_index(drop=True, inplace=True)
    # val_df.reset_index(drop=True, inplace=True)

    trainset = MyDataset(train_df, transform=train_transform)
    train_loader = torch.utils.data.DataLoader(trainset, batch_size=32, shuffle=True, num_workers=4)
    valset = MyDataset(valid_df, transform=train_transform)
    val_loader = torch.utils.data.DataLoader(valset, batch_size=32, shuffle=False, num_workers=4)

    model = EfficientNet.from_name('efficientnet-b5')
    model.load_state_dict(torch.load('../../download/efficientnet-b5-586e6cc6.pth'))
    in_features = model._fc.in_features
    model._fc = nn.Linear(in_features, num_classes)
    model.cuda()

    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=1e-5)
    criterion = nn.MSELoss()
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.1)
    model, optimizer = amp.initialize(model, optimizer, opt_level=AMP, verbosity=0)

    best_avg_loss = 100.0
    no_improve_step = 1

    for epoch in range(n_epochs):
        print('lr:', scheduler.get_lr()[0])
        start_time = time.time()
        avg_loss = train_model(train_loader)
        avg_val_loss, fold_pred = val_model(val_loader)
        elapsed_time = time.time() - start_time
        print('Epoch {}/{} \t loss={:.4f} \t val_loss={:.4f} \t time={:.2f}s'.format(
            epoch + 1, n_epochs, avg_loss, avg_val_loss, elapsed_time))
        scheduler.step()

        if avg_val_loss < best_avg_loss:
            best_avg_loss = avg_val_loss
            torch.save(model.state_dict(), 'efficientnet_weight_best_fold_{}.pt'.format(n_fold))
            no_improve_step = 1
        else:
            no_improve_step += 1

        if avg_val_loss >= best_avg_loss and no_improve_step >= es:
            print('early stopping after {} epoch no improvement'.format(es))
            print('best dev loss: {}'.format(best_avg_loss))
            y_valid_pred[val_idx] = fold_pred.cpu()
            y_test_pred += test_model(test_loader) / folds.n_splits
            break

y_valid_pred_sub, y_test_pred_sub, cv = score(y_valid_pred, y_test_pred, train_csv.diagnosis)

train_csv['reg_pred'] = y_valid_pred
train_csv['diagnosis'] = y_valid_pred_sub.astype(int)
train_csv[['id_code', 'reg_pred']].to_csv('efficientnet_5fold_{}_oof_reg.csv'.format(cv), index=False)
train_csv[['id_code', 'diagnosis']].to_csv('efficientnet_5fold_{}_oof.csv'.format(cv), index=False)

sub = pd.read_csv('../input/submission.csv')
sub['reg_pred'] = y_test_pred
sub['diagnosis'] = y_test_pred_sub.astype(int)
sub[['id_code', 'reg_pred']].to_csv('efficientnet_5fold_{}_sub_reg.csv'.format(cv), index=False)
sub[['id_code', 'diagnosis']].to_csv('efficientnet_5fold_{}_sub.csv'.format(cv), index=False)
```

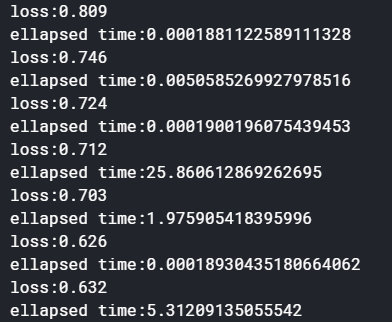

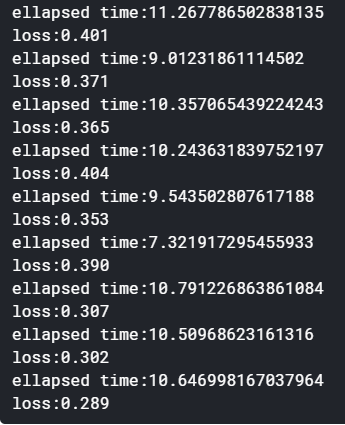

The problem I encountered was:

GPU memory is allocated as expected during training, but GPU-Util always stays at 0% and CPU usage is very high.

It looks like the model occupies GPU memory, but the training itself runs on the CPU. What is the reason for this?
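
To narrow this down, it may help to measure how much of each epoch is spent waiting for the next batch versus running the model step. The sketch below is only an illustration of that measurement, not a diagnosis: `split_epoch_time`, `slow_batches`, and `fast_step` are hypothetical names that are not part of the script above, and the sleeps are assumed stand-ins for the real `DataLoader` and training step.

```python
import time


def split_epoch_time(batches, step):
    """Return (seconds spent waiting for batches, seconds spent inside step)."""
    wait = 0.0
    compute = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # time here is spent in the input pipeline
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)  # time here is spent in the model step
        t2 = time.perf_counter()
        wait += t1 - t0
        compute += t2 - t1
    return wait, compute


def slow_batches(n, delay):
    """Hypothetical stand-in for a loader whose per-item preprocessing is slow."""
    for i in range(n):
        time.sleep(delay)
        yield i


def fast_step(batch):
    """Hypothetical stand-in for a quick forward/backward pass."""
    time.sleep(0.001)


wait, compute = split_epoch_time(slow_batches(5, 0.02), fast_step)
print("waiting on data: {:.3f}s, model step: {:.3f}s".format(wait, compute))
```

With a real model, the step function would need to call `torch.cuda.synchronize()` before returning, because CUDA kernels are launched asynchronously and the timing would otherwise under-count the GPU work. If the waiting time dominates, the per-image work in `__getitem__` would be a plausible place to look.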