Thank you for responding, ptrblck.

I've tried using random tensor inputs, and GPU utilization reaches 100%.

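```python
import time

import torch

# Testing whether the GPU process is working
a = torch.rand(20000, 20000).cuda()

end = time.time()
while True:
    print(time.time())
    # Element-wise ops on the 20000x20000 tensor keep the GPU busy
    a += 1
    a -= 1
    end = time.time()
```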

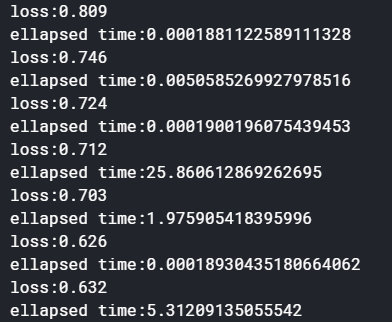
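CUDA kernels are launched asynchronously, so reading `time.time()` right after `a += 1` mostly measures launch overhead rather than the GPU work itself. Below is a minimal sketch of a timing helper that synchronizes before each clock read. The name `time_op` is hypothetical; on a GPU you would pass `torch.cuda.synchronize` as `sync`.

```python
import time
from typing import Callable, List, Optional


def time_op(op: Callable[[], object], iters: int = 10,
            sync: Optional[Callable[[], None]] = None) -> List[float]:
    """Return the wall-clock duration (seconds) of each call to `op`.

    `sync` is called before starting and before stopping the clock, so any
    queued asynchronous work (e.g. CUDA kernels) is included in the timing.
    """
    durations = []
    for _ in range(iters):
        if sync is not None:
            sync()  # wait for previously queued work before starting the clock
        start = time.perf_counter()
        op()
        if sync is not None:
            sync()  # wait for the work launched by `op` to finish
        durations.append(time.perf_counter() - start)
    return durations
```

For the tensor in the test above, a call could look like `time_op(lambda: a.add_(1).sub_(1), sync=torch.cuda.synchronize)`; the in-place `add_`/`sub_` avoid rebinding `a` inside the lambda.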

However, when profiling the data loading time, I get erratic elapsed times.
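One way to narrow down erratic timings is to separate the time spent waiting for the next batch from the time spent processing it. The sketch below assumes the loader is any iterable (a `torch.utils.data.DataLoader` qualifies); the function `profile_loader` and its `step` parameter are hypothetical. If `step` launches GPU work, it should call `torch.cuda.synchronize()` before returning, otherwise that work may be billed to the next wait. With `num_workers > 0`, the first few wait times may also include worker start-up cost.

```python
import time
from typing import Any, Callable, Iterable, List, Optional, Tuple


def profile_loader(loader: Iterable[Any], num_batches: int,
                   step: Optional[Callable[[Any], None]] = None
                   ) -> Tuple[List[float], List[float]]:
    """Time how long each batch takes to arrive versus how long it takes to process.

    Returns (wait_times, step_times) in seconds, one entry per batch.
    """
    wait_times, step_times = [], []
    it = iter(loader)
    for _ in range(num_batches):
        start = time.perf_counter()
        try:
            batch = next(it)  # time spent waiting on the loader
        except StopIteration:
            break  # loader ran out before num_batches
        fetched = time.perf_counter()
        if step is not None:
            step(batch)  # e.g. a forward/backward pass
        done = time.perf_counter()
        wait_times.append(fetched - start)
        step_times.append(done - fetched)
    return wait_times, step_times
```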

Results for the actual data loader: