Hi everyone!

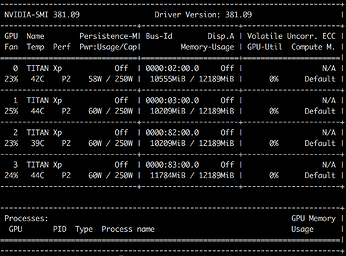

I was working in a Jupyter notebook and interrupted the process because of a problem in my model. Even though no process appears to be running, the GPU memory is not being freed. I tried `nvidia-smi --gpu-reset`, but it fails with the following error:

Unable to reset this GPU because it’s being used by some other process (e.g. CUDA application, graphics application like X server, monitoring application like other instance of nvidia-smi). Please first kill all processes using this GPU and all compute applications running in the system (even when they are running on other GPUs) and then try to reset the GPU again.

Terminating early due to previous errors.

What is the correct way to free the memory? Thanks!