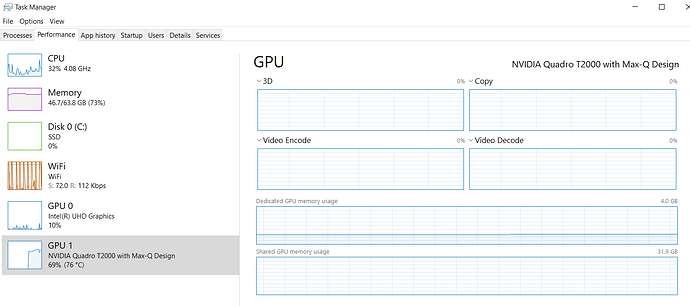

Thank you so much again for your advice which was very helpful. IAfter Minst data set, I tried to run a Deep Learning model consist of 70 leyers of LSTM each of with 100 hidden size and the NVIDIA GPU contributed for 70% of computaion in less than a minute!. Here is the plot:

I have a Pyro model in which I use probabilistic terms for the LSTM blocks. When I follow the same process to move the model, parameters, and data to the device, Python gives the error below:

```
RuntimeError: Input and parameter tensors are not at the same device, found input tensor at cuda:0 and parameter tensor at cpu
```

However, it is the same model, just ported to Pyro for Bayesian deep learning. This is my Pyro model:

```python
import torch
import torch.nn as nn
import pyro
import pyro.distributions as dist
from pyro.nn import PyroModule, PyroSample


class Model(PyroModule):
    def __init__(self, input_size=1, num_classes=1, hidden_size=3, num_layers=1, prior_scale=50.0):
        super(Model, self).__init__()
        self.num_classes = num_classes
        self.num_layers = num_layers
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.activation = nn.ReLU()  # or nn.ReLU()

        # Correctly initialize the LSTM layer with bidirectional=False
        self.lstm = PyroModule[nn.LSTM](input_size, hidden_size, num_layers, batch_first=True, bidirectional=False)
        self.linear = PyroModule[nn.Linear](hidden_size, 128)  # Adjusted for unidirectional LSTM
        self.fc = PyroModule[nn.Linear](128, num_classes)

        # Initialize weights and biases for each layer
        # Input to hidden layer
        self.lstm.weight_ih_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size, input_size]).to_event(2))
        self.lstm.bias_ih_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size]).to_event(1))
        # Hidden to hidden layer
        self.lstm.weight_hh_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size, hidden_size]).to_event(2))
        self.lstm.bias_hh_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size]).to_event(1))
        self.linear.weight = PyroSample(dist.Normal(0., prior_scale).expand([128, hidden_size]).to_event(2))
        self.linear.bias = PyroSample(dist.Normal(0., prior_scale).expand([128]).to_event(1))
        self.fc.weight = PyroSample(dist.Normal(0., prior_scale).expand([num_classes, 128]).to_event(2))
        self.fc.bias = PyroSample(dist.Normal(0., prior_scale).expand([num_classes]).to_event(1))

    def forward(self, x, y=None, noise_shape=0.5):
        # Initialize hidden state and cell state
        h_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)  # hidden state
        c_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)  # internal state
        output, (hn, cn) = self.lstm(x, (h_0, c_0))  # LSTM with input, hidden, and internal state
        hn = hn.view(-1, self.hidden_size)  # reshaping the data for the dense layer next
        out = self.activation(hn)
        out = self.linear(out)
        out = self.activation(out)
        mu = self.fc(out)
        sigma = pyro.sample("sigma", dist.Gamma(noise_shape, 1))  # infer the response noise
        with pyro.plate("data", x.shape[0]):
            obs = pyro.sample("obs", dist.Normal(mu, sigma * sigma), obs=y)
        return mu
```

And this is my setup for running on the GPU:

```python
import torch
from pyro.infer import MCMC, NUTS
from torch.utils.data import DataLoader, TensorDataset

# Check for GPU availability
use_cuda = torch.cuda.is_available()
device = torch.device("cuda" if use_cuda else "cpu")

# Initialize the Bayesian LSTM model
input_size = X_test_tensors_final.shape[2]  # Number of features
hidden_size = 2  # Number of features in hidden state
num_layers = 1  # Number of stacked LSTM layers
model = Model(input_size=input_size, num_classes=1, hidden_size=hidden_size,
              num_layers=num_layers, prior_scale=50.0).to(device)

# Set up the MCMC sampler
nuts_kernel = NUTS(model)
mcmc = MCMC(nuts_kernel, num_samples=50, warmup_steps=50)

# Create a combined dataset
dataset = TensorDataset(X_train_tensors_final, y_train_tensors)

# Set the batch size
batch_size = 400

# Create a DataLoader
data_loader = DataLoader(dataset, batch_size=batch_size, shuffle=False)

# Initialize an empty list to store MCMC results
all_mcmc_results = []

# Iterate over the batches
for batch_idx, (X_batch, y_batch) in enumerate(data_loader):
    # Move data to GPU if available
    X_batch = X_batch.to(device)
    y_batch = y_batch.to(device)

    # Run your MCMC simulation
    mcmc_result = mcmc.run(X_batch, y_batch)

    # Append the result to the list
    all_mcmc_results.append(mcmc_result)
```