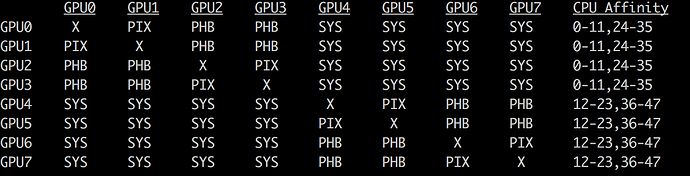

I have an 8-GPU server, but training with torchvision on it is no faster than on a 4-GPU server.

I checked the peer-to-peer (P2P) bandwidth and the topology of the GPUs. The bandwidth between the first four GPUs and the second four GPUs seems quite low. Could this be the problem?
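One rough way to see whether a slow link between the two groups could cancel out the extra GPUs is to estimate gradient all-reduce time with a simple ring model. In a ring all-reduce over N GPUs, each GPU sends about 2(N-1)/N of the gradient buffer, and the whole ring runs at the speed of its slowest hop. The sketch below is a bandwidth-only estimate under those assumptions: it ignores latency, compute/communication overlap, and whatever ring or tree layout NCCL actually picks. The helper names (`ring_bottleneck_gbps`, `ring_allreduce_seconds`, `toy_matrix`) and the 10 GB/s vs 3 GB/s figures are hypothetical, not measurements from this server. Replace them with the values from your own P2P bandwidth matrix.

```python
from typing import List, Sequence


def ring_bottleneck_gbps(bw: Sequence[Sequence[float]], ring: Sequence[int]) -> float:
    """Slowest hop (GB/s) of a ring that visits GPUs in `ring` order and wraps around."""
    return min(bw[ring[i]][ring[(i + 1) % len(ring)]] for i in range(len(ring)))


def ring_allreduce_seconds(grad_bytes: float, n_gpus: int, bottleneck_gbps: float) -> float:
    """Bandwidth term of a ring all-reduce: each GPU sends 2(N-1)/N of the buffer."""
    return 2 * (n_gpus - 1) / n_gpus * grad_bytes / (bottleneck_gbps * 1e9)


def toy_matrix(fast: float, slow: float, n: int = 8, group: int = 4) -> List[List[float]]:
    """Hypothetical bandwidth matrix: `fast` inside each group of `group` GPUs, `slow` across groups."""
    return [
        [0.0 if i == j else (fast if i // group == j // group else slow) for j in range(n)]
        for i in range(n)
    ]


if __name__ == "__main__":
    bw = toy_matrix(fast=10.0, slow=3.0)  # hypothetical GB/s values
    grad_bytes = 100e6                    # hypothetical ~100 MB of fp32 gradients

    # 4 GPUs stay inside the fast group; 8 GPUs must cross the slow link twice.
    t4 = ring_allreduce_seconds(grad_bytes, 4, ring_bottleneck_gbps(bw, [0, 1, 2, 3]))
    t8 = ring_allreduce_seconds(grad_bytes, 8, ring_bottleneck_gbps(bw, list(range(8))))
    print(f"4 GPUs: {t4 * 1e3:.1f} ms, 8 GPUs: {t8 * 1e3:.1f} ms per all-reduce")
```

With these made-up numbers the script prints `4 GPUs: 15.0 ms, 8 GPUs: 58.3 ms per all-reduce`. If the measured cross-group bandwidth is several times lower than the intra-group bandwidth and the per-step compute time is of the same order as the estimated all-reduce time, communication could plausibly eat most of the gain from going from 4 to 8 GPUs.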