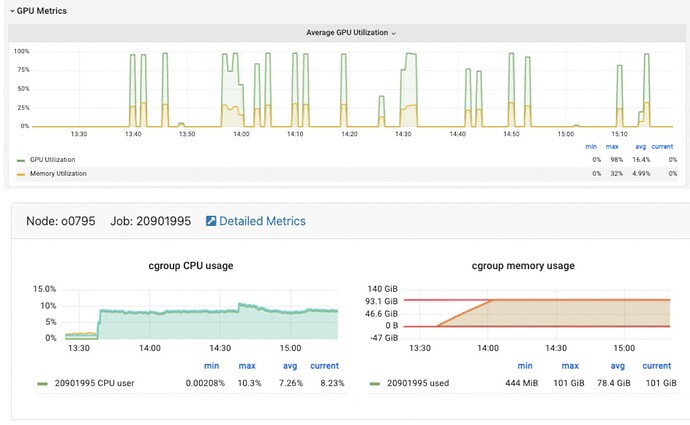

Hi all, I'm very new to this, so sorry if this is a stupid question. I'm using a model from GitHub to train on my own data. The total input is ~300GB and is loaded with `torchvision.datasets.ImageFolder`. I notice that CPU memory usage keeps building up while GPU utilization stays very low (please see the metrics in the screenshot). Is this because my input data is too large to be loaded into CPU memory? Are there any suggestions for improving the training speed (GPU utilization)? Thanks a lot!

`ImageFolder` loads samples lazily and won't preload the entire dataset. Based on the posted screenshot, host memory seems to peak at ~90GB and then stay at that level during training, which might be expected depending on the actual training script. The low GPU utilization might be caused by a bottleneck, e.g. in the data loading pipeline. Try profiling your code to narrow down the bottleneck and, if data loading turns out to be the slow part, try increasing the number of workers (`num_workers` in the `DataLoader`) to see whether loading benefits from more parallelism.
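A minimal sketch of the profiling step, using only the standard library. It times how long each iteration waits for the next batch versus how long the training step itself takes. `profile_loop`, `slow_loader` and `fast_step` are hypothetical names for illustration; in a real run you would pass your own `DataLoader` as `loader` and your forward/backward/optimizer step as `step`.

```python
import time
from typing import Callable, Iterable, Tuple


def profile_loop(loader: Iterable, step: Callable[[object], None],
                 max_iters: int = 50) -> Tuple[float, float]:
    """Return (seconds waiting on the loader, seconds spent in step).

    Hypothetical helper: `loader` can be any iterable, e.g. a
    torch.utils.data.DataLoader. If `step` runs on a GPU, call
    torch.cuda.synchronize() at its end; otherwise asynchronous CUDA
    kernels can make the step look cheaper than it really is.
    """
    data_time = 0.0
    compute_time = 0.0
    it = iter(loader)
    for _ in range(max_iters):
        t0 = time.perf_counter()
        try:
            batch = next(it)          # wait for the next batch
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)                   # run the training step
        t2 = time.perf_counter()
        data_time += t1 - t0
        compute_time += t2 - t1
    return data_time, compute_time


if __name__ == "__main__":
    # Toy stand-ins: a slow loader and a fast step, to show the readout.
    def slow_loader(n=10):
        for i in range(n):
            time.sleep(0.01)          # simulates decoding an image from disk
            yield i

    def fast_step(batch):
        time.sleep(0.001)             # simulates a quick GPU step

    d, c = profile_loop(slow_loader(), fast_step)
    print(f"data: {d:.3f}s  compute: {c:.3f}s  "
          f"data share: {d / (d + c):.0%}")
```

If the data share dominates, the GPU is mostly idle waiting for input. Increasing `num_workers` on the `DataLoader` (and setting `pin_memory=True` when training on a GPU), then re-running the measurement, shows whether more parallel loading helps. For a more detailed breakdown, `torch.profiler` can be used instead of this hand-rolled timer.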