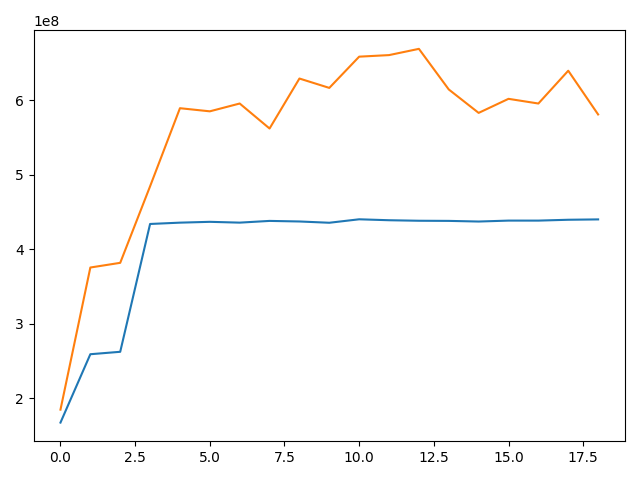

During the first ~10 iterations, the GPU memory increases; after that it varies, but it seems to stay within the same range. When I first wrote this topic, I had measured the GPU usage only during the first epochs, hence the confusion.

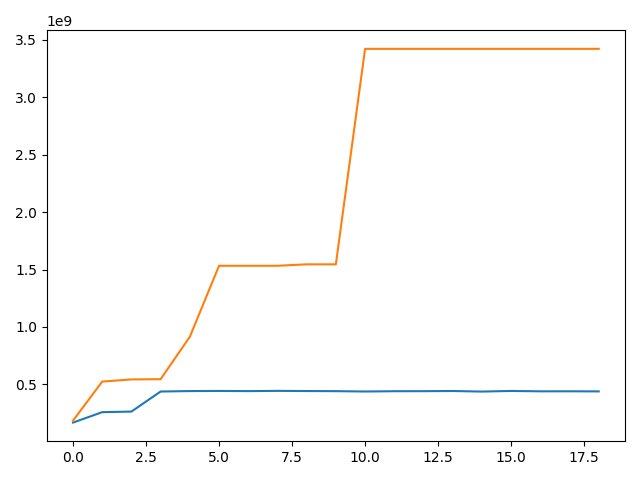

Here is a plot of the evolution of the allocated memory (blue) and the reserved memory (orange), as reported by torch.cuda.memory_allocated() and torch.cuda.memory_reserved() respectively, when I don’t call torch.cuda.empty_cache() at the end of each iteration.
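A minimal sketch of how such per-iteration measurements can be collected, assuming one sample is taken at the end of every iteration. The names `MemoryLog`, `cuda_readers` and `train_step` are hypothetical and only for illustration; the only PyTorch calls used are torch.cuda.memory_allocated(), torch.cuda.memory_reserved() and torch.cuda.synchronize().

```python
from typing import Callable, List, Tuple

MIB = 1024 ** 2  # both counters report bytes


def cuda_readers() -> Tuple[Callable[[], int], Callable[[], int]]:
    """Return the two PyTorch counters (torch is imported lazily)."""
    import torch

    return torch.cuda.memory_allocated, torch.cuda.memory_reserved


class MemoryLog:
    """Collects (step, allocated MiB, reserved MiB) samples. Hypothetical helper."""

    def __init__(self, read_allocated: Callable[[], int],
                 read_reserved: Callable[[], int]) -> None:
        self._read_allocated = read_allocated
        self._read_reserved = read_reserved
        self.samples: List[Tuple[int, float, float]] = []

    def record(self, step: int) -> Tuple[int, float, float]:
        """Read both counters once and store the sample for this step."""
        sample = (step,
                  self._read_allocated() / MIB,
                  self._read_reserved() / MIB)
        self.samples.append(sample)
        return sample

    def after_warmup(self, warmup_steps: int) -> List[Tuple[int, float, float]]:
        """Drop the first iterations, where memory is still growing."""
        return [s for s in self.samples if s[0] >= warmup_steps]


# Usage inside a training loop (train_step and loader are placeholders):
#
#   import torch
#   log = MemoryLog(*cuda_readers())
#   for step, batch in enumerate(loader):
#       train_step(batch)
#       torch.cuda.synchronize()   # wait for pending kernels before reading
#       log.record(step)
#   steady = log.after_warmup(10)  # ignore the ~10 warm-up iterations
```

Passing the readers in as callables keeps the logger independent of the device, so the same class can be exercised without a GPU.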

Here is a similar plot when I call torch.cuda.empty_cache() at the end of each iteration:

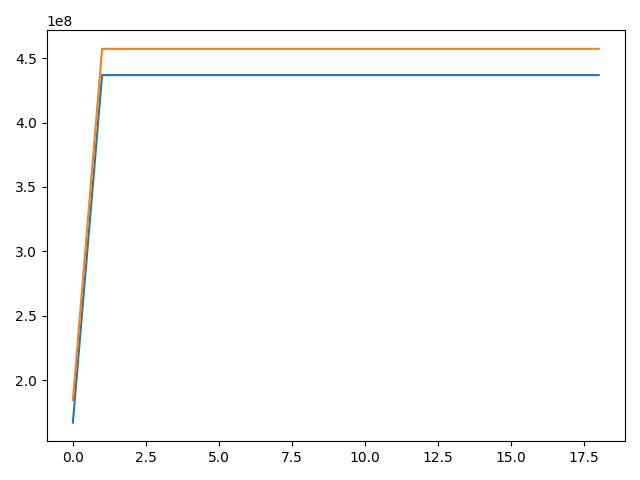

Finally, here is the GPU memory usage when I don’t use apex and I call empty_cache() at the end of each epoch:

Both the allocated and the reserved memory are higher when using apex. I used opt_level O1.

I am aware that calling torch.cuda.empty_cache() does not reduce the memory usage, but I assumed that it would give me more accurate measurements (please tell me if that is not the case). Before this answer, I only measured the GPU memory usage by looking at nvidia-smi.

Do these elements help you see what my problem could be?

Thanks a lot,

Alain