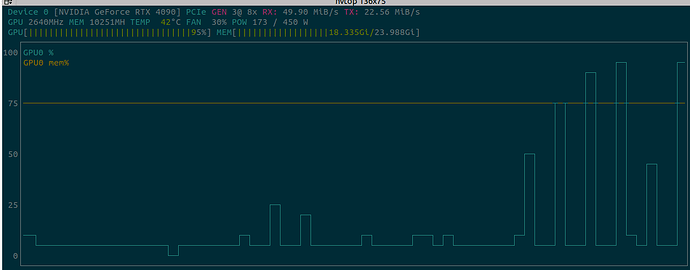

I have an image dataset in HDF5 format. When I load the dataset and begin training, GPU utilization stays below 5%, although memory utilization is a reasonable 75%. I have been unable to narrow down the cause, and I suspect the HDF5 data format is causing a bottleneck.

Here is the data loader:

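    from io import BytesIO

    import h5py
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader

    class ImageDataset(Dataset):
        def __init__(self, filename, transform=None):
            self.file_path = filename
            self.archive = None  # opened lazily in _get_archive()
            self.transform = transform
            # Open the file once just to read the number of samples.
            with h5py.File(filename, 'r', libver='latest', swmr=True) as f:
                self.length = len(f['dataset'])

        def _get_archive(self):
            # Open the file on first access so each process that calls
            # __getitem__ gets its own handle.
            if self.archive is None:
                self.archive = h5py.File(self.file_path, 'r', libver='latest', swmr=True)
                assert self.archive.swmr_mode
            return self.archive

        def __len__(self):
            return self.length

        def __getitem__(self, idx):
            archive = self._get_archive()
            # Each index entry holds three byte strings: two group keys and a frame number.
            indices = archive['dataset'][str(idx)]
            indices = [indices[0].decode('utf-8'), indices[1].decode('utf-8'), int(indices[2].decode('utf-8'))]
            # The image is stored as encoded bytes and decoded with PIL.
            image_bin = archive[indices[0]][indices[1]]['scene_left_0'][indices[2]]
            img = Image.open(BytesIO(image_bin))
            if self.transform:
                img = self.transform(img)
            return img

        def close(self):
            # Guard against close() being called before the file was ever opened.
            if self.archive is not None:
                self.archive.close()
                self.archive = None

    training_data = ImageDataset('/home/data/data.h5', transform=transform)
    train_dataloader = DataLoader(training_data, batch_size=152, shuffle=True, num_workers=12, pin_memory=True)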

The GPU utilization looks like the attached image at the top of this post.
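To check whether the input pipeline is really the bottleneck, one option would be to time the data loader on its own, with no model or GPU involved, and compare the resulting samples per second against what the training loop achieves. Below is a minimal sketch of that idea. `measure_loader_throughput` is a hypothetical helper name of my own (it is not part of PyTorch), and it assumes each batch supports `len()` and that `len(batch)` is the batch size, which holds for a single tensor batch.

```python
import time
from itertools import islice


def measure_loader_throughput(loader, max_batches=50):
    """Iterate over `loader` only (no model, no GPU) and time it.

    Returns (batches_read, samples_read, samples_per_second).
    """
    n_batches = 0
    n_samples = 0
    start = time.perf_counter()
    # islice stops after max_batches so the check finishes quickly.
    for batch in islice(loader, max_batches):
        n_samples += len(batch)  # assumption: len(batch) is the batch size
        n_batches += 1
    elapsed = time.perf_counter() - start
    rate = n_samples / elapsed if elapsed > 0 else float("inf")
    return n_batches, n_samples, rate


# Intended usage with the loader from this post (not executed here):
# n_batches, n_samples, rate = measure_loader_throughput(train_dataloader)
# print(f"{n_batches} batches, {n_samples} samples, {rate:.1f} samples/s")
```

If the rate measured this way is about the same as the rate seen during training, the GPU is most likely waiting on data loading. Repeating the measurement with `transform=None`, or with a different `num_workers`, should help separate the cost of HDF5 reads and image decoding from the cost of the transforms.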