m_mandel

(M Mandel)

1

Hi,

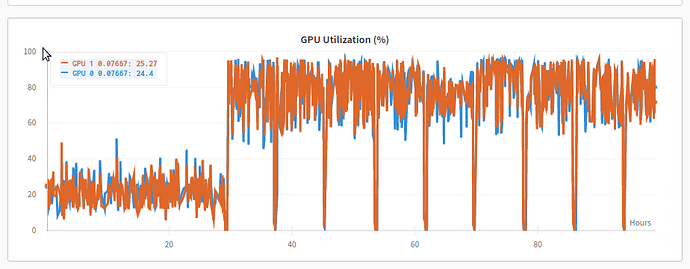

I am running a DDP run with 2 GPUs, and at every 10 epochs I evaluate my test data.

For some reason, the first 10 epochs run very slow - until the evaluation process occurs. Can anyone point me to the reason for this?

Attaching visualization of GPU utilization:

My train/test dataloaders use utils.data.distributed.DistributedSampler as samplers

Can you share a minimal reproducible example?

m_mandel

(M Mandel)

3

At the moment, my code is a bit too complex to share.

I was hoping maybe someone might be able to point in the right direction.

m_mandel

(M Mandel)

4

Hi,

Solved! Sort of…

Apparently, calling torch.set_num_threads(1) before training starts solves the problem, though I have no idea why.
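
For reference, here is a minimal sketch of that workaround. It assumes the call is made once in each DDP worker process, before the dataloaders and training loop are set up. `configure_cpu_threads` is a hypothetical helper name used only for illustration; it is not part of PyTorch and not the actual training code.

```python
import torch


def configure_cpu_threads(num_threads: int = 1) -> int:
    """Cap the intra-op CPU thread pool for this process.

    Hypothetical helper: wraps torch.set_num_threads and returns the
    value PyTorch reports afterwards, so the setting can be logged.
    """
    torch.set_num_threads(num_threads)
    return torch.get_num_threads()


if __name__ == "__main__":
    # Assumption: this runs at the top of each DDP worker process,
    # before any dataloader or model is created.
    active = configure_cpu_threads(1)
    print(f"intra-op CPU threads in this process: {active}")
```

Logging the returned value in each process is a cheap way to confirm the setting actually took effect on every rank.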

If anyone has any light to shed on this matter, it would be greatly appreciated!

Thanks

mrshenli

(Shen Li)

5

m_mandel

(M Mandel)

6

Thank you! I will look into this.