Hi there!

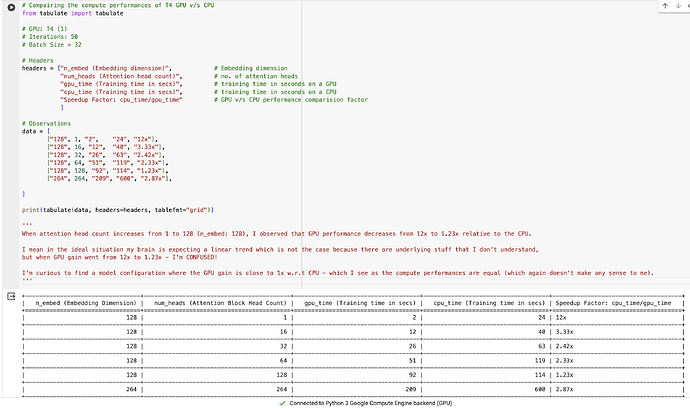

I ran a small experiment to see how running on a GPU versus a CPU affects the training time of a transformer-based language model. I varied the number of attention heads to see how that changes the performance gap.

Here’s what I found:

As the number of attention heads increases from 1 to 128 (with `n_embed` fixed at 128), the GPU’s speedup over the CPU drops from 12x to 1.23x.

Intuitively I expected a roughly linear trend. That’s not what happens, and there are clearly underlying factors I don’t understand, but seeing the GPU gain fall from 12x to 1.23x has me CONFUSED!
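One hypothesis I haven’t verified against the notebook: with `n_embed` fixed at 128, each head gets `head_size = n_embed // n_head` dimensions, so at 128 heads every head works on 1-dimensional vectors. The back-of-envelope sketch below assumes a nanoGPT-style setup where each head is a separate module with its own key/query/value layers. The `attention_cost` helper and the `batch`/`block_size` defaults are hypothetical. Under those assumptions, the total attention FLOPs stay the same as the head count grows, while the number of separate matmul calls grows linearly. More, smaller operations would leave the GPU spending a larger share of its time on per-call overhead rather than useful compute.

```python
def attention_cost(n_embed, n_head, batch=32, block_size=8):
    """Rough cost of one multi-head self-attention layer.

    Assumes a hypothetical implementation where each head is its own module
    with separate key/query/value Linear layers (no fused QKV projection).
    Returns (head_size, per-head FLOPs summed over heads, matmul calls).
    """
    if n_embed % n_head != 0:
        raise ValueError("n_embed must be divisible by n_head")
    head_size = n_embed // n_head
    B, T, C = batch, block_size, n_embed

    # Per head: 3 projections (C -> head_size), each ~2*B*T*C*head_size FLOPs.
    proj_flops = 3 * 2 * B * T * C * head_size
    # Per head: q @ k^T and weights @ v, each ~2*B*T*T*head_size FLOPs.
    attn_flops = 2 * (2 * B * T * T * head_size)
    # Summed over heads; the output projection is not counted here.
    total_flops = n_head * (proj_flops + attn_flops)

    # 5 matmul-style calls per head, plus 1 for the shared output projection.
    matmul_calls = 5 * n_head + 1
    return head_size, total_flops, matmul_calls


if __name__ == "__main__":
    for h in (1, 2, 4, 8, 16, 32, 64, 128):
        hs, flops, calls = attention_cost(128, h)
        print(f"n_head={h:>3}  head_size={hs:>3}  flops={flops:>10}  matmul_calls={calls:>3}")
```

For example, `attention_cost(128, 1)` and `attention_cost(128, 128)` report the same FLOP count, but the second issues 641 matmul calls instead of 6, each on much smaller tensors.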

I’m now curious whether there’s a model configuration where the GPU speedup is close to 1x relative to the CPU, i.e. where both deliver the same performance (which doesn’t make any sense to me).

Here’s a link to the Google Colab notebook: Google Colab

I’d appreciate it if anyone could share their thoughts on what’s behind this trend.

Thanks!