I ran into this weird behavior of autograd when trying to initialize weights.

Here is a minimal case:

```python
import torch

print("Trial 1: with python float")
# scale the random init by a plain Python float
w = torch.randn(3, 5, requires_grad=True) * 0.01
x = torch.randn(5, 4, requires_grad=True)
y = torch.matmul(w, x).sum(1)
y.backward(torch.ones(3))
print("w.requires_grad:", w.requires_grad)
print("x.requires_grad:", x.requires_grad)
print("w.grad", w.grad)
print("x.grad", x.grad)

print("Trial 2: with on-the-go torch scalar")
# scale the random init by an unnamed scalar tensor
w = torch.randn(3, 5, requires_grad=True) * torch.tensor(0.01, requires_grad=True)
x = torch.randn(5, 4, requires_grad=True)
y = torch.matmul(w, x).sum(1)
y.backward(torch.ones(3))
print("w.requires_grad:", w.requires_grad)
print("x.requires_grad:", x.requires_grad)
print("w.grad", w.grad)
print("x.grad", x.grad)

print("Trial 3: with named torch scalar")
# scale the random init by a named scalar tensor t
t = torch.tensor(0.01, requires_grad=True)
w = torch.randn(3, 5, requires_grad=True) * t
x = torch.randn(5, 4, requires_grad=True)
y = torch.matmul(w, x).sum(1)
y.backward(torch.ones(3))
print("w.requires_grad:", w.requires_grad)
print("x.requires_grad:", x.requires_grad)
print("w.grad", w.grad)
print("x.grad", x.grad)
```
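To see which tensors autograd treats as leaves in Trial 3, the sketch below reruns that setup and prints `is_leaf` next to whether `.grad` was filled in. The name `base` is introduced here only to keep a handle on the `torch.randn` tensor, which is anonymous in the original code. Recent PyTorch versions may also emit a UserWarning when `.grad` is read on a non-leaf tensor.

```python
import torch

# Trial 3 setup, but keeping a reference to the random tensor ("base" is a hypothetical name)
base = torch.randn(3, 5, requires_grad=True)  # leaf: created directly by the user
t = torch.tensor(0.01, requires_grad=True)    # leaf: created directly by the user
w = base * t                                  # non-leaf: output of a multiplication
x = torch.randn(5, 4, requires_grad=True)     # leaf

y = torch.matmul(w, x).sum(1)
y.backward(torch.ones(3))

# Only leaf tensors get their .grad populated by default
for name, tensor in [("base", base), ("t", t), ("w", w), ("x", x)]:
    print(name, "is_leaf:", tensor.is_leaf, "| grad is None:", tensor.grad is None)
```

In Trial 1 and Trial 2 the leaves still receive gradients; there is just no Python name left that refers to them.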

The output is (the values differ between runs, since the tensors are random):

```
Trial 1: with python float
w.requires_grad: True
x.requires_grad: True
w.grad None
x.grad tensor([[-0.0267, -0.0267, -0.0267, -0.0267],
        [ 0.0040,  0.0040,  0.0040,  0.0040],
        [-0.0034, -0.0034, -0.0034, -0.0034],
        [-0.0010, -0.0010, -0.0010, -0.0010],
        [ 0.0215,  0.0215,  0.0215,  0.0215]])
Trial 2: with on-the-go torch scalar
w.requires_grad: True
x.requires_grad: True
w.grad None
x.grad tensor([[-0.0028, -0.0028, -0.0028, -0.0028],
        [ 0.0130,  0.0130,  0.0130,  0.0130],
        [-0.0027, -0.0027, -0.0027, -0.0027],
        [ 0.0054,  0.0054,  0.0054,  0.0054],
        [ 0.0133,  0.0133,  0.0133,  0.0133]])
Trial 3: with named torch scalar
w.requires_grad: True
x.requires_grad: True
w.grad None
x.grad tensor([[ 0.0227,  0.0227,  0.0227,  0.0227],
        [ 0.0101,  0.0101,  0.0101,  0.0101],
        [-0.0200, -0.0200, -0.0200, -0.0200],
        [-0.0052, -0.0052, -0.0052, -0.0052],
        [-0.0031, -0.0031, -0.0031, -0.0031]])
```

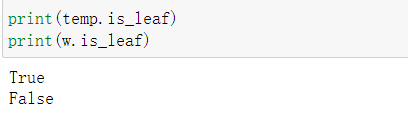

As you can see, even though `w.requires_grad` is `True` in all three trials, `w.grad` is still `None` (while `x.grad` is populated). Is this the intended behavior?
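For comparison, PyTorch populates `.grad` only for leaf tensors by default. `requires_grad=True` on a non-leaf such as `w` means gradients are tracked *through* it toward the leaves it was computed from, which is why the flag stays `True`. If the gradient of the intermediate `w` itself is wanted, `Tensor.retain_grad()` can be called before `backward()`. A minimal sketch based on Trial 1:

```python
import torch

w = torch.randn(3, 5, requires_grad=True) * 0.01  # non-leaf: result of a multiplication
w.retain_grad()                                   # ask autograd to also store w.grad
x = torch.randn(5, 4, requires_grad=True)

y = torch.matmul(w, x).sum(1)
y.backward(torch.ones(3))

print("w.is_leaf:", w.is_leaf)
print("w.grad:", w.grad)
# y[k] = sum_j sum_i w[k, i] * x[i, j], so dy[k]/dw[k, i] = sum_j x[i, j]:
# every row of w.grad should equal x.sum(1)
print(torch.allclose(w.grad, x.detach().sum(1).expand(3, 5)))
```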

I know that adding `w.requires_grad_()` can work around this, but shouldn't autograd at least set the tensor's `requires_grad` to `False`?
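If the goal is simply a small random initialization whose gradient lands in `w.grad`, one approach is to make the scaled tensor a leaf itself. The sketch below shows two common patterns (the name `w2` is illustrative only): calling `requires_grad_()` on the already-scaled result, or scaling a leaf in place under `torch.no_grad()` so the multiplication is not recorded in the graph.

```python
import torch

# Option 1: scale first (no grad tracking yet), then mark the result as a leaf
w = (torch.randn(3, 5) * 0.01).requires_grad_()

# Option 2: create the leaf, then scale it in place without recording the op
w2 = torch.randn(3, 5, requires_grad=True)
with torch.no_grad():
    w2.mul_(0.01)

x = torch.randn(5, 4, requires_grad=True)
for weight in (w, w2):
    y = torch.matmul(weight, x).sum(1)
    y.backward(torch.ones(3))  # x.grad accumulates across both calls
    print("is_leaf:", weight.is_leaf, "| grad is None:", weight.grad is None)
```

Option 2 mirrors how in-place weight initialization is usually done on existing parameters, since the leaf object is kept and only its values change.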