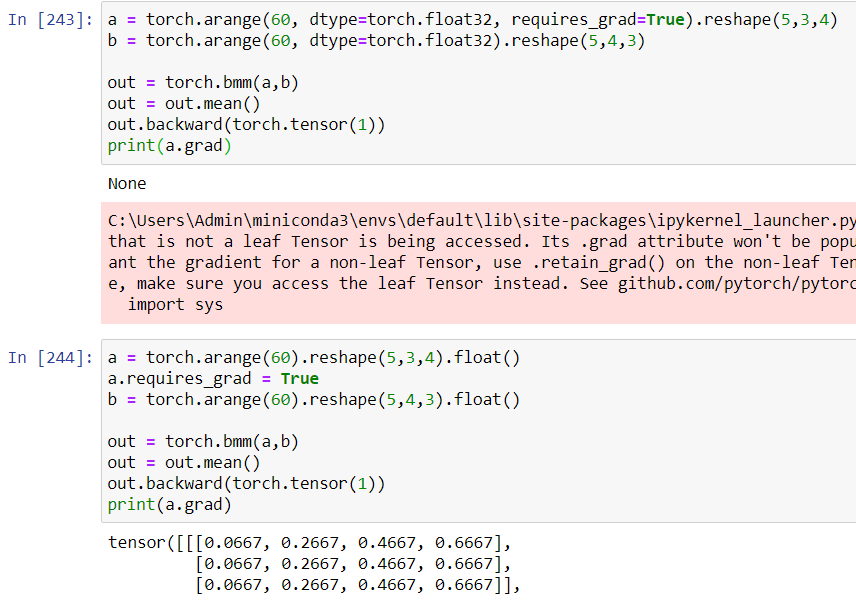

What is the difference? Why is tensor “a” in the first case not a leaf, but in the second case it is a leaf?

In the first example, the reshape op is applied to the leaf node torch.arange(60, dtype=torch.float32, requires_grad=True), so a is no longer a leaf node. Instead, a is the output of the reshape op, and by default autograd only populates .grad on leaf tensors.

In the second example, setting the requires_grad flag on a doesn’t add another op on top of it (a is still a leaf), so after the backward call, a.grad is properly assigned.
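Here is a small sketch of the two cases that you can run to check is_leaf yourself. I can't copy the exact code from the screenshot, so the reshape(3, 4, 5) shape and the name b for the second tensor are my assumptions.

```python
import torch

# Case 1: reshape is applied to a tensor that already requires grad,
# so `a` is the output of an op and therefore not a leaf.
# (The (3, 4, 5) shape is an assumption, not taken from the screenshot.)
a = torch.arange(60, dtype=torch.float32, requires_grad=True).reshape(3, 4, 5)
print(a.is_leaf)              # False
print(a.grad_fn is not None)  # True: `a` was produced by the reshape op

# Case 2: build the tensor first, then flip the flag.
# No op is recorded on top of `b`, so it stays a leaf.
b = torch.arange(60, dtype=torch.float32).reshape(3, 4, 5)
b.requires_grad = True
print(b.is_leaf)              # True

b.sum().backward()
print(b.grad.shape)           # torch.Size([3, 4, 5])
```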

Note: to get the gradient of a non-leaf node, register a hook on it with register_hook.
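A minimal sketch of the hook approach, assuming the same kind of tensor as in the question. The names x, captured and save_grad, and the (a * 2).sum() loss, are just made up for illustration.

```python
import torch

x = torch.arange(60, dtype=torch.float32, requires_grad=True)
a = x.reshape(3, 4, 5)  # non-leaf: output of the reshape op

captured = {}  # hypothetical container for the gradient seen by the hook

def save_grad(grad):
    # Called during backward with the gradient w.r.t. `a`.
    # Returning None leaves the gradient unchanged.
    captured["a"] = grad

a.register_hook(save_grad)

# Illustrative loss: each element of `a` contributes with weight 2.
(a * 2).sum().backward()

print(captured["a"].shape)  # torch.Size([3, 4, 5])
print(x.grad.shape)         # torch.Size([60]), the leaf still gets its .grad
```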


Note that you don’t need a full hook. You can just call a.retain_grad(), and the .grad field will be populated automatically when you call .backward(). It is very similar to the hook, just slightly simpler, since you don’t have to define the hook yourself.
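For example, something like this should work. The tensor shape and the (a * 2).sum() loss are only placeholders I picked for the sketch.

```python
import torch

x = torch.arange(60, dtype=torch.float32, requires_grad=True)
a = x.reshape(3, 4, 5)  # non-leaf tensor

# Ask autograd to keep the gradient of this non-leaf tensor.
a.retain_grad()

# Illustrative loss, not from the original question.
(a * 2).sum().backward()

print(a.is_leaf)     # False
print(a.grad.shape)  # torch.Size([3, 4, 5])
```

Remember to call retain_grad() before backward(), otherwise a.grad stays None.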
