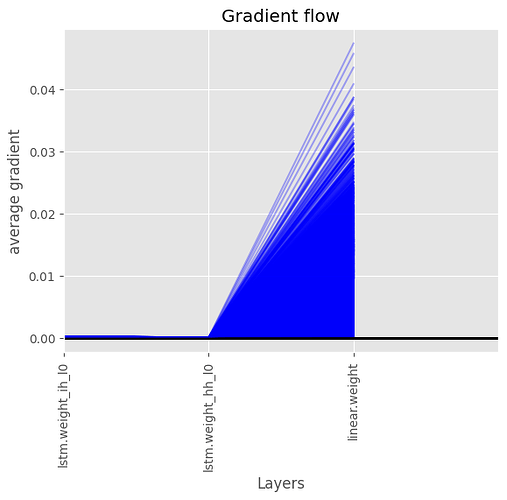

I am training two simple neural networks: one is a plain RNN and the other is LSTM-based. After computing the gradients and plotting them, I am getting almost zero gradient flow in the LSTM case.

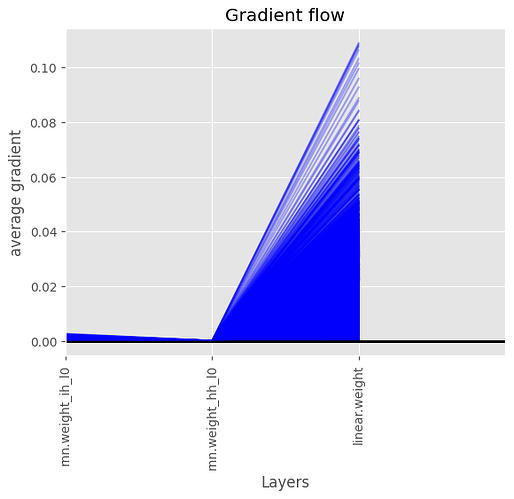

This is almost the same as what I see for the RNN.

These are the simple models I used:

    import torch.nn as nn


    class RNNModel(nn.Module):
        def __init__(self, input_dim, hidden_dim, layer_dim, output_dim, num_layers=1):
            super(RNNModel, self).__init__()
            self.hidden_dim = hidden_dim
            # layer_dim is only stored; num_layers is what sets the RNN depth
            self.layer_dim = layer_dim
            self.rnn = nn.RNN(input_size=input_dim, hidden_size=hidden_dim, num_layers=num_layers, batch_first=True)
            self.linear = nn.Linear(hidden_dim, output_dim)

        def forward(self, x):
            # x: (batch, seq_len, input_dim) because batch_first=True
            x, _ = self.rnn(x)
            # the linear head is applied to the output at every time step
            x = self.linear(x)
            return x


    class LSTMModel(nn.Module):
        def __init__(self, input_dim, hidden_dim, layer_dim, output_dim, num_layers=1):
            super(LSTMModel, self).__init__()
            self.hidden_dim = hidden_dim
            # layer_dim is only stored; num_layers is what sets the LSTM depth
            self.layer_dim = layer_dim
            self.lstm = nn.LSTM(input_size=input_dim, hidden_size=hidden_dim, num_layers=num_layers, batch_first=True)
            self.linear = nn.Linear(hidden_dim, output_dim)

        def forward(self, x):
            # x: (batch, seq_len, input_dim) because batch_first=True
            x, _ = self.lstm(x)
            # the linear head is applied to the output at every time step
            x = self.linear(x)
            return x
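
For anyone who wants to reproduce the comparison, here is a minimal sketch of one way to collect the mean absolute gradient per parameter after a single backward pass. The helper name `mean_abs_grads`, the tensor shapes, the random data, and the MSE loss are all assumptions made for illustration; this is not necessarily the exact code that produced the plots above.

```python
import torch
import torch.nn as nn


def mean_abs_grads(module):
    """Return {parameter name: mean absolute gradient} for parameters that have a gradient."""
    return {
        name: p.grad.abs().mean().item()
        for name, p in module.named_parameters()
        if p.grad is not None
    }


torch.manual_seed(0)

# Hypothetical data: 8 sequences, 20 time steps, 4 input features, 1 target per step.
x = torch.randn(8, 20, 4)
y = torch.randn(8, 20, 1)

results = {}
for layer_cls in (nn.RNN, nn.LSTM):
    recurrent = layer_cls(input_size=4, hidden_size=16, num_layers=1, batch_first=True)
    head = nn.Linear(16, 1)

    out, _ = recurrent(x)                       # out: (batch, seq_len, hidden_size)
    loss = nn.functional.mse_loss(head(out), y)
    loss.backward()                             # fills .grad on every parameter

    results[layer_cls.__name__] = mean_abs_grads(recurrent)

for model_name, grads in results.items():
    for param_name, value in grads.items():
        print(f"{model_name:5s} {param_name:15s} {value:.3e}")
```

Comparing the printed values side by side (or plotting them as bars) shows whether the LSTM gradients really are much smaller than the RNN gradients, or whether the difference comes mostly from the plot's scale.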

Can anyone tell me why the gradient flow is so poor for the LSTM? I expected it to be better than for the RNN.