Hi, I'm struggling to build the deep learning networks for my A2C model.

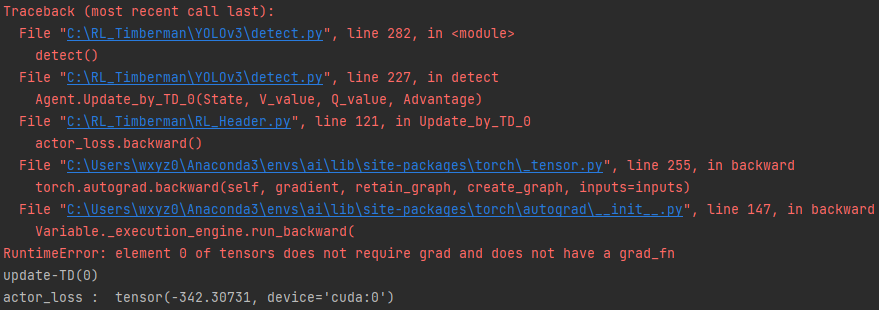

My networks can't be updated because of the error shown in the image.

I can't work out what is causing this problem.

Does anyone know about this problem in detail?

(I already checked my critic network's gradients with `torch.autograd.gradcheck`; it returns `True`.)
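
For reference, this is a minimal sketch of the kind of `gradcheck` call I mean. It is not my exact script: the values of `STATE_DIM` and `NODES` and the `nn.Sequential` stand-in for the critic are assumptions made only so the snippet runs on its own. As far as I understand, `gradcheck` only compares analytical and numerical gradients with respect to the inputs passed to it, so a `True` result does not by itself show that an optimizer step works.

```python
import torch
import torch.nn as nn
from torch.autograd import gradcheck

STATE_DIM = 4   # assumed value, for illustration only
NODES = 16      # assumed value, for illustration only

torch.manual_seed(0)

# Stand-in for the critic with the same layer layout (Linear -> ReLU -> Linear).
# gradcheck relies on finite differences, so the model is cast to float64.
critic = nn.Sequential(
    nn.Linear(STATE_DIM, NODES),
    nn.ReLU(),
    nn.Linear(NODES, 1),
).double()

# The input must be double precision and require gradients to be checked.
state = torch.randn(STATE_DIM, dtype=torch.double, requires_grad=True)

# Compares the autograd Jacobian w.r.t. `state` against a numerical estimate.
ok = gradcheck(critic, (state,))
print(ok)
```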

These are my networks:

    import torch.nn as nn
    import torch.nn.functional as F

    # STATE_DIM and NODES are constants defined elsewhere in my script.


    class Actor_network(nn.Module):
        """Actor"""

        '''model = nn.Sequential()
        model.add_module('fc1', nn.Linear(STATE_DIM, NODES))
        model.add_module('relu', nn.ReLU())
        model.add_module('fc2', nn.Linear(NODES, 2))
        model.add_module('soft', nn.Softmax(dim=0))
        return model'''

        def __init__(self):
            super(Actor_network, self).__init__()
            self.fc1 = nn.Linear(STATE_DIM, NODES)
            self.fc2 = nn.Linear(NODES, 2)

        # nn.Module calls a method named forward (lowercase) when the model
        # is invoked as model(x); a method named Forward is never called.
        def forward(self, x):
            x = F.relu(self.fc1(x))
            # dim=0 assumes a single, unbatched state vector
            x = F.softmax(self.fc2(x), dim=0)
            return x


    class Critic_network(nn.Module):
        """Critic"""

        '''model = nn.Sequential()
        model.add_module('fc1', nn.Linear(STATE_DIM, NODES))
        model.add_module('relu1', nn.ReLU())
        model.add_module('fc2', nn.Linear(NODES, 1))
        return model'''

        def __init__(self):
            super(Critic_network, self).__init__()
            self.fc1 = nn.Linear(STATE_DIM, NODES)
            self.fc2 = nn.Linear(NODES, 1)

        def forward(self, x):
            x = F.relu(self.fc1(x))
            x = self.fc2(x)
            return x
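
To make the question easier to reproduce, here is a minimal sketch of how a single A2C update step could be sanity-checked with networks of this shape. It is not my training loop and not a diagnosis of the error in the image. The class names `Actor` and `Critic`, the values of `STATE_DIM`, `NODES` and `GAMMA`, the shared Adam optimizer, and the single made-up transition are all assumptions for illustration. The sketch names the method `forward` in lowercase, since that is the name `nn.Module` looks for when a model is called, and it uses `dim=-1` for the softmax so that it also works on batched input.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

STATE_DIM, NODES, GAMMA = 4, 16, 0.99  # assumed values, for illustration only


class Actor(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(STATE_DIM, NODES)
        self.fc2 = nn.Linear(NODES, 2)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        return F.softmax(self.fc2(x), dim=-1)  # action probabilities


class Critic(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(STATE_DIM, NODES)
        self.fc2 = nn.Linear(NODES, 1)

    def forward(self, x):
        return self.fc2(F.relu(self.fc1(x)))  # state value


torch.manual_seed(0)
actor, critic = Actor(), Critic()
params = list(actor.parameters()) + list(critic.parameters())
optimizer = torch.optim.Adam(params, lr=1e-3)

# One made-up transition (state, reward, next_state); not real environment data.
state, next_state, reward = torch.randn(STATE_DIM), torch.randn(STATE_DIM), 1.0

dist = torch.distributions.Categorical(probs=actor(state))
action = dist.sample()

value = critic(state)
with torch.no_grad():  # the bootstrap target is treated as a constant
    target = reward + GAMMA * critic(next_state)
advantage = target - value

# Policy-gradient term uses a detached advantage; the critic minimizes its square.
actor_loss = -dist.log_prob(action) * advantage.detach()
critic_loss = advantage.pow(2)
loss = (actor_loss + critic_loss).sum()

before = [p.detach().clone() for p in params]
optimizer.zero_grad()
loss.backward()
optimizer.step()

# Parameters whose .grad is None were never reached by backward().
named = list(actor.named_parameters()) + list(critic.named_parameters())
missing_grad = [name for name, p in named if p.grad is None]
changed = any(not torch.equal(b, p.detach()) for b, p in zip(before, params))
print("parameters without gradient:", missing_grad)
print("any parameter changed:", changed)
```

If a check like this reports parameters without a gradient, or no parameter changes after `optimizer.step()`, that would point at the loss construction or the optimizer setup rather than at the layers themselves.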