Hi,

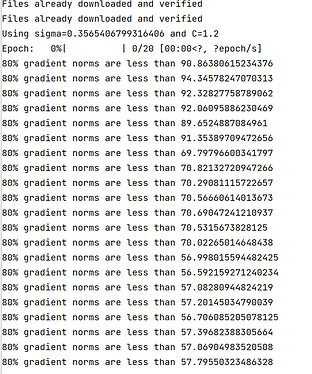

I’m using Opacus to implement DPSGD in my program. One thing I noticed is that the gradients to be clipped are much larger than the max_grad_norm, as the screenshot from the console output.

The code is from the opacus tutorial opacus/tutorials/building_image_classifier.ipynb at main · pytorch/opacus · GitHub I only modified the MAX_PHYSICAL_BATCH_SIZE to fit in my machine. I print the per_sample_norms in the clip_and_accumulate function in the DPOptimizer class of Opacus by following code,

def clip_and_accumulate(self):

per_param_norms = [g.view(len(g), -1).norm(2, dim=-1) for g in self.grad_samples]

per_sample_norms = torch.stack(per_param_norms, dim=1).norm(2, dim=1)

per_sample_clip_factor = (self.max_grad_norm / (per_sample_norms + 1e-6)).clamp(

max=1.0 )

print(f'80% gradient norms are less than {np.percentile(per_sample_norms.cpu(), 80)}')

for p in self.params:

_check_processed_flag(p.grad_sample)

grad_sample = _get_flat_grad_sample(p)

grad = torch.einsum("i,i...", per_sample_clip_factor, grad_sample)

if p.summed_grad is not None:

p.summed_grad += grad

else:

p.summed_grad = grad

_mark_as_processed(p.grad_sample)

And get the following results, the per_sample_norms are much larger than the clip bound, which is C=1.2.

Can somebody tell me why? This is strange because the gradient before the clip is much larger than the clip bound.

Thanks in advance