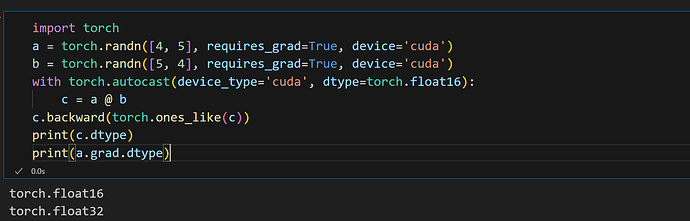

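When I run a matmul under autocast (`torch.autocast(device_type='cuda', ...)`, the newer spelling of `torch.cuda.amp.autocast`), the output `c` is fp16, but the gradient `a.grad` is fp32, not fp16. Why?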

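```python
import torch

a = torch.randn([4, 5], requires_grad=True, device='cuda')
b = torch.randn([5, 4], requires_grad=True, device='cuda')

with torch.autocast(device_type='cuda', dtype=torch.float16):
    c = a @ b  # matmul is autocast to fp16

# backward is run outside the autocast region
c.backward(torch.ones_like(c))

print(c.dtype)       # torch.float16
print(a.grad.dtype)  # torch.float32, not torch.float16
```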
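A minimal sketch of what is likely going on, assuming a PyTorch version that provides `torch.autocast`. Autocast does not change the dtype of `a` and `b` themselves; inside the region it feeds the matmul low-precision copies of them, and those casts are recorded in the autograd graph like any other op. During backward, the gradient flows back through the cast and arrives at the fp32 leaf `a` as fp32, since a tensor's `.grad` has the same dtype as the tensor. The sketch compares autocast with an explicit manual cast and with a leaf that is already low precision. The names `low`, `grad_auto`, `grad_manual` and `a_low` are illustrative only, and bf16 on CPU is used as a fallback (an assumption, not part of the original question) so it runs without a GPU.

```python
import torch

# fp16 on CUDA (as in the question); bf16 on CPU as an assumed fallback.
if torch.cuda.is_available():
    device, low = "cuda", torch.float16
else:
    device, low = "cpu", torch.bfloat16

torch.manual_seed(0)
a = torch.randn(4, 5, device=device, requires_grad=True)  # fp32 leaf
b = torch.randn(5, 4, device=device, requires_grad=True)  # fp32 leaf

# 1) autocast: the matmul runs on low-precision copies of a and b.
with torch.autocast(device_type=device, dtype=low):
    c_auto = a @ b
c_auto.backward(torch.ones_like(c_auto))
grad_auto = a.grad.clone()

# 2) Manual equivalent: cast explicitly, then multiply. The casts are
#    ordinary differentiable ops, so backward casts the gradient back.
a.grad = None
b.grad = None
c_manual = a.to(low) @ b.to(low)
c_manual.backward(torch.ones_like(c_manual))
grad_manual = a.grad.clone()

# 3) Contrast: if the leaf itself is low precision, so is its gradient.
a_low = a.detach().to(low).requires_grad_()
(a_low @ b.detach().to(low)).sum().backward()

print(c_auto.dtype, c_manual.dtype)         # both the low-precision dtype
print(grad_auto.dtype, grad_manual.dtype)   # torch.float32 torch.float32
print(a_low.grad.dtype)                     # the low-precision dtype
print(torch.allclose(grad_auto, grad_manual))
```

Under this reading, fp32 gradients are the intended behavior: the fp32 leaves act as master weights, and only the compute inside the autocast region is done in reduced precision.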