Hello everyone,

I’m trying to classify drugs by their activity against cancer as very active, active, or inactive (labels 0, 1, 2). To do that, I built a Graph Convolutional Network with PyTorch Geometric. This is the code:

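    import torch
    import torch.nn.functional as F
    from torch.nn import BatchNorm1d, Linear
    from torch_geometric.nn import GCNConv, global_add_pool

    n_features = 14

    # define the network
    class Net(torch.nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            # two graph convolutions, each followed by batch norm
            self.conv1 = GCNConv(n_features, 50, cached=False)
            self.bn1 = BatchNorm1d(50)
            self.conv2 = GCNConv(50, 25, cached=False)
            self.bn2 = BatchNorm1d(25)
            # fully connected head applied to the pooled graph embedding
            self.fc1 = Linear(25, 25)
            self.bn3 = BatchNorm1d(25)
            self.fc2 = Linear(25, 25)
            self.fc3 = Linear(25, 3)

        def forward(self, x, edge_index, batch):
            x = F.relu(self.conv1(x, edge_index))
            x = self.bn1(x)
            x = F.relu(self.conv2(x, edge_index))
            x = self.bn2(x)
            # sum node embeddings to get one vector per graph
            x = global_add_pool(x, batch)
            x = F.relu(self.fc1(x))
            x = self.bn3(x)
            x = F.relu(self.fc2(x))
            x = F.dropout(x, p=0.2, training=self.training)
            x = self.fc3(x)
            # class probabilities; these are what gets passed to the criterion
            x = F.softmax(x, dim=1)
            return x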
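One thing that may be worth double-checking in the model above: `forward` returns softmax probabilities, while `torch.nn.CrossEntropyLoss` (used as the criterion in the training loop) is documented to expect raw, unnormalized logits and to apply log-softmax itself. The sketch below is a plain-Python re-implementation of that formula, not the library code; the helper names `softmax` and `cross_entropy` are my own. It only illustrates what happens to the loss value when probabilities are passed in place of logits.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(scores, target):
    """Cross-entropy that applies softmax to `scores` first,
    mirroring the documented behaviour of CrossEntropyLoss."""
    return -math.log(softmax(scores)[target])

# Raw logits: a confident, correct prediction gives a loss close to zero.
loss_from_logits = cross_entropy([10.0, 0.0, 0.0], target=0)

# Probabilities passed as if they were logits: even a perfect one-hot
# prediction is squashed by the second softmax, so the loss stays well above zero.
perfect_probs = [1.0, 0.0, 0.0]
loss_floor = cross_entropy(perfect_probs, target=0)

print(f"loss from logits:        {loss_from_logits:.4f}")
print(f"loss from probabilities: {loss_floor:.4f}")
```

With three classes the second value is ln(1 + 2/e), roughly 0.55, so under this setup the per-sample loss cannot go below that and the gradients reaching `fc3` are damped by the extra softmax. Whether this explains the slow accuracy in my case is an assumption, not something I have verified.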

My dataset contains 2476 samples, split by label as follows:

- label 0: 1184 samples;
- label 1: 826 samples;
- label 2: 466 samples.
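Because these counts are imbalanced, two reference numbers may help when reading the accuracy: the accuracy of always predicting the majority class, and inverse-frequency class weights. This is a minimal sketch in plain Python; the weighting formula `n_samples / (n_classes * count)` is one common heuristic that I am assuming here, and it is not used in my training code.

```python
# Class counts taken from the dataset description above.
counts = {0: 1184, 1: 826, 2: 466}

n_samples = sum(counts.values())
n_classes = len(counts)

# Accuracy obtained by always predicting the most frequent label.
majority_baseline = max(counts.values()) / n_samples

# Inverse-frequency weights: rarer classes get a larger weight.
class_weights = {
    label: n_samples / (n_classes * count) for label, count in counts.items()
}

print(f"samples: {n_samples}")
print(f"majority-class baseline accuracy: {majority_baseline:.3f}")
for label, weight in class_weights.items():
    print(f"label {label}: weight {weight:.3f}")
```

If one wanted to try them, weights like these could be converted to a float tensor and passed through the `weight` argument of `torch.nn.CrossEntropyLoss`; I have not tested whether that helps on this dataset.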

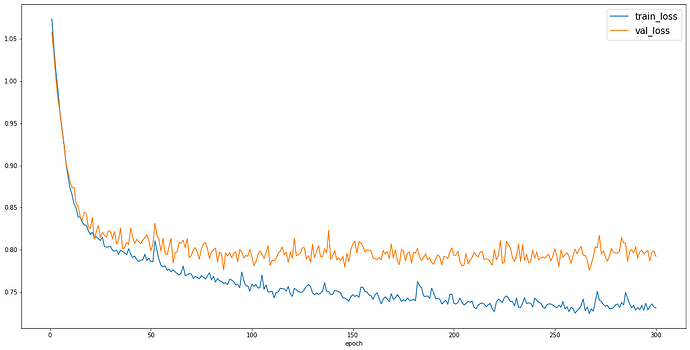

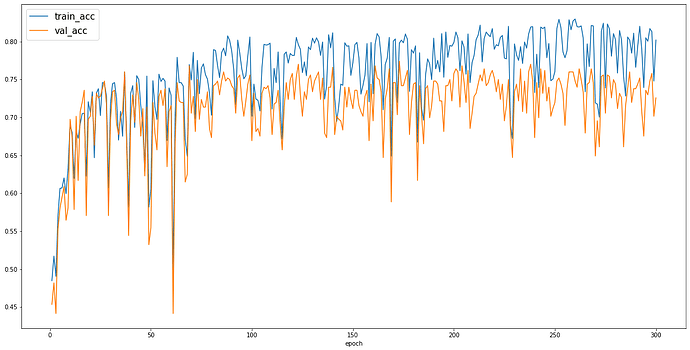

Each graph has a node feature matrix with 14 features per node. When I train the network, the loss seems to decrease steadily, but the accuracy improves slowly and stays poor. The charts above show the loss (train and val) and the accuracy (train and val); the training loop I use is below. What could I do to improve my model’s performance? Thank you all!

    model = Net()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001,
                                 weight_decay=0.0001)
    criterion = torch.nn.CrossEntropyLoss()

    def train():
        """Run one training epoch and return the mean loss per graph."""
        model.train()
        loss_all = 0
        for data in train_loader:
            optimizer.zero_grad()
            output = model(data.x, data.edge_index, data.batch)
            loss = criterion(output, data.y)
            loss.backward()
            loss_all += loss.item() * data.num_graphs
            optimizer.step()
        return loss_all / len(train_loader.dataset)

    def test_loss(loader):
        """Return the mean loss per graph on `loader` without updating weights."""
        # switch to eval mode so dropout is off and batch norm uses running stats
        model.eval()
        total_loss_val = 0
        with torch.no_grad():
            for data in loader:
                output = model(data.x, data.edge_index, data.batch)
                batch_loss = criterion(output, data.y)
                total_loss_val += batch_loss.item() * data.num_graphs
        return total_loss_val / len(loader.dataset)

    def test(loader):
        """Return the accuracy on `loader`."""
        model.eval()
        correct = 0
        with torch.no_grad():
            for data in loader:
                output = model(data.x, data.edge_index, data.batch)
                # index of the highest-scoring class for each graph
                pred = output.max(dim=1)[1]
                correct += pred.eq(data.y).sum().item()
        return correct / len(loader.dataset)

    hist = {"train_loss": [], "val_loss": [], "acc": [], "test_acc": []}

    for epoch in range(1, 301):
        train_loss = train()
        val_loss = test_loss(val_loader)
        train_acc = test(train_loader)
        test_acc = test(val_loader)
        hist["train_loss"].append(train_loss)
        hist["val_loss"].append(val_loss)
        hist["acc"].append(train_acc)
        hist["test_acc"].append(test_acc)
        print(f'Epoch: {epoch}, Train loss: {train_loss:.3}, Val loss: {val_loss:.3}, Train_acc: {train_acc:.3}, Test_acc: {test_acc:.3}')
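Since a single accuracy number can hide how the smaller classes are doing, a per-class breakdown may be more informative than the value printed in the loop. The sketch below is plain Python; `per_class_recall` is a hypothetical helper, and the label lists are made-up toy values, not results from my model.

```python
from collections import Counter

def per_class_recall(y_true, y_pred, labels=(0, 1, 2)):
    """Fraction of samples of each true label that were predicted correctly."""
    total = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {
        label: hits[label] / total[label] if total[label] else float("nan")
        for label in labels
    }

# Toy labels only, chosen so the majority class dominates the predictions.
toy_true = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2]
toy_pred = [0, 0, 0, 0, 0, 1, 1, 0, 0, 2]

recall = per_class_recall(toy_true, toy_pred)
accuracy = sum(t == p for t, p in zip(toy_true, toy_pred)) / len(toy_true)
# Balanced accuracy: the unweighted mean of the per-class recalls.
balanced_accuracy = sum(recall.values()) / len(recall)

print(f"accuracy: {accuracy:.3f}")
print(f"balanced accuracy: {balanced_accuracy:.3f}")
for label, value in recall.items():
    print(f"label {label}: recall {value:.3f}")
```

In the loop above, the two lists could be filled inside `test` by extending them with `data.y.tolist()` and `pred.tolist()` for each batch; that wiring is an assumption and is not shown here.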