Hi everyone,

I am using a GCN model to perform node classification.

I took the GCN implementation from the following Colab notebook: https://colab.research.google.com/github/zaidalyafeai/Notebooks/blob/master/Deep_GCN_Spam.ipynb#scrollTo=Hhabp4QvoP6V

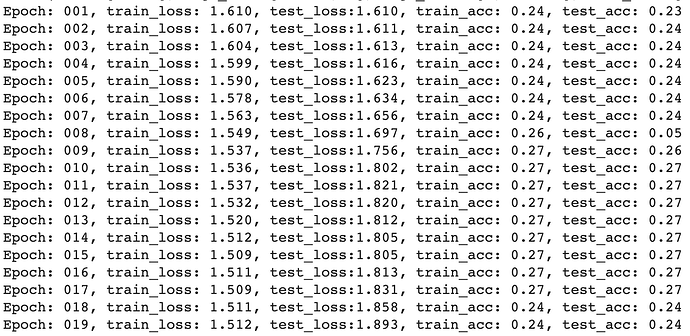

The issue is that the accuracy I obtain is about 0.22, and the model does not seem to learn from the graph data. Since my data has 5 classes, a classifier that guesses at random would give an accuracy close to 0.2.
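For reference, the chance-level baseline mentioned above can be checked with a small simulation. This is only a sketch: the helper name `random_guess_accuracy` and the label list are made up for illustration and are not my actual data.

```python
import random


def random_guess_accuracy(labels, num_classes, seed=0):
    """Accuracy of a classifier that picks one of num_classes uniformly at random."""
    rng = random.Random(seed)
    hits = sum(1 for y in labels if rng.randrange(num_classes) == y)
    return hits / len(labels)


# Hypothetical labels for 5 classes (invented values, not the real dataset).
labels = [i % 5 for i in range(50_000)]

acc = random_guess_accuracy(labels, num_classes=5)
print(f"theoretical: {1 / 5:.2f}, simulated: {acc:.3f}")
```

A uniform random guess is right with probability 1/5 for every node, whatever the class distribution, so an accuracy of 0.22 is barely above chance.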

The implementation of the GCN model is:

    import torch.nn as nn
    import torch.nn.functional as F
    from torch_geometric.nn import SplineConv

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            # Six SplineConv layers: 1 input feature -> 5 class scores
            self.conv1 = SplineConv(1, 16, dim=1, kernel_size=5)
            self.conv2 = SplineConv(16, 32, dim=1, kernel_size=5)
            self.conv3 = SplineConv(32, 64, dim=1, kernel_size=7)
            self.conv4 = SplineConv(64, 128, dim=1, kernel_size=7)
            self.conv5 = SplineConv(128, 128, dim=1, kernel_size=11)
            self.conv6 = SplineConv(128, 5, dim=1, kernel_size=11)
            # Stored but not used in forward(); F.dropout below uses its default p=0.5
            self.dropout = 0.25

        def forward(self, batch):
            x, edge_index, edge_attr = batch.x, batch.edge_index, batch.edge_attr
            # batch = batch.batch
            # ELU is applied after every other layer only
            x = F.elu(self.conv1(x, edge_index, edge_attr))
            x = self.conv2(x, edge_index, edge_attr)
            x = F.elu(self.conv3(x, edge_index, edge_attr))
            x = self.conv4(x, edge_index, edge_attr)
            x = F.elu(self.conv5(x, edge_index, edge_attr))
            x = self.conv6(x, edge_index, edge_attr)
            # x = pyt_geom.global_mean_pool(x, batch)
            # Dropout is applied to the final class scores, followed by softmax
            x = F.dropout(x, training=self.training)
            output = F.softmax(x, dim=1)
            return output

The rest of the implementation is the same as the code in the notebook linked above.

The graph data has the following format:

    Batch(batch=[43267], edge_attr=[475194, 1], edge_index=[2, 475194], x=[43267, 1], y=[43267])

All edge attributes and node features are set to 1, because the only data I have is the edge list of the network.
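To make the format concrete, here is a minimal sketch of how inputs with these shapes can be derived from an edge list alone. The helper `constant_graph_inputs` and the toy edge list are hypothetical and use plain Python lists; in the real pipeline each list would be converted to a tensor before being wrapped in the batch object.

```python
def constant_graph_inputs(edge_list, num_nodes):
    """Build x, edge_index and edge_attr as nested lists, all values set to 1."""
    x = [[1.0] for _ in range(num_nodes)]      # shape [num_nodes, 1]
    sources = [u for u, _ in edge_list]
    targets = [v for _, v in edge_list]
    edge_index = [sources, targets]            # shape [2, num_edges]
    edge_attr = [[1.0] for _ in edge_list]     # shape [num_edges, 1]
    return x, edge_index, edge_attr


# Toy edge list with 4 nodes (invented for illustration).
toy_edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
x, edge_index, edge_attr = constant_graph_inputs(toy_edges, num_nodes=4)

print(len(x), len(edge_index), len(edge_index[0]), len(edge_attr))
```

With this construction every row of `x` and of `edge_attr` is identical, so the only thing that distinguishes one node from another is its position in `edge_index`.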

Thank you for your help!