Hello,

I’m trying to implement Alex Graves’s handwriting synthesis network. Having already implemented section 4 of the paper, I’m now trying to add the attention mechanism from section 5.1 on top of it so the network can learn which character it’s writing.

- My implementation of section 4: [Handwriting prediction - Model 2.ipynb]

- My implementation of section 5: [Handwriting synthesis.ipynb]

I have documented my notebooks as thoroughly as possible.

The two networks are roughly the same apart from the attention mechanism. In short, I have an LSTM network with 3 hidden layers that takes the coordinates of handwritten sequences as input. The attention mechanism is built between LSTM1 and LSTM2 and uses a one-hot encoding of the character sequence. My first notebook works properly, which is why I’m almost sure the attention mechanism is at fault.
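For reference, here is a minimal NumPy sketch of a single step of the soft window described in section 5.1, written independently of the notebooks. It assumes the character string is one-hot encoded into a matrix `c` of shape `(U, V)` and that the window parameters come from a single linear layer on the first LSTM layer's output. The names `one_hot`, `window_step`, `W` and `b`, and the sizes used below, are hypothetical and chosen only for illustration.

```python
import numpy as np

def one_hot(text, alphabet):
    """Encode a string as a (U, V) one-hot matrix c (hypothetical helper)."""
    index = {ch: i for i, ch in enumerate(alphabet)}
    c = np.zeros((len(text), len(alphabet)))
    for u, ch in enumerate(text):
        c[u, index[ch]] = 1.0
    return c

def window_step(h1, kappa_prev, c, W, b):
    """One time step of the soft window (sketch, assumed shapes).

    h1: output of the first LSTM layer at step t, shape (H,)
    kappa_prev: window positions from step t-1, shape (K,)
    c: one-hot character sequence, shape (U, V)
    W, b: hypothetical window-layer parameters, shapes (3K, H) and (3K,)
    """
    K = kappa_prev.shape[0]
    params = W @ h1 + b                      # linear layer on the LSTM1 output
    alpha_hat, beta_hat, kappa_hat = params[:K], params[K:2 * K], params[2 * K:]
    alpha = np.exp(alpha_hat)                # importance of each Gaussian
    beta = np.exp(beta_hat)                  # sharpness of each Gaussian
    kappa = kappa_prev + np.exp(kappa_hat)   # positions can only move forward
    u = np.arange(1, c.shape[0] + 1)         # character positions 1..U
    # phi(t, u) = sum_k alpha_k * exp(-beta_k * (kappa_k - u)^2), shape (U,)
    phi = np.sum(alpha[:, None] * np.exp(-beta[:, None] * (kappa[:, None] - u[None, :]) ** 2), axis=0)
    w = phi @ c                              # window vector fed to LSTM2, shape (V,)
    return w, kappa, phi

# Usage with made-up sizes: H = 20 hidden units, K = 10 Gaussians.
rng = np.random.default_rng(0)
alphabet = "abcdefghijklmnopqrstuvwxyz "
c = one_hot("hello world", alphabet)
H, K = 20, 10
W = rng.normal(scale=0.01, size=(3 * K, H))
b = np.zeros(3 * K)
h1 = rng.normal(size=H)
w, kappa, phi = window_step(h1, np.zeros(K), c, W, b)
print(w.shape, phi.shape)  # (27,) (11,)
```

Because each row of `c` is one-hot, the entries of `w` sum to the total window mass `phi.sum()`, which is a cheap sanity check to run against a real implementation at each step.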

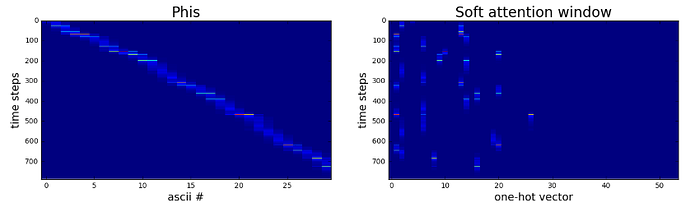

I’ve left the training run in my synthesis notebook (the second one) so you can see how the model’s predictions evolve during training. They seem to progressively vanish, and at some point the loss jumps to NaN.

Clearly something is off. I’ve tried experimenting with the weight initialization of the attention layer, since it has a large impact on how my attention windows initially behave, but the network doesn’t seem to learn anything. My heatmaps should look more like this:

My implementation of the equations might be off, but I’ve rechecked it so many times that I can’t find an error.
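For reference, these are the soft-window equations from section 5.1 as I read them, where $h^1_t$ is the output of the first hidden layer, $K$ is the number of Gaussians, $U$ is the number of characters and $c_u$ is the one-hot vector of character $u$:

$$
\begin{aligned}
(\hat\alpha_t, \hat\beta_t, \hat\kappa_t) &= W_{h^1 p}\, h^1_t + b_p \\
\alpha_t = \exp(\hat\alpha_t), \quad \beta_t &= \exp(\hat\beta_t), \quad \kappa_t = \kappa_{t-1} + \exp(\hat\kappa_t) \\
\phi(t,u) &= \sum_{k=1}^{K} \alpha^k_t \exp\!\left(-\beta^k_t \left(\kappa^k_t - u\right)^2\right) \\
w_t &= \sum_{u=1}^{U} \phi(t,u)\, c_u
\end{aligned}
$$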

This is a pretty specific question, so if this isn’t the right place, sorry in advance. I’d appreciate any help and would be happy to elaborate.


Thanks!

(If you want to try the notebooks, downloading the whole repository should get you started, provided you have the right dependencies.)