I am a music producer. Over the past few years we have installed and configured hundreds of music applications on our studio’s computers, so we cannot easily switch to new machines.

Our Windows computers have NVIDIA GeForce GTX 660M GPUs. Because of that, the newest NVIDIA driver we can install is version 426.00, and the newest CUDA toolkit we can install is CUDA 10.1 Update 2.

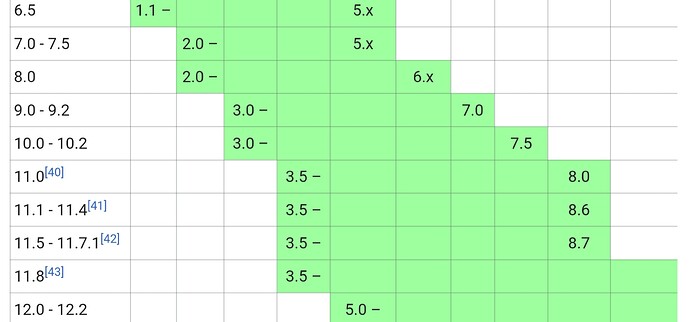

Recently we started working with an AI music application that requires PyTorch 1.10.0 or later. Unfortunately, there are no prebuilt PyTorch >= 1.10.0 wheels built against CUDA 10.1 available for download, so I have to build it from source myself.

No big deal. I have successfully built PyTorch 1.9.1 from source on CUDA 10.1 in the past.

The problem is that when I use the same environment to build PyTorch 1.10.0 or any later version, CMake fails with an error saying that CUDA 10.2 or above is required, and the build stops.

Here is the error message:

```
-- Found CUDA: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v10.1 (found version "10.1")
-- Caffe2: CUDA detected: 10.1
-- Caffe2: CUDA nvcc is: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v10.1/bin/nvcc.exe
-- Caffe2: CUDA toolkit directory: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v10.1
CMake Error at cmake/public/cuda.cmake:42 (message):
  PyTorch requires CUDA 10.2 or above.
Call Stack (most recent call first):
  cmake/Dependencies.cmake:1191 (include)
  CMakeLists.txt:653 (include)
```
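The log points at line 42 of `cmake/public/cuda.cmake` as the place where the error is raised, so the first step is to read the exact condition there. A minimal sketch in Python prints the lines around a given file:line location. It assumes the PyTorch source checkout lives in a directory called `pytorch`, which is a hypothetical path you should adjust to your own clone. The helper name `show_context` is also just an illustration.

```python
from pathlib import Path


def show_context(path, lineno, radius=3):
    """Return the lines around 1-based line `lineno` of `path`, each prefixed
    with its line number; the target line is marked with '>'."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    start = max(lineno - radius, 1)
    end = min(lineno + radius, len(lines))
    out = []
    for n in range(start, end + 1):
        marker = ">" if n == lineno else " "
        out.append(f"{marker}{n:5d}: {lines[n - 1]}")
    return out


if __name__ == "__main__":
    # Hypothetical location of the PyTorch source checkout; adjust as needed.
    target = Path("pytorch") / "cmake" / "public" / "cuda.cmake"
    if target.exists():
        # Line 42 is the location reported in the CMake error log.
        print("\n".join(show_context(target, 42)))
    else:
        print(f"{target} not found; point this at your PyTorch checkout.")
```

This only shows which condition triggers the message. It does not change the build or say whether skipping the check is safe.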

Seriously?! Isn’t the whole point of building PyTorch from source to be able to build any PyTorch version against any CUDA version? I’ve heard that many people have successfully built PyTorch >= 1.10.0 against CUDA 10.1, so how did they do it?

So, is there a build option or command I can use to bypass this CUDA version check, so that a newer PyTorch can be built against an older CUDA without the build being interrupted?

After all, “not supported” doesn’t mean “guaranteed not to work”, right?

What I want is to build this file first, `torch-1.10.0+cu101-cp38-cp38-win_amd64.whl`, install it with pip, and then judge for myself whether it works.
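For reference, that file name follows the standard wheel naming convention, `{distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl`. A minimal sketch that splits the target name into those fields shows what it asks for: PyTorch 1.10.0 with the local version label `cu101`, built for CPython 3.8 on 64-bit Windows. Reading `cu101` as “built against CUDA 10.1” is PyTorch’s own labeling convention for its wheels. The wheel format itself does not enforce it. The names `WheelName` and `parse_wheel_filename` are hypothetical helpers for illustration only.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel filename into its standard components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        dist, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:
        # Optional build tag sits between the version and the python tag.
        dist, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected number of fields in {filename}")
    return WheelName(dist, version, build, py, abi, plat)


if __name__ == "__main__":
    w = parse_wheel_filename("torch-1.10.0+cu101-cp38-cp38-win_amd64.whl")
    # The part after '+' in the version is a local version label.
    public, _, local = w.version.partition("+")
    print(w.distribution, public, local, w.python_tag, w.abi_tag, w.platform_tag)
    # prints: torch 1.10.0 cu101 cp38 cp38 win_amd64
```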

In my case, how can I force PyTorch to stop nagging and just do her job?!