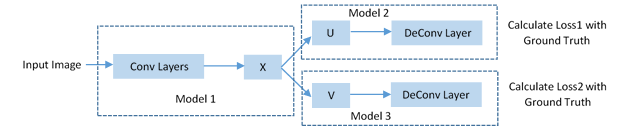

I am working on a model that looks like the figure above.

The input image (I) is fed to the system, goes through some convolution layers, and produces a feature vector X. X is then connected to two fully connected layers, U and V. U and V are each connected to a series of deconvolution layers that generate an image. I have a different loss and a different ground truth at the end of each of U and V.
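
To make the description concrete, here is a rough sketch of the architecture in PyTorch. Everything specific in it is an assumption for illustration only: the class name `TwoBranchNet`, the layer sizes, the 32x32 input, and the reshape of U and V into small feature maps before the deconvolutions. It only shows the shape of the graph (one shared encoder, two branches), not my actual model.

```python
import torch
import torch.nn as nn


class TwoBranchNet(nn.Module):
    """Hypothetical stand-in: shared conv encoder -> X -> (U, V) -> two deconv stacks."""

    def __init__(self, feat_dim=128, hidden_dim=64):
        super().__init__()
        # Shared encoder: image I -> feature vector X (sizes are placeholders).
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1),   # 32x32 -> 16x16
            nn.ReLU(),
            nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1),  # 16x16 -> 8x8
            nn.ReLU(),
            nn.Flatten(),
            nn.Linear(16 * 8 * 8, feat_dim),
        )
        # Two fully connected layers that both read X.
        self.fc_u = nn.Linear(feat_dim, hidden_dim)
        self.fc_v = nn.Linear(feat_dim, hidden_dim)
        # One deconvolution stack per branch.
        self.dec_u = self._make_decoder()
        self.dec_v = self._make_decoder()

    @staticmethod
    def _make_decoder():
        return nn.Sequential(
            nn.Unflatten(1, (4, 4, 4)),  # assumes hidden_dim == 64 -> 4 channels of 4x4
            nn.ConvTranspose2d(4, 8, kernel_size=4, stride=2, padding=1),  # 4x4 -> 8x8
            nn.ReLU(),
            nn.ConvTranspose2d(8, 3, kernel_size=4, stride=2, padding=1),  # 8x8 -> 16x16
        )

    def forward(self, image):
        x = self.encoder(image)  # shared feature vector X
        u = self.fc_u(x)         # branch U
        v = self.fc_v(x)         # branch V
        return self.dec_u(u), self.dec_v(v)


model = TwoBranchNet()
image = torch.randn(2, 3, 32, 32)  # dummy batch of two RGB images
out_u, out_v = model(image)
```

Each of `out_u` and `out_v` would then get its own loss against its own ground truth.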

The problem is that I do not understand how to perform backpropagation in such a model. How do I combine the two gradients when they backpropagate from U->X and V->X? The idea is to simply add the two gradients, but I do not understand how to achieve that.
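
As a small experiment on what "adding the two gradients" could mean, the sketch below compares the per-branch gradients at X with the gradient obtained by calling `backward()` on the sum of the two losses. The tensor `x` stands in for X, the two `nn.Linear` layers stand in for U and V, and the MSE and L1 losses with random targets are placeholders, not my real losses. As far as I understand autograd, gradients reaching a tensor that is used in several places are accumulated by summation, which is what the final check tests.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Stand-in for the shared feature vector X (batch of 4, 8 features).
x = torch.randn(4, 8, requires_grad=True)

# Stand-ins for the two fully connected layers U and V.
fc_u = nn.Linear(8, 5)
fc_v = nn.Linear(8, 5)

# Placeholder ground truths and losses, one per branch.
target_u = torch.randn(4, 5)
target_v = torch.randn(4, 5)
loss_u = F.mse_loss(fc_u(x), target_u)
loss_v = F.l1_loss(fc_v(x), target_v)

# Gradient of each loss with respect to X, computed separately.
# retain_graph=True keeps the graph alive for the later backward() call.
(grad_u,) = torch.autograd.grad(loss_u, x, retain_graph=True)
(grad_v,) = torch.autograd.grad(loss_v, x, retain_graph=True)

# A single backward pass on the summed loss fills x.grad.
(loss_u + loss_v).backward()

# x.grad should match the sum of the two per-branch gradients.
grads_match = torch.allclose(x.grad, grad_u + grad_v, atol=1e-6)
print(grads_match)
```

If this holds for the real model too, summing the two losses and calling `backward()` once would be enough, with no manual handling of the U->X and V->X gradients.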

This is my first question on the PyTorch discussion forum. Let me know if anything is unclear or if you need more details.