Hello everyone,

Let’s say I have the following RNN:

```python
import torch

self.rnn = torch.nn.GRU(
    input_size=1024,
    hidden_size=512,
    num_layers=2,
    batch_first=True,
)
```

What does it mean?

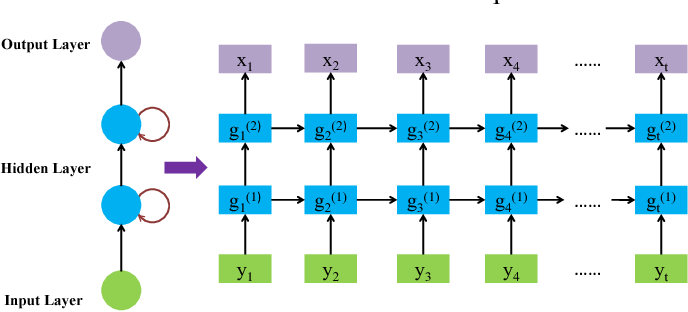

I have a basic understanding of LSTMs: the input x_t goes through a GRU, then the hidden state h_t is updated to h_{t+1} and fed into the next unit along with the input x_{t+1}. In that regard, I understand the input size (the number of features in each element x_t of my input sequence), but I don’t understand hidden_size and num_layers. I’m sure seeing a relevant picture would help. Can you help me out?

The hidden size is the output size of the RNN cell, i.e. the dimension of the hidden state h_t.
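To make the shapes concrete, here is a small sketch (assuming a recent PyTorch; the batch size of 4 and sequence length of 10 are arbitrary example values) that runs the GRU from the question on random input and prints what comes out:

```python
import torch

# Same configuration as in the question
rnn = torch.nn.GRU(input_size=1024, hidden_size=512, num_layers=2, batch_first=True)

batch, seq_len = 4, 10                 # arbitrary example sizes
x = torch.randn(batch, seq_len, 1024)  # (batch, seq, input_size) because batch_first=True

output, h_n = rnn(x)
print(output.shape)  # torch.Size([4, 10, 512]): top layer's hidden state at every timestep
print(h_n.shape)     # torch.Size([2, 4, 512]): final hidden state of each of the 2 layers
```

Note that batch_first=True only affects the input and `output`; `h_n` is always laid out as (num_layers, batch, hidden_size). For a unidirectional GRU, `output[:, -1]` is the same tensor as `h_n[-1]`, the top layer's hidden state at the last timestep.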

If num_layers >= 2, the layers are stacked: the output (hidden state) of the previous layer at each timestep is the input of the next layer.
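One way to check the stacking is the following sketch (assuming a recent PyTorch and the default dropout=0): copy the per-layer weights of a 2-layer GRU into two separate single-layer GRUs, feed the first one's output sequence into the second, and compare with the stacked module.

```python
import torch

torch.manual_seed(0)
stacked = torch.nn.GRU(input_size=1024, hidden_size=512, num_layers=2, batch_first=True)

# Layer 1 reads the 1024-dim input; layer 2 reads layer 1's 512-dim output
layer1 = torch.nn.GRU(input_size=1024, hidden_size=512, batch_first=True)
layer2 = torch.nn.GRU(input_size=512, hidden_size=512, batch_first=True)

# Copy the stacked GRU's per-layer parameters (suffixes _l0 and _l1)
with torch.no_grad():
    for name in ["weight_ih", "weight_hh", "bias_ih", "bias_hh"]:
        getattr(layer1, f"{name}_l0").copy_(getattr(stacked, f"{name}_l0"))
        getattr(layer2, f"{name}_l0").copy_(getattr(stacked, f"{name}_l1"))

# Layer 2's input weights have 512 columns: its input is layer 1's hidden state
print(stacked.weight_ih_l1.shape)  # torch.Size([1536, 512]) (3 gates x 512 rows)

x = torch.randn(4, 10, 1024)
out_stacked, h_stacked = stacked(x)

out1, h1 = layer1(x)     # first layer over the whole sequence
out2, h2 = layer2(out1)  # its output sequence is the second layer's input

print(torch.allclose(out_stacked, out2, atol=1e-5))                # True
print(torch.allclose(h_stacked, torch.cat([h1, h2]), atol=1e-5))   # True
```

So a 2-layer GRU is just two GRUs chained along the "vertical" direction, and `h_n` stacks the last hidden state of each layer.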

Is this picture accurate?

Is g^(1) always the same cell, with the same weights? Is its output the hidden state? Do the arrow on the right and the arrow on the top feed the hidden state to the next timestep and to the next layer?

Yes. Within a layer, g^(1) is the same cell with the same weights at every timestep, and its output is the hidden state.
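The weight sharing across timesteps can be seen by unrolling a single-layer GRU by hand. This is a sketch assuming a recent PyTorch, where `torch.nn.GRUCell` uses the same gate equations as `torch.nn.GRU`; the small sizes are arbitrary, chosen only for illustration.

```python
import torch

torch.manual_seed(0)
gru = torch.nn.GRU(input_size=8, hidden_size=5, batch_first=True)  # small sizes for illustration
cell = torch.nn.GRUCell(input_size=8, hidden_size=5)

# Give the cell exactly the weights of the GRU's single layer
with torch.no_grad():
    cell.weight_ih.copy_(gru.weight_ih_l0)
    cell.weight_hh.copy_(gru.weight_hh_l0)
    cell.bias_ih.copy_(gru.bias_ih_l0)
    cell.bias_hh.copy_(gru.bias_hh_l0)

x = torch.randn(3, 6, 8)  # (batch, seq, input_size)
h = torch.zeros(3, 5)     # initial hidden state (PyTorch defaults h_0 to zeros)
outputs = []
for t in range(x.size(1)):
    h = cell(x[:, t], h)  # same cell, same weights, at every timestep
    outputs.append(h)     # the cell's output is the new hidden state
manual = torch.stack(outputs, dim=1)  # (batch, seq, hidden_size)

out, h_n = gru(x)
print(torch.allclose(out, manual, atol=1e-5))  # True
```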

The arrow on the top feeds the hidden state to the next layer, where it is used as that layer’s input.

The arrow on the right feeds the recurrent state to the same layer at the next timestep: the hidden state and the cell state if the cell is an LSTM cell, or only the hidden state if it is a GRU cell.
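In PyTorch this difference shows up in what the modules return and accept as state. The sketch below (assuming a recent PyTorch; the split point of 5 timesteps is arbitrary) processes a sequence in two chunks and passes the state from the end of the first chunk into the second, which is what the arrow on the right does between timesteps:

```python
import torch

torch.manual_seed(0)
x = torch.randn(4, 10, 1024)  # (batch, seq, input_size)

gru = torch.nn.GRU(input_size=1024, hidden_size=512, num_layers=2, batch_first=True)
lstm = torch.nn.LSTM(input_size=1024, hidden_size=512, num_layers=2, batch_first=True)

# A GRU returns only a hidden state; an LSTM returns a (hidden state, cell state) tuple
gru_out, gru_h = gru(x)
lstm_out, (lstm_h, lstm_c) = lstm(x)

first, second = x[:, :5], x[:, 5:]

out_a, h = gru(first)
out_b, h = gru(second, h)  # GRU: carry the hidden state only
gru_chunked = torch.cat([out_a, out_b], dim=1)

out_a, (h, c) = lstm(first)
out_b, (h, c) = lstm(second, (h, c))  # LSTM: carry hidden state and cell state
lstm_chunked = torch.cat([out_a, out_b], dim=1)

print(torch.allclose(gru_out, gru_chunked, atol=1e-5))    # True
print(torch.allclose(lstm_out, lstm_chunked, atol=1e-5))  # True
```

Both the GRU's `h` and the LSTM's `h` and `c` have shape (num_layers, batch, hidden_size), one state per layer.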

You can read this.

Thank you, I got it!