EDIT: Lowering the learning rate all the way down to 0.0001 seems to make a big difference here. And changing the reinforce part away from pytorch’s example to another implementation seems to help but haven’t figured out all the details yet.

I have a toy RL project implementing he REINFORCE algorithm with a policy gradient agent that seems to be consistently learning to choose actions that reliably generate negative rewards. Are there any likely reasons this could be so?

Additional details below. The rules of the environment are of my own design. Since that makes it obviously hard to diagnose, I’ll take whatever high or low granularity suggestions I can get.

My environment is a 25x25 grid upon which a bot can move and attempt to acquire a target.

Bots can choose the following 17 actions:

-

Do nothing

-

Move 1 space (in any of the 8 directions surrounding it)

-

Attempt to acquire a target 1 space away (in any of the 8 directions surrounding it)

The reward system is such that:

-

+1 for moving closer to any target

-

-1 for moving farther away from the targets

-

+10 for acquiring a target

-

-0.5 for any invalid action (i.e. moving outside the grid, aiming where a target doesn’t exist)

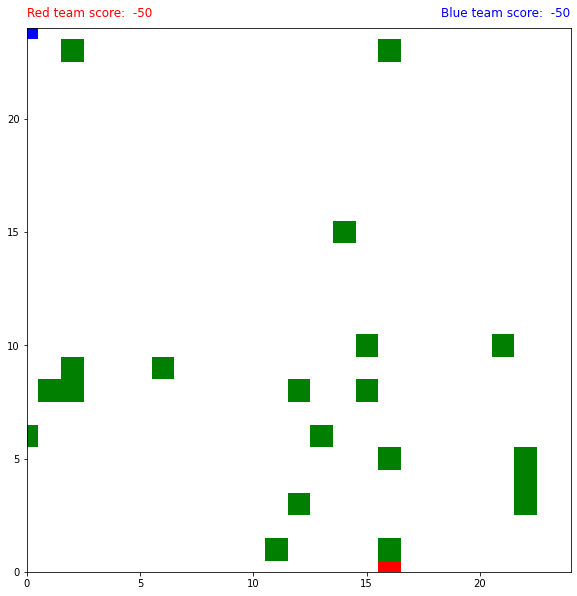

Somehow, the bots almost always seem to learn the behavior of constantly heading in the direction of a wall. You can see this in the attached image.

There are two bots, each on “teams” (red and blue), but this is just for future implementation and doesn’t have any effect on their policy. Green are the targets.

Here is the link to the full code: Google Colab

but since that’s long I’ll try and highlight what I imagine are the relevant bits. Here’s the forward/action pass of the network:

def forward(self, x):

# Run through all of our layers defined above

x = ... # cut for brevity

x = F.softmax(self.linear_final(x), dim=1)

return x

def decide_action(self, x):

# Create the prob distribution from output and return the action/logprob

probs = self.forward(x)

prob_dist = Categorical(probs)

action = prob_dist.sample()

action_item = action.item()

logp = prob_dist.log_prob(action)

return action_item, logp

And here’s the reinforce part:

# Function to calculate loss and update bot network

def reinforce_bot(b, debug):

# Setup lists and vars to work off

discounted_reward = 0

l_returns = []

l_policy_loss = []

# Work through the bot's episode rewards backwards

# The net effect of this will be such that we built rewards for only actions and their following rewards

# (i.e. action for step n only gets rewards for steps > n, never steps < n)

# Additionally we'll build in our reward discounting (where future steps contribute less to overall reward)

for reward in b.l_episode_rewards[::-1]:

discounted_reward = reward + gamma * discounted_reward

l_returns.insert(0, discounted_reward) # but insert back at the beginning to get correct order

# Now turn the rewards into a tensor for working with gradient

t_returns = torch.tensor(l_returns)

# But standardize the rewards to stabilize training

t_returns = (t_returns - t_returns.mean()) / (t_returns.std())

# Now build up our actual policy loss by multiplying it by our logprobs

for logp, discounted_reward in zip(b.l_episode_log_probs, l_returns):

l_policy_loss.append(-logp * discounted_reward)

# Zero our gradient to get ready for backprop

b.optimizer.zero_grad()

# Technically our l_policy_loss is a list of tensors, so smoosh those together

# Then sum to get the total loss

policy_loss = torch.cat(l_policy_loss).sum()

# Now run our optimizer

policy_loss.backward()

b.optimizer.step()

# Text to print some helpful debugging

if debug:

print(f'{b.team} had awards array of {b.l_episode_rewards}')

print(f'{b.team} had l_returns of {l_returns}')

#print(f'{b.team} had l_policy_loss of {l_policy_loss}')

print(f'{b.team} had a policy loss of {policy_loss}')

# And cleanup our epsiode tracking lists now since we don't need them

del b.l_episode_rewards[:]

del b.l_episode_log_probs[:]

Various debugging notes:

-

The mapping of reward and actions within the environment is correct as best I can tell. If I comment out the network updating lines, the bots take all random actions and receive the appropriate rewards.

-

Swapping the sign of the rewards very strangely doesn’t help (the bots still move to the edges). It seems this reliably yields some reward, and it’s as if the bots are just trying to maximize the absolute value of reward received.

Very grateful for any wisdom I can get. This one has me plain stuck.